OpenPLC

What Is OpenPLC?

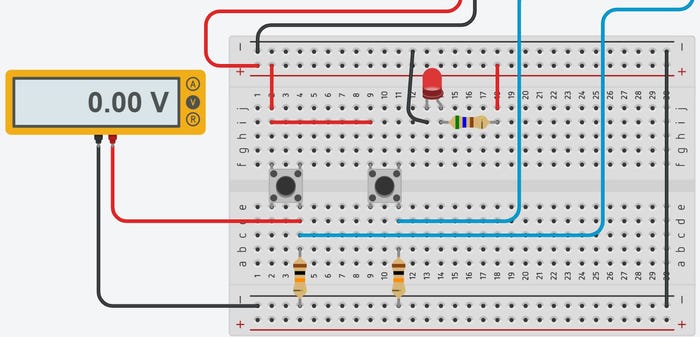

This project, which builds a PLC from an Arduino Uno or compatible board, illustrates OpenPLC’s effective, low-cost tools for automation development.
