How an Autonomous Drone Flies With Deep Learning

A team of engineers at Nvidia share how they created a drone capable of fully autonomous flight in the forest.

May 11, 2017

Autonomous cars haven't even fully hit the roads yet, and companies are already touting the potential benefits of autonomous drones in the sky – from package delivery and industrial inspection, all the way to modern warfare. But a drone presents new levels of challenges beyond a car. While a self-driving car or land-based autonomous robot at least has the ground underneath it to use as a baseline, drones potentially will have a full 360-degree space to move around in and must avoid all of the inherent obstacles and pitfalls associated with this.

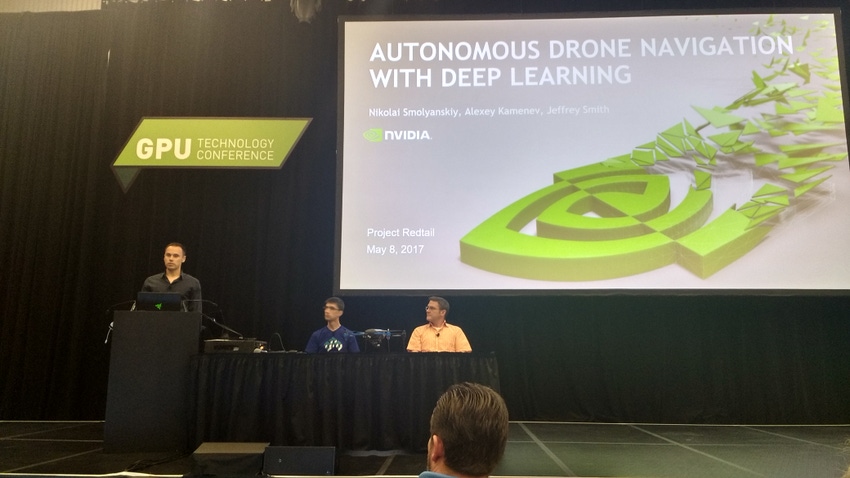

Speaking at the 2017 GPU Technology Conference (GTC), a team of engineers from Nvidia said they believe the solution to freely autonomous drones lies in deep learning. Their research has already yielded a fully autonomous drone flight along a 1 km forest path at 3 m/s, the first flight of its kind according to Nvidia.

“We decided to pick the forest because it's the most complex use case and it applies to search and rescue and military applications,” Nikolai Smolyanskiy, a principal software engineer at Nvidia, told the GTC audience. Forests have challenging light and dynamic environments with light occlusion that make them an absolute nightmare for autonomous flight. Smolyanskiy and his team reason that if they can get an autonomous drone to fly through the forest, they can get one to fly almost anywhere.

The drone was a commercially available 3DR Iris+ quadcopter modified with a 3D-printed mount on its underside to hold an Nvidia Jetson TX1 development board, which handled all of the computation. The board was connected to a small PX4Flow smart camera facing downward that was used in conjunction with a lidar sensor for visual-inertial stabilization.

Over the course of nine months of testing, the team initially tried to use GPS navigation to guide the drone, but quickly discovered it was prone to crashes. It also didn't solve the larger question of how you could deploy these drones in remote areas where GPS might not be available (such as in search and rescue applications). “In areas where GPS is not available, you need to navigate visually,” Smolyanskiy said.

From left: Nvidia engineers Nikolai Smolyanskiy, Alexey Kamenev, and Jeffrey Smith discuss their autonomous drone project at the 2017 GPU Technology Conference. (Image source: Design News)

The Nvidia team opted to use a deep neural network (DNN) they called TrailNet to solve the problem, training it to handle orientation and lateral offset to follow a path through the forest. For obstacle avoidance they employed Simultaneous Localization and Mapping (SLAM), the same algorithmic technology being used to help autonomous cars avoid collisions. SLAM allows the drone to get a sense of itself in 3D physical space as well as where it is in relation to the obstacles around it (in this case, trees, branches, and other foliage).
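As a rough illustration of how orientation and lateral-offset predictions could be turned into a steering command, here is a minimal sketch. The three-class layout for each output, the function name `steering_angle`, and the gain values are assumptions made for the example; the talk as reported here does not describe TrailNet's actual control law.

```python
import math


def softmax(logits):
    """Convert raw network scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def steering_angle(view_logits, offset_logits, beta_view=10.0, beta_offset=10.0):
    """Hypothetical controller: returns a turn in degrees, positive = turn right.

    view_logits   -> scores for [facing left, facing center, facing right]
    offset_logits -> scores for [shifted left, centered, shifted right]
    The gains beta_view and beta_offset are illustrative values only.
    """
    p_view = softmax(view_logits)
    p_offset = softmax(offset_logits)
    # Facing left of the trail, or sitting left of it, calls for a right turn.
    turn_from_view = beta_view * (p_view[0] - p_view[2])
    turn_from_offset = beta_offset * (p_offset[0] - p_offset[2])
    return turn_from_view + turn_from_offset
```

When both outputs are balanced the command is zero, and the turn grows smoothly as the network becomes more certain the drone is facing or drifting to one side.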

“We tried several neural network topologies. So far we've found that the best performing is based on S-ResNet-18 with some modifications,” Alexey Kamenev, a senior deep learning and computer vision engineer at Nvidia, told the GTC audience. Deep Residual Learning Neural Networks (ResNets) were first introduced by Microsoft in 2015 and are specifically targeted at image recognition applications. The key advantage ResNet has over other DNNs is that it is easier to train and optimize. Using openly available image datasets captured by other researchers in the Swiss Alps and the Pacific Northwest (the Nvidia team tested their drone in Seattle), Kamenev said they were able to quickly train the drone.

A first-person demo of the drone's flight shows its ability to recognize a path (green), as well as obstacles (red).

There were, however, challenges that required the team to make adjustments to the ResNet architecture, Kamenev said. “We found the network can be overconfident [when navigating],” he said. “When the drone is pointing left, right, or center it should be confident. But what happens if it's facing in between?” The solution was to implement a loss function that helped the drone find its position at those other angles.
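The article does not say which loss function the team used. As a generic illustration of how a loss can discourage overconfident predictions, the sketch below adds an entropy term to ordinary cross-entropy so that spreading probability across classes is penalized less than a sharp, possibly wrong, guess. The function name and the `entropy_weight` value are assumptions for the example, not details from the talk.

```python
import math


def log_softmax(logits):
    """Numerically stable log-probabilities for a list of raw scores."""
    m = max(logits)
    log_total = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_total for v in logits]


def cross_entropy_with_entropy_bonus(logits, target_index, entropy_weight=0.1):
    """Cross-entropy minus a weighted entropy term (illustrative only).

    A higher-entropy (less peaked) prediction earns a small reduction in
    loss, which nudges the network away from extreme confidence.
    """
    log_probs = log_softmax(logits)
    cross_entropy = -log_probs[target_index]
    entropy = -sum(math.exp(lp) * lp for lp in log_probs)
    return cross_entropy - entropy_weight * entropy
```

With `entropy_weight=0` this reduces to plain cross-entropy, so the extra term can be tuned independently of the base classification objective.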

In addition, the team found that the neural network was easily confused by bright spots in the forest and changes in lighting that came from tree cover. They also needed to account for disturbances like wind that might push the drone off course and require it to adjust. “Some suggested just hitting the drone with a stick,” Kamenev laughed. “But we just used manual override to turn the drone and see how well it gets back onto the path.”

“In addition to low level navigation we also wished to have some kind of system to help us avoid obstacles,” Jeffrey Smith, a senior computer vision software engineer at Nvidia, said, explaining the use of SLAM in the drone. “Unexpected hazards have to also be identified.”

Smith said that while the SLAM system does a good job of estimating the camera's position as well as its position in real-world space, it also has issues with accuracy and missing scale information. “We can track objects, but can't tell how far away they are in real world units,” Smith said.

The Nvidia team experimented with several deep neural networks and got the best results from S-ResNet-18. (Image source: Design News)

Smith said that by using an algorithm based on Procrustes analysis, a type of statistical shape analysis, the team was able to determine translation, rotation, and scaling factors. “The real world intrudes in the form of measurement error,” he said. “There was a 10-20% error in the distance estimates.”
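To make the idea concrete, here is a minimal sketch of a Procrustes-style similarity alignment: given matching points in two coordinate frames, it recovers a scale, rotation, and translation in closed form using an SVD. This is the standard textbook formulation, not necessarily the team's algorithm, and the inputs `src` (points in SLAM space) and `dst` (the same points in real-world units) are hypothetical.

```python
import numpy as np


def similarity_procrustes(src, dst):
    """Find scale s, rotation R, translation t so that dst_i ~ s * R @ src_i + t.

    src, dst: arrays of shape (n_points, dim) with matching rows.
    Returns (s, R, t) minimizing the summed squared alignment error.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n_points, dim = src.shape

    # Center both point sets on their means.
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst

    # Cross-covariance between the centered sets, then its SVD.
    cov = dst_c.T @ src_c / n_points
    U, S, Vt = np.linalg.svd(cov)

    # Flip the last axis if needed so R is a proper rotation, not a reflection.
    D = np.eye(dim)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        D[-1, -1] = -1.0

    R = U @ D @ Vt
    var_src = (src_c ** 2).sum() / n_points
    s = np.trace(np.diag(S) @ D) / var_src
    t = mu_dst - s * R @ mu_src
    return s, R, t
```

With noise-free correspondences the transform is recovered exactly; with noisy measurements the result is only a least-squares fit, which is one way errors like the 10-20% Smith mentions can creep into distance estimates.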

Ultimately, Smith said, the team realized they didn't need to convert from SLAM space to real-world space at all: “Because we aren't doing mapping we don't care about scale, we are about not hitting things.”

The type of camera also presented challenges. “We used a simple webcam,” Smith said. “The problem with inexpensive digital cameras is their rolling shutter. SLAM assumes an image is being taken all at once. That's not true for rolling shutter.” Since rolling shutter captures a scene by rapidly scanning it vertically or horizontally, it introduces anomalies that aren't visible to the naked eye, but which SLAM picks up on – resulting in huge errors in SLAM algorithms. The solution, Smith said, was to implement a semi-dense SLAM specifically for rolling shutter.

Certainly the Nvidia team's project is a long way away from being the sort of fully capable drone we'd imagine in a science fiction film. In the tests, the drone was only able to fly one to two meters off the ground and was limited to a relatively slow speed. Smolyanskiy admitted that the model probably won't work at night right now without special night vision cameras, and it may also need additional training to handle rain and other conditions. “The problem was not with the DNN but with the optical flow in low light conditions,” he said.

The team's goal with the project was to keep it as inexpensive as possible with an eye toward miniaturization. Smolyanskiy said that, given the small form factor of the Jetson TX1 and TX2 development boards, it's not hard to imagine a smaller drone handling these tasks already.


Chris Wiltz is the Managing Editor of Design News.
