AI Compute Company Banks on Chiplets for Future Processors

AI inference company develops scalable packaging platform to achieve higher performance and cost-effective interconnections.

Chiplet packaging is catching on with companies designing high-performance processors for data center and AI applications. While familiar names such as Intel and AMD are in this space, so are some smaller startup companies. One of them is d-Matrix, a young company developing technology for AI-compute and inference processors.

Founded by CEO Sid Sheth and Chief Technical Officer Sudeep Bhoja, d-Matrix is tackling memory-compute integration, a problem considered the final frontier in AI compute efficiency. The company has developed patented technologies that address the physics of that integration using innovative circuit techniques, ML tools, software, and algorithms.

In an interview with Design News, Sheth said chiplet technology, in which multiple smaller chips are connected together, was the way to go for developing AI and high-end server processors. “We are able to scale up and rapidly deploy our platform using smaller chips. We can get a lot more silicon and more easily test and verify these parts,” he added.

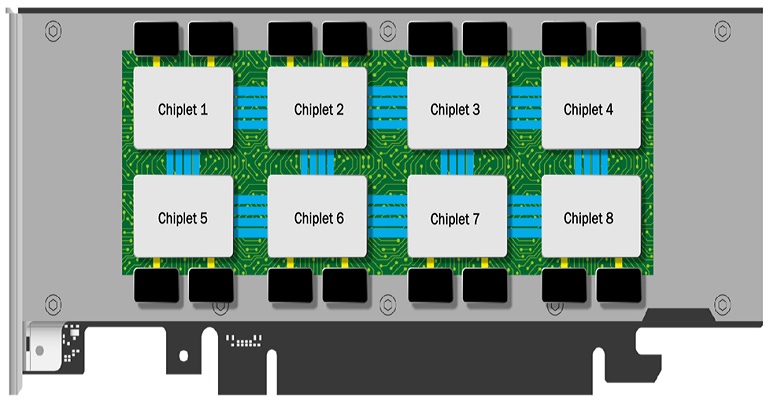

The Bunch of Wires (BoW) based chiplet platform, called Jayhawk, provides energy-efficient die-to-die connectivity over organic substrates. The 8-chiplet AI system uses 6nm process technology from foundry company TSMC. The interconnect comprises one differential clock pair and a 16-bit, single-ended data bus. It provides 16 Gbps of bandwidth per wire and achieves an energy efficiency of better than 0.5 picojoules/bit.
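To put those interconnect figures in perspective, the short Python sketch below works through the arithmetic. It is a back-of-the-envelope estimate and not a d-Matrix specification: it assumes all 16 data wires run at the full 16 Gbps at the same time, that the clock pair carries no payload, and that the 0.5 picojoules/bit figure can be treated as an upper bound on energy for the whole link. The function and constant names are illustrative only.

```python
# Figures quoted in the article for the BoW interconnect.
DATA_WIRES = 16        # 16-bit, single-ended data bus
GBPS_PER_WIRE = 16.0   # 16 Gbps per wire
PJ_PER_BIT_MAX = 0.5   # "better than 0.5 pJ/bit", treated here as an upper bound


def aggregate_bandwidth_gbps(wires: int = DATA_WIRES,
                             gbps_per_wire: float = GBPS_PER_WIRE) -> float:
    """Raw data bandwidth in Gbps, assuming every wire runs at full rate."""
    return wires * gbps_per_wire


def link_power_mw(bandwidth_gbps: float,
                  pj_per_bit: float = PJ_PER_BIT_MAX) -> float:
    """Link power in milliwatts.

    Gbit/s * pJ/bit = (1e9 bit/s) * (1e-12 J/bit) = 1e-3 J/s, i.e. milliwatts,
    so the two numbers can simply be multiplied.
    """
    return bandwidth_gbps * pj_per_bit


if __name__ == "__main__":
    bw = aggregate_bandwidth_gbps()
    power = link_power_mw(bw)
    print(f"Aggregate bandwidth: {bw:.0f} Gbps ({bw / 8:.0f} GB/s)")
    print(f"Upper-bound link power: {power:.0f} mW")
```

Under these assumptions, the 16-wire bus would move roughly 256 Gbps (32 GB/s) while drawing no more than about 128 mW, which illustrates why sub-picojoule-per-bit signaling matters when many such links connect chiplets.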

d-Matrix used the Nighthawk chiplet platform, launched in 2021, to produce the Jayhawk inference compute platform. The company is aiming Jayhawk at generative AI and large language model (LLM) transformer applications, targeting a 10-20X improvement in performance. By using a modular chiplet-based approach, data center customers can refresh compute platforms on a much faster cadence using a pre-validated chiplet architecture.

To enable this, d-Matrix plans to build chiplets based on not just the BoW interconnect platform, but also the open UCIe interconnect standard. Sheth noted that supporting both interconnect platforms would enable a heterogeneous computing platform that can accommodate third-party chiplets.

Spencer Chin is a Senior Editor for Design News covering the electronics beat. He has many years of experience covering developments in components, semiconductors, subsystems, power, and other facets of electronics from both a business/supply-chain and technology perspective. He can be reached at [email protected].
