Extrasensory Robots: How Do You Feel About Them?

Robots of tomorrow may have better senses than humans.

December 12, 2022

In my previous column (Are We Ready for the Robot Revolution?), I mentioned that my wife (Gina the Gorgeous) is keen on our having a humanoid robot to perform all the tasks she’s already trained me to do around the house.

We also introduced the human-sized EVE robot from Halodi Robotics. Although this bodacious beauty doesn’t have legs and feet as such, its wheeled base allows it to go anywhere the facilities comply with the Americans with Disabilities Act (ADA), including travelling up ramps, riding in elevators, and so forth. Boasting arms and hands, EVE can squat down to pick things up off the floor and reach up to place things on shelves.

One of the things I never really used to think about when I read books or watched movies featuring robots was how they sensed the world around them. I think we all assume that robots capable of helping around the house will be equipped with camera-based vision, but what about other senses like taste and touch?

As an aside, thinking about the sense of touch reminds me of the old joke about ... a silly person who visits their physician and says: “Doctor, I’m in agony, I hurt all over!” The doctor replies, “What do you mean you hurt all over?” The patient responds by prodding their forehead, nose, knee, elbow, etc., exclaiming “Ouch!” each time. “That’s easy,” says the doctor, “You’ve broken your finger!” (I didn’t say it was a good joke.)

As another aside (and this isn’t a joke), I visited a neurologist a few years ago because of a weird pain I was getting in my right leg a few inches below the knee. After chatting about this for a while, the doctor requested that I close my eyes and say when I felt him prodding me with the end of a paper clip. After a few minutes of this, he told me to open my eyes and look at my leg, upon which he’d drawn an ellipse about 1 inch wide and 5 inches long. He then told me, “You have no sensation in this area.” My knee-jerk reaction (no pun intended) was to say, “That can’t be right because I can feel it when I touch myself with my finger.” He responded that the only sensation I was really getting was from my finger—my brain was augmenting this with what it expected to feel from my leg.

Speaking of senses, how many do you think we are talking about? Let’s start with humans: how many senses do we have? This is the point where most folks respond with what they were taught by rote at school, saying “We have five senses: sight, hearing, touch, taste, and smell.” In reality, as I wrote in my blog, People With More Than Five Senses, we have at least nine senses (including thermoception, nociception, proprioception, and equilibrioception, to name just the obvious ones), and possibly as many as twenty or more.

Returning to robots, and sticking with the original five senses, there’s currently a lot of work underway with respect to creating olfactory and gustatory receptors/sensors that will be able to provide the machine equivalent of human smell and taste senses.

In the case of machine vision, the last 10 years or so have seen tremendous strides with respect to things like object detection and recognition. Interestingly enough, the parameters used by image signal processing (ISP) pipelines are traditionally hand-tuned by human experts over many months. A company called Algolux has some very interesting products that use artificial intelligence (AI) to tune ISP pipelines to make them better suited for machine vision applications.

And, of course, robots aren’t limited to using only the electromagnetic wavelengths available to us humans. They could also be equipped with radar (which can “see” in the dark) and lidar sensors (which can do all sorts of interesting things), thereby giving the robots a form of “extrasensory perception.” In the case of lidar, for example, the folks at a company called SiLC Technologies are making a sensor that can extract instantaneous depth, velocity, and polarization-dependent intensity on a per-pixel basis.

What about sound? Whenever I mention this sense in the context of robots, most people immediately think of something like an Alexa on steroids; that is, a robot that can understand and respond to spoken commands. However, sensing sound offers the potential for much more powerful capabilities. For example, my cousin Graham is now 100% blind due to a medical condition whose name I forget (I couldn’t spell it even if I could remember it). The point is that I’ve seen Graham navigate his way through city streets and buildings almost as though he were sighted. I can only assume he’s developed some form of echolocation ability, and the same sort of ability could be gifted to robots.

This reminds me of a company called XMOS whose XCORE processors and algorithms have the ability to “disassemble a sound space.” What this means is that you can have a roomful of people talking and, by means of an Amazon Echo-like microphone array, the folks at XMOS can separate out all the individual voices and track people as they move around the room in real time. If you were accompanied by a robot called Max (the name of almost every dog and robot in every science fiction film) equipped with this capability, you could say, “Max, what are those two people talking about over there?” and Max could respond in tones reminiscent of Marvin the Paranoid Android, “You really don’t want to know.”

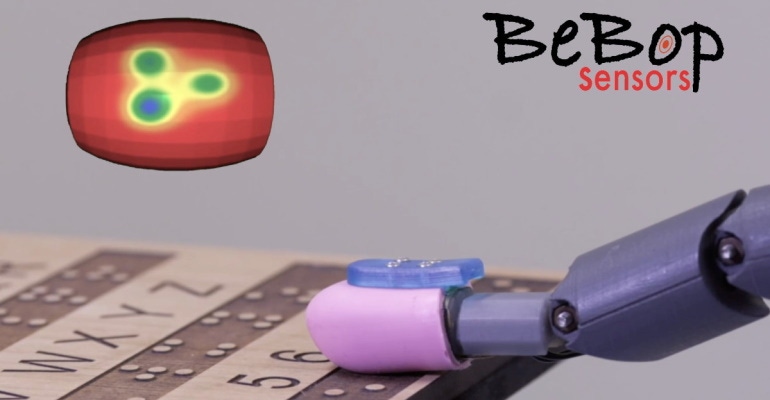

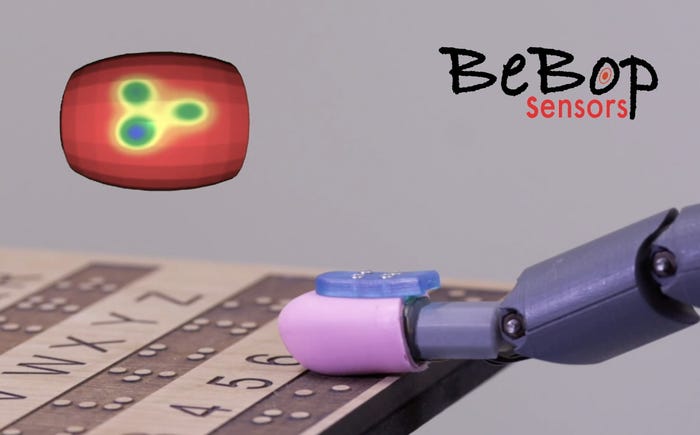

Last, but certainly not least, for this column, we have the sense of touch. I was recently introduced to Keith McMillen, who is the founder and CTO of a company called BeBop Sensors. Keith told me that they’ve created a smart fabric called BeBop RoboSkin that can be used to create a skin-like covering to provide humanoid robots with tactile awareness that exceeds the capabilities of human beings with respect to spatial resolution and sensitivity.

I must admit that I was a little doubtful when I first heard this, but then Keith showed me an image of an android finger equipped with BeBop RoboSkin reading Braille.

Have you ever tried reading Braille yourself? Each cell (character) is distinguished by the presence or absence of six raised dots. I have some Braille text sheets here in my office. I find it impossible to tell how many raised dots are under my finger without lifting it up and looking (I know I would get better with practice).
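
For the programmers among us, the "presence or absence of six raised dots" idea maps neatly onto a six-bit number. The following is only a minimal sketch of that idea in Python; the function name `cell_to_char` is my own invention for illustration. It relies on the standard dot numbering (1 to 3 down the left column, 4 to 6 down the right) and on the Unicode Braille Patterns block, which starts at U+2800 and uses one bit per dot.

```python
# Six dots, each raised or flat, give 2**6 possible cells (including the blank one).
NUM_PATTERNS = 2 ** 6


def cell_to_char(dots):
    """Return the Unicode Braille character for a collection of raised dots (1-6).

    Hypothetical helper for illustration: dot n sets bit (n - 1), and the
    result is offset into the Unicode Braille Patterns block at U+2800.
    """
    bits = 0
    for dot in dots:
        if not 1 <= dot <= 6:
            raise ValueError(f"dot numbers run from 1 to 6, got {dot}")
        bits |= 1 << (dot - 1)
    return chr(0x2800 + bits)


print(NUM_PATTERNS)           # number of distinct six-dot cells
print(cell_to_char({1}))      # dot 1 only (the letter "a" in English Braille)
print(cell_to_char({1, 4}))   # dots 1 and 4 (the letter "c" in English Braille)
```

A fingertip (human or android) reading Braille is therefore trying to recover six bits per cell, which is why the spacing of the sensing elements matters so much.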

Keith informs me that the nerves in our fingertips have a pitch of about 4 mm. He says one easy way to test this is to take two blunt points (like plastic knitting needles), close your eyes, and get a friend to touch the end of your finger with the points. Keith says that if the distance between the points is less than 4 mm, then you will perceive them as being a single point. By comparison, the sensors in the BeBop RoboSkin shown here are presented in an array with a 2 mm x 3 mm pitch.
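
To put some rough numbers on this, here is a back-of-the-envelope sketch in Python. It is a deliberately crude model of my own, not anything from BeBop: it assumes the 4 mm human pitch applies equally in both directions, and it treats two points as distinguishable only when they are at least one pitch apart, which is the simple threshold Keith describes. The function names are hypothetical.

```python
HUMAN_PITCH_MM = (4.0, 4.0)     # assumption: same ~4 mm pitch in both directions
ROBOSKIN_PITCH_MM = (2.0, 3.0)  # 2 mm x 3 mm array pitch quoted for BeBop RoboSkin


def perceived_as_two_points(separation_mm, pitch_mm):
    """Crude threshold model: points closer than the pitch blur into one."""
    return separation_mm >= pitch_mm


def elements_per_cm2(pitch_xy_mm):
    """Sensing elements per square centimetre for a regular grid of this pitch."""
    pitch_x, pitch_y = pitch_xy_mm
    return 100.0 / (pitch_x * pitch_y)  # 1 cm^2 = 100 mm^2


human_density = elements_per_cm2(HUMAN_PITCH_MM)
skin_density = elements_per_cm2(ROBOSKIN_PITCH_MM)

print(f"Human fingertip: {human_density:.2f} elements per cm^2")
print(f"RoboSkin array:  {skin_density:.2f} elements per cm^2")
print(f"Ratio:           {skin_density / human_density:.2f}x")

# Two knitting needles held 3.5 mm apart under this simple model.
print(perceived_as_two_points(3.5, HUMAN_PITCH_MM[0]))     # below the 4 mm pitch
print(perceived_as_two_points(3.5, ROBOSKIN_PITCH_MM[1]))  # above the 3 mm pitch
```

Under these assumptions, the 2 mm x 3 mm array packs in a little under three times as many sensing elements per unit area as a fingertip with a 4 mm pitch. Real skin and real sensor arrays are more subtle than a fixed grid, so treat this as an illustration of the arithmetic only.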

So, now we have robots that can dance better than I and can read Braille better than I. On the one hand, I don’t think we are poised on the brink of a robot apocalypse just at the moment. On the other hand, I do think it might be a good idea to dig out my Aluminum Foil Deflector Beanie and give it a bit of a polish. In addition to shielding one’s brain from most electromagnetic psychotronic mind control carriers, such a beanie is bound to confuse any potential robot overlords.

How about you? Do you have any cogitations and ruminations you’d care to share about robots in general and about robot sensors and senses in particular? As always, I welcome your comments and questions.
