The End is Near for MIL-HDBK-217 and Other Outdated Handbooks

Why do some people in the electronics industry keep using outdated approaches to predict component reliability? Here are four key reasons.

July 17, 2018

For decades, the electronics industry has been stuck using the obsolete and inaccurate MIL-HDBK-217, along with similar empirical handbooks, to make reliability predictions that are required by the top of the supply chain (Department of Defense, FAA, Verizon, etc.). Each of these handbooks (some of which have not been updated in more than two decades) assigns a constant failure rate to every component. It then arbitrarily applies modifiers ("pi factors") to that base failure rate ("lambda") based on temperature, humidity, quality, electrical stresses, etc. This simplistic approach was appropriate back in the '50s and '60s, when the method was first developed. It can no longer be justified, however, given the rapid improvement in simulation tools and the extensive access to component data.
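To make the criticism concrete, here is a minimal sketch of the arithmetic a handbook-style prediction boils down to. It assumes the general multiplicative form used for many part types (a part failure rate equal to a base rate times a product of pi factors) and a constant failure rate for every part. The `Part` class, the part names, and every numeric value are hypothetical placeholders, not figures from MIL-HDBK-217 or any other handbook, and some part types in the real handbooks combine their terms differently.

```python
from dataclasses import dataclass
from math import prod


@dataclass
class Part:
    """Hypothetical part record for a handbook-style prediction."""
    name: str
    base_rate_fit: float          # base failure rate, failures per 1e9 hours (FIT)
    pi_factors: dict[str, float]  # multiplicative modifiers, all hypothetical

    def failure_rate_fit(self) -> float:
        # Part failure rate = base rate x product of all pi factors.
        return self.base_rate_fit * prod(self.pi_factors.values())


def system_mtbf_hours(parts: list[Part]) -> float:
    # Constant-rate assumption: the system rate is the plain sum of the
    # part rates, and MTBF is its reciprocal (1e9 hours / total FIT).
    total_fit = sum(p.failure_rate_fit() for p in parts)
    return 1e9 / total_fit


parts = [
    Part("microcontroller", 10.0, {"temperature": 2.0, "quality": 1.0, "environment": 4.0}),
    Part("ceramic capacitor", 1.0, {"temperature": 1.5, "quality": 3.0, "environment": 2.0}),
    Part("resistor", 0.5, {"temperature": 1.2, "quality": 1.0, "environment": 2.0}),
]

for p in parts:
    print(f"{p.name}: {p.failure_rate_fit():.1f} FIT")
print(f"system MTBF: {system_mtbf_hours(parts):,.0f} hours")
```

Note what is missing: nothing in the calculation knows how or where a part actually fails. Changing a pi factor simply scales a number that is then added to the others.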

So, why do some people in the electronics industry keep using these approaches? Four key misconceptions seem to breathe life into these archaic documents even after they have been proven wrong over and over and over again.

Misconception #1: Empirical handbooks are based on actual field failures.

Theoretically, this could be true. In practice, the process is a little more muddled. MIL-HDBK-217 clearly does not reflect current field failures: it has not been updated in over 20 years. The same goes for IEC 62380, which was published in 2004 and is based on even older field data. What about the rest, like SR-332, FIDES, or SN29500? Yes, they are updated more regularly, but their fatal flaw is their very limited source of information. There are indications that fewer than 10 companies, and sometimes fewer than 5, submit field failure information to these documents. How relevant is failure data from 5 companies to the other 120,000 electronic OEMs in the world? Not very.

And it gets even worse the deeper you go. Most of these companies do not identify the specific failure location on all of their field failures. Failure analysis when there is a high number of failures? Yes. Failure analysis on high value products? Yes. The rest of the stuff? Repair and replace or just throw it away. This results in a very teeny, tiny number of samples being the basis for these "etched in stone" failure rates. And what if this arbitrary self-selection causes some components to not have any field failure information? Only two options: Keep the old failure rate number or make up a new one.

It should give all of us some pause. The reliability of airplanes, satellites, and telephone networks could be, in some very loose way, based on an arbitrary set of filtered data from a self-selected group of three companies.
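The effect of a very small sample can be made concrete with the standard one-sided upper confidence bound on a constant failure rate: for r failures observed over T cumulative device-hours, the bound at confidence C is the chi-square quantile χ²(C; 2r + 2) divided by 2T. The minimal sketch below, using only the Python standard library, reaches the same bound by solving the equivalent Poisson equation with bisection. It assumes a constant failure rate (the same assumption the handbooks make), and the failure counts and hours are hypothetical.

```python
from math import exp


def poisson_cdf(k: int, mean: float) -> float:
    """P(N <= k) for N ~ Poisson(mean)."""
    term = exp(-mean)
    total = term
    for i in range(1, k + 1):
        term *= mean / i
        total += term
    return total


def upper_rate_bound(failures: int, device_hours: float, confidence: float = 0.90) -> float:
    """One-sided upper confidence bound on a constant failure rate.

    Finds the expected failure count m with P(N <= failures | m) = 1 - confidence,
    which matches chi2(confidence, 2 * failures + 2) / 2, then divides by hours.
    """
    alpha = 1.0 - confidence
    lo, hi = 0.0, 1.0
    while poisson_cdf(failures, hi) > alpha:  # expand until the root is bracketed
        hi *= 2.0
    for _ in range(200):  # bisection; the CDF falls as the mean grows
        mid = (lo + hi) / 2.0
        if poisson_cdf(failures, mid) > alpha:
            lo = mid
        else:
            hi = mid
    return hi / device_hours


# Hypothetical data: same observed rate (1 failure per 1e6 hours), different sample sizes.
for failures, hours in [(2, 2e6), (200, 2e8)]:
    point_fit = failures / hours * 1e9
    bound_fit = upper_rate_bound(failures, hours) * 1e9
    print(f"{failures:>3} failures: point {point_fit:.0f} FIT, 90% upper bound {bound_fit:.0f} FIT")
```

Both hypothetical data sets give the same point estimate of 1,000 FIT, but with 2 failures the 90% upper bound is roughly 2.7 times that estimate, while with 200 failures it is only about 10% higher.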

Misconception #2: Past performance is an indication of future results.

That disclaimer on mutual funds is there for a reason. Fewer than 0.3% of mutual funds deliver top 25% returns four years in a row[1]. Have you ever thought about why mutual funds are unable to consistently deliver? It’s the same reason why handbooks are unable to consistently deliver. Both are unable to capture the true underlying behavior that drives success and failure. Critical details, like how companies treat their customers or the state of their R&D pipeline, are fundamental to the success of companies, but mutual fund managers often do not account for them because doing so is "too hard" and "too expensive."

The same rationale is used by engineers who rely on handbooks. The reason why one product had a mean time between failures (MTBF) of 100 years and another had an MTBF of 10 years may have nothing to do with temperature or quality factors or number of transistors or electrical derating. If you really want to understand and predict reliability, you have to know all the ways the product will fail. Yes, this is hard. And yes, this is really hard with electronics. How hard? Let’s run through a scenario.

A standard piece of electronics will have approximately 200 unique part numbers and 1,000 components. About 20 of these 200 unique parts will be integrated circuits. Off the top of my head, each integrated circuit will have up to 12 possible ways to fail in the field (ignoring defects). These include dielectric breakdown over time, electromigration, hot carrier injection, bias temperature instability, EOS/ESD, EMI, wire bond corrosion, wire bond intermetallic formation, solder fatigue (thermal cycling), solder fatigue (vibration), solder failure (shock), and metal migration (on the PCB). This means you would have to calculate 240 combinations (20 parts × 12 mechanisms) of part and failure mechanism. Each one requires geometry information, material information, environmental information, etc. And that’s just for integrated circuits!

But these are the true reliability fundamentals of electronics. And, just like stock pickers, if you capture the true fundamentals, you will get it right every single time.
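The bookkeeping behind the 240 figure is easy to underestimate, so here it is as a minimal sketch for the integrated circuits alone. The 12 mechanisms are the ones listed above; the part-number labels and the `REQUIRED_INPUTS` tuple are hypothetical stand-ins for the geometry, material, and environmental information each analysis needs.

```python
from itertools import product

# The 12 IC failure mechanisms listed in the text (defects excluded).
IC_MECHANISMS = [
    "dielectric breakdown over time",
    "electromigration",
    "hot carrier injection",
    "bias temperature instability",
    "EOS/ESD",
    "EMI",
    "wire bond corrosion",
    "wire bond intermetallic formation",
    "solder fatigue (thermal cycling)",
    "solder fatigue (vibration)",
    "solder failure (shock)",
    "metal migration (PCB)",
]

# Input categories named in the text; the tuple itself is illustrative.
REQUIRED_INPUTS = ("geometry", "materials", "environment")

# Hypothetical labels for the ~20 unique integrated-circuit part numbers.
ic_part_numbers = [f"IC-{i:02d}" for i in range(1, 21)]

# One physics-of-failure analysis task per (part, mechanism) pair.
tasks = [
    {"part": part, "mechanism": mechanism, "needs": REQUIRED_INPUTS}
    for part, mechanism in product(ic_part_numbers, IC_MECHANISMS)
]

print(f"{len(ic_part_numbers)} parts x {len(IC_MECHANISMS)} mechanisms = {len(tasks)} analyses")
```

Each entry is a separate physics calculation, before passive parts, connectors, and the board itself are added.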

Misconception #3: The bathtub curve exists.

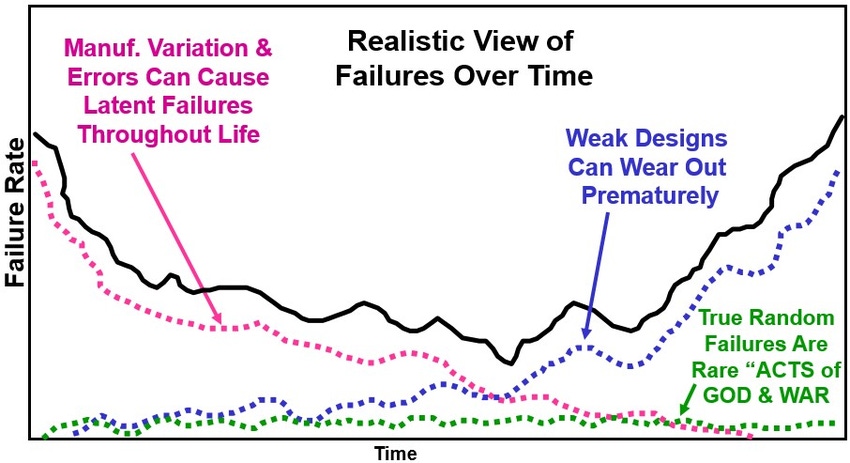

There is a belief that the reliability of any product can be described by a declining failure rate (quality), a steady state failure rate (operational life), and an increasing failure rate (robustness). If this is truly the behavior of a fielded product, one can understand the motivation for handbooks that calculate an MTBF. To avoid the portion of life at which the failure rate declines, companies will screen their products. To avoid the portion of life where the failure rate increases, companies will overdesign their products. If both activities are done well, the only thing to worry about is the middle of the bathtub curve. Right?

The traditional “bathtub curve,” in which reliability is described by a declining failure rate (quality), a steady-state failure rate (operational life), and an increasing failure rate (robustness), is based on a misconception. (Image source: DfR Solutions)

Wrong! The first and biggest problem is this concept of "random" failures that occur during the operational lifetime. If the failures are truly random, the rate at which they occur should be independent of the design of the product. And if they are independent of the design, why would you try to calculate the failure rate based on the design? One slightly extreme example would be the failures of utility meters because a cat decided to urinate on the box. This failure is truly random and, because it is random, it has nothing to do with the design. (Side note: No one ever got fired because the rate of these truly "random" events was too high.) This failure mode may be partially dependent on the housing/enclosure, but housings and enclosures are not considered in empirical prediction handbooks.

The actual failure rate of products in the field is based on a combination of decreasing failure rates due to quality, increasing failure rates due to wear-out, and a very small number of truly random occurrences. (Image source: DfR Solutions)

The reality is that the failure rate during operational life is a combination of decreasing failure rates due to quality, increasing failure rates due to wear-out, and a very small number of truly random occurrences. The wear-out portion is becoming more common because the shrinking features of the current generation of integrated circuits are causing wear-out earlier than ever before. IC wear-out behavior is different from the wear-out seen with moving parts and interconnect fatigue: most failure mechanisms associated with integrated circuits have very mild wear-out behavior (Weibull slopes, or shape parameters, of 1.2 to 1.8). This means that these failures can be really hard to see in warranty returns, but they're there.
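The combined behavior described above can be sketched by adding the hazards (instantaneous failure rates) of independent competing mechanisms: a Weibull term with shape beta < 1 for quality-related early failures, a small constant term for truly random events, and a mild wear-out term with beta inside the 1.2 to 1.8 range cited above. The Weibull hazard h(t) = (beta/eta)(t/eta)^(beta - 1) is standard; every parameter value here is hypothetical and chosen only to show the shape.

```python
def weibull_hazard(t: float, beta: float, eta: float) -> float:
    """Weibull hazard (instantaneous failure rate): (beta/eta) * (t/eta)**(beta - 1)."""
    return (beta / eta) * (t / eta) ** (beta - 1)


# Hypothetical parameters; time in years, rates per year.
INFANT = (0.5, 2000.0)   # beta < 1: decreasing rate from quality/defect escapes
RANDOM_RATE = 1e-4       # constant rate for truly random events
WEAR_OUT = (1.5, 60.0)   # beta within the 1.2-1.8 range cited for IC mechanisms


def total_hazard(t: float) -> float:
    # Independent competing mechanisms: their hazards simply add.
    return weibull_hazard(t, *INFANT) + RANDOM_RATE + weibull_hazard(t, *WEAR_OUT)


for t in (0.1, 1, 2, 5, 10, 15):
    wear_share = weibull_hazard(t, *WEAR_OUT) / total_hazard(t)
    print(f"t = {t:>4} yr: total {total_hazard(t):.2e}/yr, wear-out share {wear_share:.0%}")
```

With these made-up numbers the total rate dips and then climbs gently instead of settling onto a flat "random" floor, and the random term stays under 1% of the total; the mild wear-out slope is what makes the rise easy to miss in warranty data.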

Misconception #4: Reliability Physics cannot be used to predict operating life performance.

So, now we get to the real reason why these handbooks are still around: There is nothing available to replace them. At least, that can be the mentality. If you scratch the surface, however, there are other forces at play. The first is human nature: people do not ask for more work, and switching from empirical prediction to reliability physics will be more work. The activity goes from simple addition (failure rate 1 + failure rate 2 + failure rate 3 + …) to algorithms that can contain hyperbolic tangents (say that three times fast) and may require knowledge of circuit simulation, finite element analysis, and a lot of other crazy stuff. For reliability engineers trained in classic reliability, which teaches you to use the same five techniques regardless of product or industry, this can be daunting.

The second is that the motivation to change practices is not there. In many organizations and industries, traditional reliability prediction can become a “check the box” activity, and few realize the damaging influence it has on design, time to market, and warranty returns. Companies end up implementing very conservative design practices, such as military-grade parts or excessive derating, because these activities are rewarded in the empirical prediction world. Many times, design teams guided toward these practices have no idea of the original motivation (i.e., “we have always done it this way”). If reliability prediction becomes a check-the-box activity, design is forced to go through the laborious design-test-fix process (also known as reliability growth, though it is more wasting time than growing anything). Finally, since handbook reliability prediction is divorced from the real world, the eventual cost of warranty returns can swing wildly in magnitude from product to product, and the product group neither expects nor predicts these costs.
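For a sense of what replaces simple addition in the switch to reliability physics described above, here is one of the more compact physics-of-failure models, Black's equation for electromigration, MTTF = A·J^(-n)·exp(Ea/(kT)), used to compute an acceleration factor between test and use conditions. It is one illustrative model, not the article's method. The defaults n = 2 and Ea = 0.7 eV are assumed typical values, and the current densities and temperatures in the usage line are hypothetical; a real analysis needs constants characterized for the actual process and metallization.

```python
from math import exp

BOLTZMANN_EV = 8.617333262e-5  # Boltzmann constant in eV/K


def black_mttf(current_density: float, temp_c: float, a: float, n: float, ea_ev: float) -> float:
    """Black's equation for electromigration: MTTF = A * J**(-n) * exp(Ea / (k * T))."""
    temp_k = temp_c + 273.15
    return a * current_density ** (-n) * exp(ea_ev / (BOLTZMANN_EV * temp_k))


def acceleration_factor(j_test: float, t_test_c: float, j_use: float, t_use_c: float,
                        n: float = 2.0, ea_ev: float = 0.7) -> float:
    """Ratio of use-condition MTTF to test-condition MTTF; the prefactor A cancels."""
    return black_mttf(j_use, t_use_c, 1.0, n, ea_ev) / black_mttf(j_test, t_test_c, 1.0, n, ea_ev)


# Hypothetical stresses; current density in any consistent unit (e.g. MA/cm^2).
af = acceleration_factor(j_test=2.0, t_test_c=150.0, j_use=0.5, t_use_c=85.0)
print(f"acceleration factor: {af:.0f}")
```

Because A cancels in the ratio, the acceleration factor depends only on the stress ratios and the assumed n and Ea, which is why those two constants carry so much weight.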

Most engineers and managers will agree that critical decisions regarding design and reliability should be based on robust analyses and data. With the race toward autonomy, AI, and IoT, electronics reliability cannot be just an afterthought. Consumers increasingly depend on electronics for safety. Reliability predictions must be based on real data and real-world conditions. In Part II of this article, we will address in greater detail the brave new world of reliability physics.

Preview of Part II: Reliability Physics, A Brave New World

Industry-leading companies around the world are now using reliability physics to predict extended warranty returns and operational failure rate more accurately and with more consistency than any empirical handbook ever dared dream. Accomplishing this requires two actions: Capture the relevant degradation mechanisms (see the original 12 for integrated circuits) and use robust statistical techniques to extrapolate failure rates through the operational lifetime. When these two activities are combined, companies are, for the first time, able to truly see how any and all design decisions (part selection, derating, materials, temperature, layout, housing, etc.) affect failure rates, warranty returns, customer satisfaction, and organizational profitability.
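As a minimal sketch of the second step, assuming each relevant mechanism has already been characterized by a Weibull distribution, the mechanisms can be treated as competing risks: any one of them fails the product, so their cumulative hazards add and the fraction failed by time t is 1 - exp(-H(t)). The mechanism names are drawn from the 12 above, but the Weibull parameters are hypothetical, and this is only one common statistical technique, not necessarily the one Part II describes.

```python
from math import exp

# Hypothetical Weibull parameters (shape beta, scale eta in years) per mechanism.
MECHANISMS = {
    "solder fatigue (thermal cycling)": (2.5, 40.0),
    "electromigration": (1.5, 120.0),
    "wire bond intermetallic formation": (1.8, 90.0),
}


def cumulative_hazard(t: float) -> float:
    # Competing risks: the first mechanism to occur fails the product,
    # so the cumulative hazards H_i(t) = (t / eta_i) ** beta_i add up.
    return sum((t / eta) ** beta for beta, eta in MECHANISMS.values())


def fraction_failed(t: float) -> float:
    # Unreliability F(t) = 1 - exp(-H(t)).
    return 1.0 - exp(-cumulative_hazard(t))


def interval_failure_rate(t1: float, t2: float) -> float:
    # Average failure rate over [t1, t2], per surviving unit per year.
    return (cumulative_hazard(t2) - cumulative_hazard(t1)) / (t2 - t1)


for year in (1, 3, 5, 10):
    print(f"by year {year:>2}: {fraction_failed(year):.2%} failed, "
          f"rate in year {year}: {interval_failure_rate(year - 1, year):.2e}/yr")
```

Swapping a part, derating it, or changing its temperature would show up as a change in one mechanism's parameters, which is the kind of link between design decisions and warranty returns the text describes.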

[1] https://finance.yahoo.com/news/mutual-fund-past-performance-scorecard-171510113.html

Craig Hillman, PhD, is CEO of DfR Solutions.
