GPUs and CPUs are reaching their limits as far as AI is concerned. That’s why Tenstorrent is creating something different.

May 12, 2020

GPUs and CPUs are not going to be enough to ensure a stable future for artificial intelligence. “GPUs are essentially at the end of their evolutionary curve,” Ljubisa Bajic, CEO of AI chip startup Tenstorrent, told Design News. “[GPUs] have done a great job; they’ve pushed the field to the point where it is now. But in order to make any kind of order of magnitude type jumps, GPUs are going to have to go.”


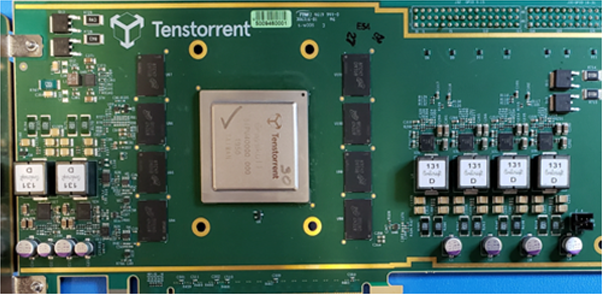

Tenstorrent's Grayskull processor is capable of operating at up to 368 TOPS with an architecture much different than any CPU or GPU (Image source: Tenstorrent)

Bajic knows quite a bit about GPU technology. He spent some time at Nvidia, the house that GPUs built, working as a senior architect. He also spent a few years working as an IC designer and architect at AMD. While he doesn’t think companies like Nvidia are going away any time soon, he thinks it’s only a matter of time before Nvidia releases an AI chip product that is not a GPU.

But an entire ecosystem of AI chip startups is already heading in that direction. Engineers and developers are looking at novel chip architectures capable of handling the unique demands of AI and its related technologies, both in data centers and at the edge.

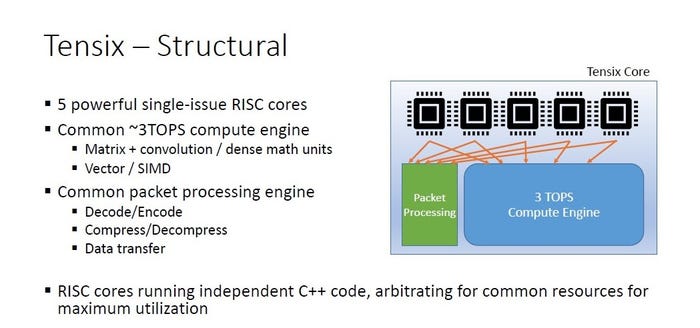

Bajic is the founder of one such company: Toronto-based Tenstorrent, which was founded in 2016 and emerged from stealth earlier this year. Tenstorrent’s goal is simple yet highly ambitious: creating chip hardware for AI capable of delivering the best all-around performance both in the data center and at the edge. The company has created its own proprietary processor core, called the Tensix, which contains a high-utilization packet processor, a programmable SIMD unit, a dense math computational block, and five single-issue RISC cores. By combining Tensix cores into an array connected by a network on a chip (NoC), Tenstorrent says it can create high-powered chips that handle both inference and training and scale from small embedded devices all the way up to large data center deployments.
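To make the idea of an array of cores joined by a network on a chip more concrete, here is a minimal Python sketch. The article does not describe Tenstorrent's NoC topology or routing, so the 2D mesh and dimension-ordered (X-then-Y) routing below are assumptions, chosen because they are a common textbook NoC design. `TensixCore`, `build_grid`, and `xy_route` are hypothetical names, not Tenstorrent APIs.

```python
from dataclasses import dataclass


@dataclass
class TensixCore:
    """Placeholder for one core at grid position (x, y).

    The component list comes from the article; nothing here models behavior.
    """
    x: int
    y: int
    components: tuple = ("packet processor", "programmable SIMD unit",
                         "dense math block", "5 single-issue RISC cores")


def build_grid(width, height):
    """Assumed 2D mesh: one core per (x, y) coordinate."""
    return {(x, y): TensixCore(x, y) for x in range(width) for y in range(height)}


def xy_route(src, dst):
    """Dimension-ordered routing: travel along X first, then along Y.

    Returns every grid position the packet visits, including src and dst.
    """
    x, y = src
    path = [(x, y)]
    while x != dst[0]:
        x += 1 if dst[0] > x else -1
        path.append((x, y))
    while y != dst[1]:
        y += 1 if dst[1] > y else -1
        path.append((x, y))
    return path


grid = build_grid(4, 4)
route = xy_route((0, 0), (2, 3))
print(len(grid), "cores;", len(route) - 1, "hops:", route)
```

Under this assumed mesh, a packet from core (0, 0) to core (2, 3) takes five hops. Growing the array just adds rows and columns, which is one reason mesh-style NoCs are a popular way to scale core counts.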

The company’s first product, Grayskull (yes, that is a He-Man reference), is a processor targeted at inference tasks. According to company specs, Grayskull is capable of operating at up to 368 tera operations per second (TOPS). To put that into perspective, consider Qualcomm’s AI Engine, used in its latest SoCs such as the Snapdragon 865, which offers up to 15 TOPS of performance for various mobile applications. By that peak-throughput measure, a single Grayskull processor can handle the volume of calculations of about two dozen of the chips found in the highest-end smartphones on the market today.
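The "about two dozen" comparison is a simple division of the two peak figures. The sketch below assumes both numbers are peak ratings that can be compared directly; vendors often quote TOPS at different numeric precisions, so treat the ratio as a rough indication rather than a benchmark result.

```python
GRAYSKULL_TOPS = 368          # peak figure quoted by Tenstorrent
SNAPDRAGON_865_AI_TOPS = 15   # peak figure quoted for Qualcomm's AI Engine

# Assumes both peaks are measured on comparable operations.
ratio = GRAYSKULL_TOPS / SNAPDRAGON_865_AI_TOPS
print(f"Grayskull peak is about {ratio:.1f}x the Snapdragon 865 AI Engine peak")
```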


The Grayskull PCIe card (Image source: Tenstorrent)

Nature Versus Neural

If you want to design a chip that mimics cognition, then taking cues from the human brain is the obvious way to go. Whereas AI draws a clear functional distinction between training (learning a task) and inference (implementing or acting on what’s been learned), the human brain does no such thing.

“We figured if we're going after imitating Mother Nature that we should really do a good job of it and not miss some key features,” Bajic said. “If you look at the natural world, there’s the same architecture between small things and big things. They can all learn; it's not inference or training. And they all achieve extreme efficiency by relying on natural sparsity, so only a small percentage of the neurons in the brain are doing anything at any given time and which ones are working depends on what you're doing.”

Bajic said he and his team wanted to build a computer that would have all these features and also not compromise on any of them. “In the world of artificial neural networks today, there are two camps that have popped up,” he said. “One is CPUs and GPUs and all the startup hardware that's coming up. They tend to be doing dense matrix math on hardware that's built for it, like single instruction, multiple data [SIMD] machines, and if they're scaled out they tend to talk over Ethernet. On the flip side you've got the spiking artificial neural network, which is a lot less popular and has had a lot less success in broad applications.”

Spiking neural networks (SNNs) more closely mimic the functions of biological neurons, which send information via spikes in electrical activity. “Here people try to simulate natural neurons almost directly by writing out the differential equations that describe their operation and then implementing them as close as we can in hardware,” Bajic explained. “So to an engineer this comes down to basically having many scalar processor cores connected to the scalar network.”
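A minimal illustration of the differential-equation approach Bajic describes is the leaky integrate-and-fire (LIF) neuron, one of the simplest common spiking models. The article does not say which equations spiking hardware implements, so LIF is an assumption here, and `simulate_lif` and its parameter values are hypothetical. The sketch integrates tau * dV/dt = -(V - V_rest) + R * I(t) with forward-Euler steps and emits a spike whenever V crosses a threshold.

```python
def simulate_lif(current, dt=1e-3, tau=20e-3, v_rest=0.0, v_reset=0.0,
                 v_thresh=1.0, resistance=1.0):
    """Forward-Euler simulation of a leaky integrate-and-fire neuron.

    Integrates tau * dV/dt = -(V - v_rest) + resistance * I(t), one step per
    entry in `current`, and records a spike (resetting V to v_reset) whenever
    V reaches v_thresh. Returns the step indices at which spikes occurred.
    """
    v = v_rest
    spike_steps = []
    for step, i_t in enumerate(current):
        v += (-(v - v_rest) + resistance * i_t) * (dt / tau)
        if v >= v_thresh:
            spike_steps.append(step)
            v = v_reset
    return spike_steps


strong = simulate_lif([2.0] * 100)  # drive above threshold: periodic spiking
weak = simulate_lif([0.5] * 100)    # V settles near 0.5 and never reaches 1.0
print(strong, weak)
```

With a constant input of 2.0 (in the model's arbitrary units) this neuron fires every 14 steps; with 0.5 it never fires. Each neuron is a small scalar computation, which matches Bajic's point that this style of network comes down to many scalar processor cores.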

This is very inefficient from a hardware standpoint. But Bajic said SNNs share a key efficiency with biological neurons: only a certain percentage of neurons are activated at any moment, depending on what the neural net is doing, which is highly desirable for power consumption in particular.
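This conditional efficiency can be sketched as an event-driven update: only neurons that actually spiked contribute work, so cost scales with activity rather than with layer size. This is a hypothetical illustration (`event_driven_update` is not from any real framework), assuming binary spikes so that each contribution is just the addition of one weight.

```python
def event_driven_update(weights, spiking_inputs, n_outputs):
    """Accumulate input to each output neuron from spiking inputs only.

    weights[i][j] is the synapse weight from input i to output j. Spikes are
    assumed binary, so each contribution is a single addition of a weight.
    Returns (potentials, accumulations_performed).
    """
    potentials = [0.0] * n_outputs
    accumulations = 0
    for i in spiking_inputs:              # silent inputs cost nothing
        for j in range(n_outputs):
            potentials[j] += weights[i][j]
            accumulations += 1
    return potentials, accumulations


n_in, n_out = 100, 10
weights = [[1.0] * n_out for _ in range(n_in)]
potentials, work = event_driven_update(weights, spiking_inputs=[0, 5, 9],
                                       n_outputs=n_out)
print(potentials[0], work, "vs dense", n_in * n_out)
```

For this 100-input, 10-output layer with 3 active inputs, the update performs 30 accumulations instead of the 1,000 a dense layer would.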

“Spiking neural nets have this conditional efficiency, but no hardware efficiency. The other end of the spectrum has both. We wanted to build a machine that has both,” Bajic said. “We wanted to pick a place in the spectrum where we could get the best of both worlds.”

Behind the Power of Grayskull

With that in mind, Tenstorrent is shooting for four overall goals in its chip development: hardware efficiency, conditional efficiency, storage efficiency, and a high degree of scalability (to more than 100,000 chips).

“So how did we do this? We implemented a machine that can run fine-grained conditional execution by factoring the computation from huge groups of numbers to computations of small groups, so 16 by 4 or 16 by 16 groups to be precise,” Bajic said.

“We enable control flow on these groups with no performance penalty. So essentially we can run small matrices and we can put ‘if’ statements around them and decide whether to run them at all. And if we’re going to run them, we can decide whether to run them in reduced precision or full precision or anywhere in between.”
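The scheme Bajic describes can be sketched as a tiled matrix multiply with an `if` around each tile. In this minimal pure-Python sketch, each 16x16 tile of the left-hand matrix is inspected at runtime: tiles whose largest magnitude is at or below `skip_below` are skipped entirely, small-magnitude tiles are computed with operands rounded to IEEE half precision, and the rest run in full precision. The gating rule (maximum absolute value) and both thresholds are assumptions for illustration; the article does not say how Tenstorrent's hardware makes the decision, and `conditional_matmul` is a hypothetical name.

```python
import struct

TILE = 16  # the article mentions 16x4 and 16x16 groups; 16x16 is used here


def to_fp16(x):
    """Round a float to IEEE 754 half precision and back (reduced-precision path)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def tile_max_abs(mat, r0, c0, tile):
    """Largest absolute value in the tile whose top-left corner is (r0, c0)."""
    rows = range(r0, min(r0 + tile, len(mat)))
    cols = range(c0, min(c0 + tile, len(mat[0])))
    return max((abs(mat[r][c]) for r in rows for c in cols), default=0.0)


def conditional_matmul(a, b, tile=TILE, skip_below=0.0, low_prec_below=0.1):
    """Tiled C = A @ B with a runtime decision per tile of A.

    Per tile: skip it, run it with half-precision operands (accumulating in
    Python floats), or run it in full precision. Thresholds are illustrative
    assumptions. Values of b beyond the half-precision range (about 65504)
    would raise OverflowError on the reduced-precision path.
    """
    n, inner, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    stats = {"skipped": 0, "half": 0, "full": 0}
    for i0 in range(0, n, tile):
        for k0 in range(0, inner, tile):
            magnitude = tile_max_abs(a, i0, k0, tile)
            if magnitude <= skip_below:          # the "if" around the tile
                stats["skipped"] += 1
                continue
            low = magnitude < low_prec_below
            cast = to_fp16 if low else float
            stats["half" if low else "full"] += 1
            for i in range(i0, min(i0 + tile, n)):
                for k in range(k0, min(k0 + tile, inner)):
                    a_ik = cast(a[i][k])
                    for j in range(m):
                        c[i][j] += a_ik * cast(b[k][j])
    return c, stats


a = [[0.0] * 32 for _ in range(32)]
for i in range(16):
    a[i][i] = 1.0              # large-magnitude tile -> full precision
    a[16 + i][16 + i] = 0.05   # small-magnitude tile -> half precision
b = [[1.0] * 4 for _ in range(32)]
c, stats = conditional_matmul(a, b)
print(stats)  # {'skipped': 2, 'half': 1, 'full': 1}
```

Because the decision is made per tile rather than per element, the branching cost is paid once for every 256 values, which is one plausible reason for choosing small groups instead of individual numbers.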

He said this also means rethinking the software stack. “The problem is that the software stacks that a lot of the other companies in the space have brought out assume that there's a fixed set of dimensions and a fixed set of work to run. So in order to enable adaptation at runtime, normally the hardware needs to be supportive of it, and the full software stack as well.

“So many decisions that are currently made at compile time for us are moved into runtime so that we can accept exactly the right-sized inputs, so that we know exactly how big stuff is after we've chosen to eliminate some things at runtime. So there's a fairly large software challenge to keep up with what the hardware enables.”
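One consequence of deciding at runtime which work to skip is that buffer sizes are no longer known ahead of time. The hypothetical `pack_surviving_tiles` helper below illustrates this under a simple assumption: tiles that survive a runtime gate are packed into one contiguous buffer, and an index records where each one landed. It is a sketch of the general idea, not Tenstorrent's software stack.

```python
def pack_surviving_tiles(tiles, keep):
    """Pack only the tiles kept at runtime into one flat buffer.

    Returns (buffer, index), where index maps an original tile id to its
    (offset, length) in the buffer. The buffer size depends on `keep`, which
    is only known at runtime, so it cannot be fixed at compile time.
    """
    buffer, index = [], {}
    for tile_id, (tile, kept) in enumerate(zip(tiles, keep)):
        if kept:
            index[tile_id] = (len(buffer), len(tile))
            buffer.extend(tile)
    return buffer, index


# A hypothetical runtime gate: drop tiles whose values are all near zero.
tiles = [[1.0, 2.0], [0.0, 0.001], [3.0, 4.0, 5.0]]
keep = [max(abs(v) for v in t) > 0.01 for t in tiles]
buffer, index = pack_surviving_tiles(tiles, keep)
print(len(buffer), index)
```

Downstream stages would read the index to find each surviving tile, so every consumer has to accept sizes that are only settled once the gate has run.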


(Image source: Tenstorrent)

Creating an architecture that can scale to over 100,000 nodes means operating at a scale where you can’t have a shared memory space. “You basically need a bunch of processors with private memory,” Bajic said. “Cache coherency is another thing that's impossible to scale across more than a couple hundred nodes, so that had to go as well.”
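The alternative to a shared, cache-coherent memory space is explicit message passing between processors that each own private memory. This minimal sketch (the `Core` class is hypothetical, not a Tenstorrent API) shows the key property: data moves only when one core sends a copy to another, so there is no shared state for a coherency protocol to track.

```python
from collections import deque


class Core:
    """Hypothetical core that owns its memory outright.

    No other core can read or write `memory`; data arrives only as explicit
    messages, copied at send time, so there is no shared state for a cache
    coherency protocol to keep consistent.
    """

    def __init__(self, core_id):
        self.core_id = core_id
        self.memory = {}          # private to this core
        self.inbox = deque()      # messages waiting to be consumed

    def send(self, dest, key, values):
        # Copy the data into the message; the receiver never sees our memory.
        dest.inbox.append((self.core_id, key, list(values)))

    def drain_inbox(self):
        while self.inbox:
            _sender, key, values = self.inbox.popleft()
            self.memory[key] = values


a, b = Core(0), Core(1)
a.memory["x"] = [1, 2, 3]
a.send(b, "x", a.memory["x"])
a.memory["x"].append(4)    # a later local change is invisible to core 1
b.drain_inbox()
print(a.memory["x"], b.memory["x"])
```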

Bajic explained that each of Tenstorrent’s Tensix cores is built around a grid of five single-issue RISC cores that are networked together. Each Tensix is capable of roughly 3 TOPS of compute.
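Combining Bajic's per-core figure with Grayskull's quoted peak gives a rough sense of how many Tensix cores such a chip implies. This back-of-the-envelope sketch assumes that the peak throughput comes entirely from Tensix cores and scales linearly with their count; neither assumption is stated in the article.

```python
GRAYSKULL_PEAK_TOPS = 368   # quoted peak for Grayskull
TOPS_PER_TENSIX = 3.0       # "roughly 3 TOPS" per Tensix core, per Bajic

# Assumes linear scaling and that all peak compute comes from Tensix cores.
implied_cores = GRAYSKULL_PEAK_TOPS / TOPS_PER_TENSIX
print(f"about {implied_cores:.0f} Tensix cores")
```

Since the per-core figure is approximate, the result (a little over 120) is an order-of-magnitude estimate, not a core count.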

“All of our processors can pretty much be viewed as packet processors,” Bajic said. “The way that works on a single processor level is that you have a core and every one of them has a megabyte of SRAM. Packets arrive into buffers in this SRAM, which triggers software to fetch them and run a hardware unpacketization engine – this removes all the packet framing, interprets what it means, and decompresses the packet, so data lives compressed at all times, except when it’s being computed on.

“It essentially recreates that little tensor that made the packet. We run a bunch of computations on those tensors and eventually we're ready to send them onward. What happens then is they get repacketized, recompressed, deposited into SRAM, and then from there our network functionality picks them up and forwards them to all the other cores that they need to go to under the direction of the compiler.”
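The packet flow Bajic describes can be sketched end to end with Python's standard library: `struct` for the packet header and `zlib` standing in for whatever compression the hardware actually uses. The packet layout (row count, column count, payload length, then compressed float32 values) and the function names are hypothetical; the point is the order of operations: unpacketize and decompress, compute, then recompress and repacketize, so data is uncompressed only while being computed on.

```python
import struct
import zlib

# Hypothetical header: rows (uint16), cols (uint16), payload length (uint32).
HEADER = struct.Struct("<HHI")


def packetize(tensor):
    """Compress a small 2D tensor of floats and prepend a framing header."""
    rows, cols = len(tensor), len(tensor[0])
    flat = [v for row in tensor for v in row]
    payload = zlib.compress(struct.pack(f"<{len(flat)}f", *flat))
    return HEADER.pack(rows, cols, len(payload)) + payload


def unpacketize(packet):
    """Strip the framing, decompress, and rebuild the 2D tensor."""
    rows, cols, length = HEADER.unpack_from(packet, 0)
    raw = zlib.decompress(packet[HEADER.size:HEADER.size + length])
    flat = struct.unpack(f"<{rows * cols}f", raw)
    return [list(flat[r * cols:(r + 1) * cols]) for r in range(rows)]


def process(packet, op):
    """Unpacketize -> compute -> repacketize.

    The tensor exists in uncompressed form only inside this function,
    mirroring the "compressed except while computing" behavior described above.
    """
    tensor = unpacketize(packet)
    result = [[op(v) for v in row] for row in tensor]
    return packetize(result)


# Usage: a mostly-zero 16x16 tile compresses well and survives a round trip.
tile = [[0.0] * 16 for _ in range(16)]
tile[0][0] = 1.5
packet = packetize(tile)
doubled = unpacketize(process(packet, lambda v: v * 2))
print(len(packet), "bytes on the wire vs", 16 * 16 * 4, "uncompressed")
print(doubled[0][0])
```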

While Tenstorrent is rolling out Grayskull, it is actively developing its second Tensix core-based processor, dubbed Wormhole. Tenstorrent is targeting a Fall 2020 release for Wormhole and says it will focus even more on scale. “It’s essentially built around the same architecture [as Grayskull], but it has a lot of Ethernet links on it for scaling out,” Bajic said. “It's not going to be a PCI card chip – it’s the same architecture, but for big systems.”

Searching for the iPhone Moment

There are a lot of lofty goals for AI on the horizon. Researchers and major companies alike are hoping new chip hardware will help pave the way for big projects ranging from Level 5 autonomous cars to some form of general artificial intelligence.

Bajic agrees with these ideas, but he also believes that simple cost savings make chips like the ones his company is developing an attractive commodity.

“The metric that everybody cares about is this concept of total cost of ownership (TCO),” he said. “If you think of companies like Google, Microsoft, and Amazon, these are big organizations that run an inordinate amount of computing machinery and spend a lot of money doing it. Essentially they calculate the cost of everything to do with running a computer system over some set of years, including how much the machine costs to begin with – the upfront cost, how much it costs to pipe through wires and cooling so that you can live with its power consumption, and the cost of the power itself. They add all of that together and get this TCO metric.

“For them, minimizing that metric is important because they spend billions of dollars on this. Machine learning and AI has become a very sizable percentage of all their compute activity and it’s trending towards becoming half of all that activity in the next couple years. So if your hardware can perform, say, 10 times better, then it's a very meaningful financial indicator. If you can convince the market that you've got an order of magnitude in TCO advantage that is going to persist for a few years, it's a super powerful story. It's a completely valid premise to build a business around, but it's kind of an optimization thing as opposed to something super exciting.”
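Bajic's definition of TCO translates directly into a sum. The minimal sketch below adds the three terms he lists (upfront hardware cost, power-delivery and cooling infrastructure, and energy over the service life), then spreads the total over the work done to get cost per unit of work. The function names and every dollar figure, power draw, and throughput in the example are made up for illustration.

```python
HOURS_PER_YEAR = 24 * 365


def total_cost_of_ownership(upfront, infrastructure, power_kw,
                            price_per_kwh, years):
    """Upfront hardware + power/cooling infrastructure + energy over `years`."""
    energy_cost = power_kw * HOURS_PER_YEAR * years * price_per_kwh
    return upfront + infrastructure + energy_cost


def cost_per_unit_work(tco, work_per_hour, years):
    """Spread TCO over all the work the machine completes in its lifetime."""
    return tco / (work_per_hour * HOURS_PER_YEAR * years)


# Hypothetical example: two systems with identical costs, one 10x faster.
baseline_tco = total_cost_of_ownership(upfront=10_000, infrastructure=2_000,
                                       power_kw=1.0, price_per_kwh=0.10, years=3)
baseline = cost_per_unit_work(baseline_tco, work_per_hour=1_000_000, years=3)
faster = cost_per_unit_work(baseline_tco, work_per_hour=10_000_000, years=3)
print(f"TCO ${baseline_tco:,.0f}; cost per unit of work falls {baseline / faster:.0f}x")
```

Holding TCO fixed, a machine that does ten times the work per hour cuts the cost of each unit of work tenfold, which is the financial argument Bajic makes for an order-of-magnitude hardware advantage.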

For Bajic, those more exciting areas come in the form of large-scale AI projects, like using machine learning to track diseases and discover vaccines and medications, as well as in emerging fields such as emotional AI and affective computing. “Imagine if you had a device on your wrist that could interpret all of your mannerisms and gestures. As you’re sitting there watching a movie it could tell if you’re bored or disgusted and change the channel. Or it could automatically order food if you appear to be hungry – something pretty intelligent that can also be situationally aware,” he said.

“The key engine that enables this level of awareness is an AI, but at this point these solutions are too power hungry and too big to put on your wrist or to put anywhere that can follow you. By providing an architecture that will give an order of magnitude boost, you can start unlocking whole new technologies and creating things that will have an impact on the level of the first iPhone release.”


Chris Wiltz is a Senior Editor at Design News covering emerging technologies including AI, VR/AR, blockchain, and robotics.
