While these terms are often used interchangeably, they have significantly different meanings, especially in the system-on-chip (SoC) analog design space.

April 14, 2020

People freely interchange the terms “test” and “verification.” That's understandable, since terms like testcase, testbench and device under test (DUT) are used in conjunction with different types of verification. So, what is the difference between test and verification?

Perhaps the simplest way to think of these two terms is as follows:

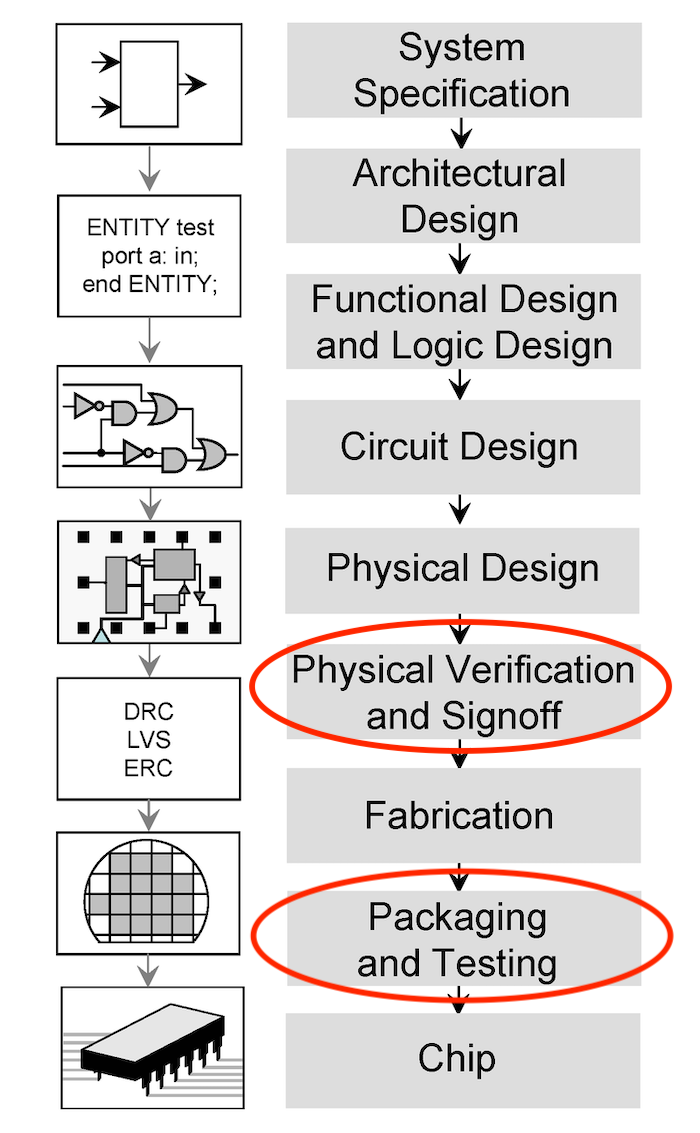

When you test, you are making sure that the product or system works, but only after you have created the product components, chips, boards, packages and subsystems.

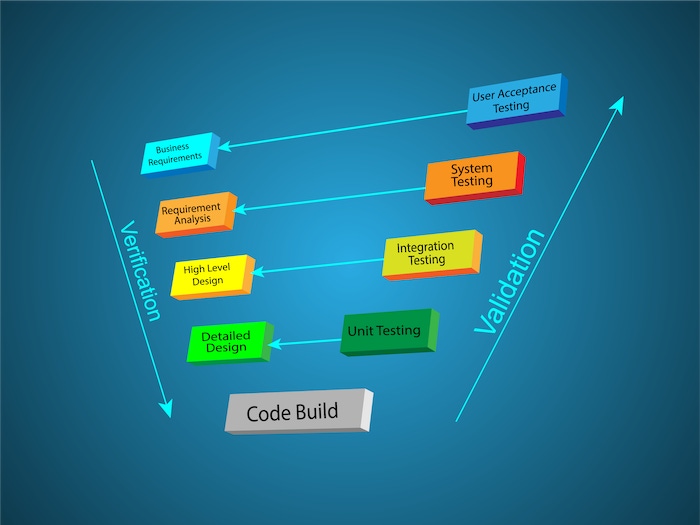

When you verify, you are making sure that the product or system works before it has been created or manufactured. In systems engineering, you verify to make sure that what you designed actually meets the requirements.

In this way, verification is a form of testing, but it tends to be trickier because you are testing something before the product actually exists, be it in software or hardware.

For example, chip designers at Intel, AMD, nVidia and others use various techniques to verify their chip designs before sending them to a foundry to be manufactured or fabricated. Verification enables them to make sure their chips are designed to specifications and that everything has a high probability of working together as expected. If they make a mistake, it might cost the company millions of U.S. dollars to fix the problem with a respin, i.e., by fabricating a new revision of the chip.

Verification engineers create models that simulate the behavior of the chip. Testbenches are built that can automatically test designs against these verification models. If the simulation results of the register transfer level (RTL) model of the original design match those of the verification models, then that portion of the chip's circuitry should work once fabricated.

RTL is a model of the actual circuit written in a hardware description language (HDL) like VHDL or Verilog. In essence, the HDL code describes how data is transformed as it is passed from register to register in the transistor-based circuit.
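The register-to-register idea, together with the testbench flow described above, can be sketched in plain C++. This is an illustrative model under stated assumptions, not RTL, SystemC or UVM code: the two-stage pipeline, its 8-bit datapath and the names `PipelineModel`, `reference_model` and `run_testbench` are all hypothetical. Each call to `clock()` plays the role of one clock edge, the untimed `reference_model()` stands in for a verification model, and the testbench drives random inputs into both and counts mismatches.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <random>

// Hypothetical cycle-based model written in an RTL style: all state lives in
// registers, and every register is updated together on each clock edge.
struct PipelineModel {
    std::uint8_t stage1 = 0;  // register holding (a + b), wraps at 8 bits
    std::uint8_t out = 0;     // register holding stage1 * 2, wraps at 8 bits

    // One rising clock edge. Next-state values are computed from the current
    // register contents first and then committed together, mimicking
    // nonblocking (<=) assignment in Verilog.
    void clock(std::uint8_t a, std::uint8_t b) {
        std::uint8_t next_stage1 = static_cast<std::uint8_t>(a + b);
        std::uint8_t next_out = static_cast<std::uint8_t>(stage1 << 1);
        stage1 = next_stage1;
        out = next_out;
    }
};

// Untimed reference ("golden") model: what the design is supposed to compute.
std::uint8_t reference_model(std::uint8_t a, std::uint8_t b) {
    return static_cast<std::uint8_t>((a + b) * 2);
}

// Drives random stimulus into both models for `cycles` clocks and returns the
// number of mismatches between the pipeline output and the reference model.
int run_testbench(int cycles, unsigned seed) {
    std::mt19937 rng(seed);
    std::uniform_int_distribution<int> byte_dist(0, 255);
    PipelineModel dut;
    std::deque<std::uint8_t> expected;  // reference results awaiting pipeline latency
    int mismatches = 0;
    for (int i = 0; i < cycles; ++i) {
        std::uint8_t a = static_cast<std::uint8_t>(byte_dist(rng));
        std::uint8_t b = static_cast<std::uint8_t>(byte_dist(rng));
        dut.clock(a, b);
        expected.push_back(reference_model(a, b));
        // Inputs applied on one clock reach `out` on the next clock, so the
        // oldest queued result is checked once two results are waiting.
        if (expected.size() == 2) {
            if (dut.out != expected.front()) ++mismatches;
            expected.pop_front();
        }
    }
    // Sanity check: at most one result can still be in flight.
    assert(expected.size() <= 1);
    return mismatches;
}
```

With a correct pipeline, `run_testbench(1000, 42)` should report zero mismatches. Changing `stage1 << 1` to `stage1 << 2` would produce mismatches for most inputs, which is exactly the kind of discrepancy a testbench is meant to expose.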

There are a number of different methodologies used in the chip verification process. One popular approach is the Universal Verification Methodology (UVM).

Given these close ties to design, it is no wonder that verification is more closely aligned with the design development process than with the actual testing phase. A good verification engineer must understand all the intricacies of a system-on-chip (SoC) design, as well as the testbenches and test cases needed to cover all of the chip's functionality, and how best to verify every line of RTL code across all possible test combinations in a circuit with more than a billion transistors.

To get a better idea of the current state of chip verification, Design News talked with Philipp A. Hartmann, the recent Chair of the SystemC Language Working Group (LWG) and the recipient of the 2020 Accellera Technical Excellence Award. Lu Dai, the Chairman of the Accellera Systems Initiative, was also present for this interview.

Today, one of the growing areas of technical concern in chip design is analog verification. This concern arises from the increase in Internet of Things (IoT) devices that are packed full of sensors and RF/wireless analog mixed-signal (AMS) chips. The interview begins with a question about AMS chips, which contain digital and analog circuits on the same chip.

Design News: There has been growing interest in the analog mixed-signal (AMS) verification side of the Universal Verification Methodology (UVM) standard. Why is that so?

Philipp Hartmann: I'm a system-level person, so I would always say that for the AMS domain, there's a strong need to move up the abstraction level – just as in the digital domain. Secondly, there is concern about how to reuse and verify these AMS models across the abstraction levels in a uniform manner. That is why the AMS folks are sometimes envious of the UVM standard on the digital side and often lament, “I want to have something like that for my AMS.”

Lu Dai: It’s interesting that UVM AMS has received quite a few technical contributions and donations from some of the key players in the space. Many of the contributing companies have been working internally on UVM AMS for quite some time.

Design News: Are AMS verification methodologies being widely adopted?

Philipp Hartmann: I do see less adoption in the U.S. than in Europe. Some solutions in the high-level synthesis space are based on SystemC, while others are more C++-centric. But the SystemC approach is closer to verification and system-level simulation, SoC prototyping, architecture exploration, SystemC AMS, and so on.

Design News: And why is that?

Philipp Hartmann: I'm actually interested in learning why that is the case, too.

Lu Dai: I think it is more for historical reasons, almost like a Verilog vs. VHDL and SystemVerilog vs. SystemC issue. People tend to stay with familiar tools. Many companies do modeling in SystemC and then generate RTL in SystemC, but verification was started at the Verilog level. This means that the generated SystemC must be converted to Verilog to do the verification. This process is very challenging, partly because companies are not willing to use SystemC-level verification, which does exist.

If you verify at the lower level in Verilog, it is very difficult because the flow is not designed that way. If you use a pure SystemC native flow, then everything works well.

Philipp Hartmann: I think about this problem in a couple of different areas. For example, when you do a digital design in SystemC based on high-level synthesis, et cetera, even solutions like UVM SystemC still have gaps. These gaps are due to the lack of a standard for constrained random stimulus, which is not part of SystemC or UVM SystemC yet. The working group is working on closing these gaps in constrained random and also in functional coverage, to have a more complete, standards-based verification ecosystem in the SystemC world. However, we are not there yet. Most of the time, I've been working in pure SystemC environments for virtual prototyping, early software development and similar use cases. These are areas where SystemC shines.
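The two gaps Hartmann mentions, constrained random stimulus and functional coverage, can be illustrated with a small sketch. Because these features are not yet standardized in SystemC or UVM SystemC, the example below deliberately uses only the C++ standard library (`std::mt19937`) and does not reflect any SystemC or UVM SystemC API. The `Transaction` type, its address window, alignment and burst-length constraints, and the 16 cross-coverage bins are hypothetical. A real constraint solver accepts declarative constraints; here the legal value space is hand-coded, which only works for simple constraints like these.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <random>

// Hypothetical bus transaction used only for illustration.
struct Transaction {
    std::uint32_t addr;
    unsigned len;  // burst length in beats
};

// Constraints: addr in [0x1000, 0x1FFC] and 4-byte aligned; len in {1, 2, 4, 8}.
bool is_legal(const Transaction& t) {
    bool len_ok = t.len == 1 || t.len == 2 || t.len == 4 || t.len == 8;
    return t.addr >= 0x1000 && t.addr <= 0x1FFC && t.addr % 4 == 0 && len_ok;
}

// Constrained-random generator: draws values directly from the legal space
// instead of generating freely and rejecting illegal results.
Transaction randomize(std::mt19937& rng) {
    std::uniform_int_distribution<unsigned> word(0, (0x1FFC - 0x1000) / 4);
    std::uniform_int_distribution<int> len_idx(0, 3);
    Transaction t{0x1000u + 4u * word(rng), 1u << len_idx(rng)};
    assert(is_legal(t));  // self-check that the generator honors the constraints
    return t;
}

// Functional coverage: 4 length bins x 4 address-region bins (each region is
// one 1 KiB quarter of the legal window), counting hits per cross bin.
struct Coverage {
    std::array<std::array<int, 4>, 4> hits{};  // [length bin][region bin]

    // Assumes a legal transaction, so the region index is always 0..3.
    void sample(const Transaction& t) {
        int len_bin = t.len == 1 ? 0 : t.len == 2 ? 1 : t.len == 4 ? 2 : 3;
        int region = static_cast<int>((t.addr - 0x1000) / 0x400);
        ++hits[len_bin][region];
    }

    // Percentage of cross bins hit at least once.
    double percent() const {
        int covered = 0;
        for (const auto& row : hits)
            for (int h : row) covered += (h > 0);
        return 100.0 * covered / 16;
    }
};

// Generates transactions until every cross bin is hit or the budget runs out;
// returns the number of transactions generated.
int run_until_covered(unsigned seed, int budget) {
    std::mt19937 rng(seed);
    Coverage cov;
    int n = 0;
    while (n < budget && cov.percent() < 100.0) {
        cov.sample(randomize(rng));
        ++n;
    }
    return n;
}
```

Calling `run_until_covered(1, 10000)` keeps generating legal transactions until all 16 length/region combinations have been hit at least once. That loop is the basic idea behind coverage-driven verification: coverage results tell you when random stimulus has exercised the behavior you care about.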

Design News: Let’s switch gears to talk briefly about the technical excellence award. What was the most challenging part of working in a SystemC standards group over the years? [Editor’s Note: SystemC is a C++ class library, standardized as IEEE 1666, that can be used to create hardware, software and data flow models of a chip or other systems. It can be used with UVM for verification.]

Philipp Hartmann: First of all, I'm very honored to receive the award. I'm just a regular engineer, right? I have been using SystemC for system-level topics, in all the areas where SystemC is strong, for almost 20 years. When you use these standards and tools in a very deep fashion, you realize that there are sometimes shortcomings or things that make your life harder. As an engineer, I want to fix problems at the source. That's what got me started with engaging in the standardization process.

The challenging part of the standardization process is always the lack of resources since we rely on volunteer contributions. I want to thank my previous employers because they made it possible for me to donate some of my time to work on the standards. This is important because when we solve the limitations at the core, then the whole ecosystem and everyone can benefit from it. This is always better than just building your own in-house solution that might help you in the short run, but then you still have to build a workaround for some limitations.

Lu Dai: I just want to add that Philipp has been a very good contributor to the Accellera standards organization. He's being very humble when he says, “I’m just a regular engineer.” Personally, I definitely wish everybody were as regular an engineer as Philipp. That’s part of the reason why he was chosen for the Technical Excellence Award, which was presented by Martin Barnasconi, the Technical Committee Chair.


Image Source: Accellera / Philipp Hartmann (right) and Martin Barnasconi (left), Technical Committee Chair for Accellera

John Blyler is a Design News senior editor, covering the electronics and advanced manufacturing spaces. With a BS in Engineering Physics and an MS in Electrical Engineering, he has years of hardware-software-network systems experience as an editor and engineer within the advanced manufacturing, IoT and semiconductor industries. John has co-authored books related to system engineering and electronics for IEEE, Wiley, and Elsevier.
