The Nvidia DGX-2 Is the World's Largest GPU, and It's Made for AI

Nvidia has unveiled “the world's largest GPU” – the DGX-2, a platform targeted at deep learning computing that boasts ten times the processing power of its predecessor.

March 29, 2018

No company has been more invested in applying GPUs to artificial intelligence than Nvidia. Now the chipmaker, traditionally known as a graphics processor company, has made a definitive statement about its pivot toward AI and enterprise hardware with the announcement of the DGX-2, the “largest GPU ever created.”

Prior to the DGX-2 announcement, Nvidia had been positioning the previous model, the DGX-1 (released only six months ago), for deep learning applications as well. When it was first released, Nvidia touted it as the platform that would help leapfrog autonomous vehicles to full Level 5 autonomy (no steering wheel and no need for a human driver). While the DGX-1 was able to deliver up to 1,000 teraflops of performance, the DGX-2 is a 350-lb powerhouse boasting a speed of 2 petaflops (for those doing the math, that's 2 quadrillion floating point operations per second) and 512GB of second-generation high-bandwidth memory (HBM2).

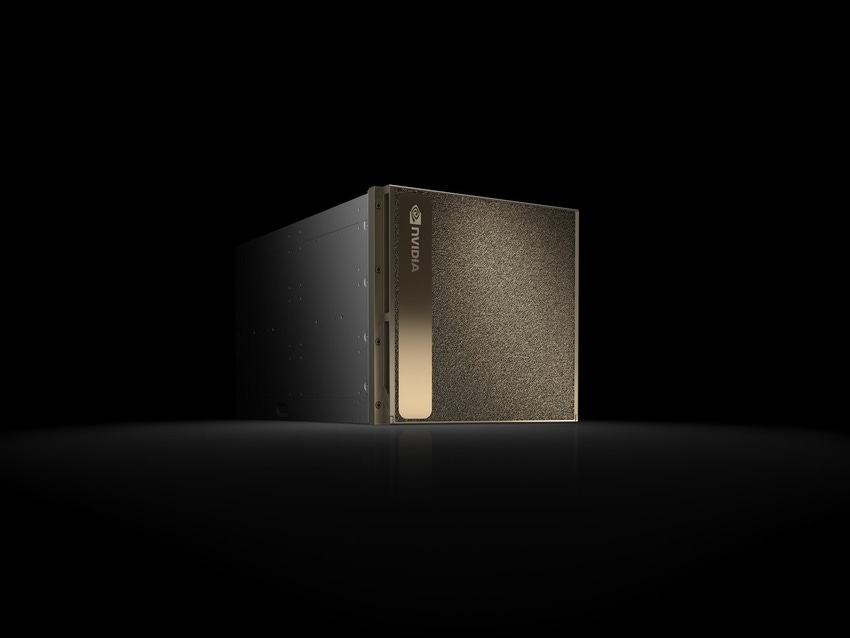

The DGX-2 weighs 350 lbs and boasts up to 2 petaflops of processing performance for deep learning. (image source: Nvidia)

But this new platform won't be used to deliver the next generation of photorealistic graphics for video games. The DGX-2 has its sights set on deep learning. “The extraordinary advances of deep learning only hint at what is still to come,” Jensen Huang, founder and CEO of Nvidia, said during his keynote at the GTC Conference, where the DGX-2 was unveiled. “We are dramatically enhancing our platform’s performance at a pace far exceeding Moore’s law, enabling breakthroughs that will help revolutionize healthcare, transportation, science, exploration and countless other areas.”

Nvidia wants to position the DGX-2 to engineers as a ready-to-go solution for scaling up AI, allowing them to easily build large, enterprise-grade deep learning computing infrastructures. According to specs from the company, the DGX-2 has the processing power of 300 dual-CPU servers but takes up only 1/60th of the space, consumes 1/18th of the power (10 kW), and comes at 1/8th of the cost (the DGX-2 will retail for $399,000).
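For readers who want to see what those ratios imply, here is a minimal sketch that scales the quoted DGX-2 figures back up to the 300-server comparison. The per-server numbers it prints are derived only from the ratios above and are not figures published by Nvidia; the function and variable names are illustrative.

```python
# Figures quoted in the article for a single DGX-2.
DGX2_POWER_KW = 10
DGX2_PRICE_USD = 399_000
CPU_SERVERS = 300  # dual-CPU servers the DGX-2 is compared against


def implied_cpu_cluster(power_ratio=18, cost_ratio=8):
    """Scale the DGX-2 figures by the quoted ratios (illustrative only)."""
    power_kw = DGX2_POWER_KW * power_ratio
    cost_usd = DGX2_PRICE_USD * cost_ratio
    return {
        "power_kw": power_kw,
        "cost_usd": cost_usd,
        # Derived per-server values; assumptions, not published specs.
        "watts_per_server": power_kw * 1000 / CPU_SERVERS,
        "usd_per_server": cost_usd / CPU_SERVERS,
    }


cluster = implied_cpu_cluster()
print(cluster)
```

Under these assumptions, the comparison cluster would draw about 180 kW and cost roughly $3.2 million.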

When Huang calls the DGX-2 the “world's largest GPU,” however, he's not speaking in purely technical terms. The DGX-2 is really a system that combines an array of GPU boards with high-speed interconnect switches and two Intel Xeon Platinum CPUs. Under the hood, the DGX-2 combines 16 Nvidia Tesla V100 GPUs. Each Tesla V100 delivers 100 teraflops of deep learning performance, according to Nvidia. Adding to this, the GPUs are connected via a new interconnect fabric Nvidia calls NVSwitch. Huang said NVSwitch allows the GPUs to communicate with each other simultaneously at a speed of 2.4 terabytes per second. “[About] 1440 movies could be transferred across this switch in one second,” Huang said.
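As a rough sanity check on these numbers, the sketch below works through the arithmetic. It uses only figures quoted in this article, treats a terabyte as 1,000 gigabytes, and the variable names are illustrative. Note that 16 GPUs at the quoted 100 teraflops sum to 1.6 petaflops, so the 2-petaflop system figure implies closer to 125 teraflops per GPU.

```python
GPU_COUNT = 16
SYSTEM_PETAFLOPS = 2.0       # quoted system-level figure
QUOTED_TFLOPS_PER_GPU = 100  # quoted per-GPU figure
NVSWITCH_TB_PER_S = 2.4      # quoted NVSwitch bandwidth
MOVIES_PER_SECOND = 1440     # Huang's movie comparison

# Per-GPU throughput implied by the system-level figure.
implied_tflops_per_gpu = SYSTEM_PETAFLOPS * 1000 / GPU_COUNT

# System throughput implied by the quoted per-GPU figure.
sum_of_quoted_tflops = QUOTED_TFLOPS_PER_GPU * GPU_COUNT

# Movie size implied by the comparison (assumes 1 TB = 1000 GB).
implied_gb_per_movie = NVSWITCH_TB_PER_S * 1000 / MOVIES_PER_SECOND

print(f"Implied per-GPU throughput: {implied_tflops_per_gpu:.0f} teraflops")
print(f"Sum of quoted per-GPU figures: {sum_of_quoted_tflops} teraflops")
print(f"Implied movie size: {implied_gb_per_movie:.2f} GB")
```

The movie comparison works out to a little under 1.7 GB per film, which is plausible for a compressed feature-length video.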

A big part of the DGX-2's performance is owed to NVSwitch, a new communication architecture that allows GPUs to communicate at speeds up to 2.4 terabytes per second. (image source: Nvidia)

Huang also discussed benchmark tests Nvidia had conducted for the DGX-2 against its predecessor. When the DGX-1 was released in December 2017, it broke records by training Fairseq, Facebook's neural network for language translation, in 15 days. The DGX-2 can fully train Fairseq in one-and-a-half days, according to Nvidia. Similarly, it takes two GeForce GTX 580 GPUs (released in 2012) working together six days to train AlexNet, a well-known image recognition neural network. The DGX-2, by comparison, can train AlexNet in about 16 minutes. “That's 500 times faster processing in only five years,” Huang said.
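The speedups behind these claims can be checked with a few lines of arithmetic. This is a minimal sketch using only the training times quoted above; the helper name `speedup` is illustrative.

```python
MINUTES_PER_DAY = 24 * 60


def speedup(old_minutes, new_minutes):
    """Return how many times faster the new training time is."""
    return old_minutes / new_minutes


# Fairseq: 15 days on the DGX-1 versus 1.5 days on the DGX-2.
fairseq_speedup = speedup(15 * MINUTES_PER_DAY, 1.5 * MINUTES_PER_DAY)

# AlexNet: six days on two GTX 580s versus about 16 minutes on the DGX-2.
alexnet_speedup = speedup(6 * MINUTES_PER_DAY, 16)

print(f"Fairseq speedup: {fairseq_speedup:.0f}x")
print(f"AlexNet speedup: {alexnet_speedup:.0f}x")
```

The Fairseq result matches the tenfold improvement Nvidia cites over the DGX-1, and the AlexNet figure comes out to about 540x, in line with Huang's rounded "500 times."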

The DGX-2 is currently available for order, but given its hefty price tag, few buyers outside of big hitters in the AI space like Google are likely to invest in it in the short term. In conjunction with the DGX-2's release, Nvidia has also updated its software stack for deep learning and high-performance computing, at no charge to its developer community. The updates include new versions of NVIDIA CUDA, TensorRT, NCCL, and cuDNN, and a new Isaac software development kit for robotics.

Chris Wiltz is a Senior Editor at Design News covering emerging technologies including AI, VR/AR, and robotics.

