NXP, Momenta Partner to Monitor How Alert You Are While Driving

The new system will allow vehicles to monitor driver attentiveness -- an ability that will be critical as the auto industry transitions from Level 2 to Level 3 autonomy.

June 28, 2019

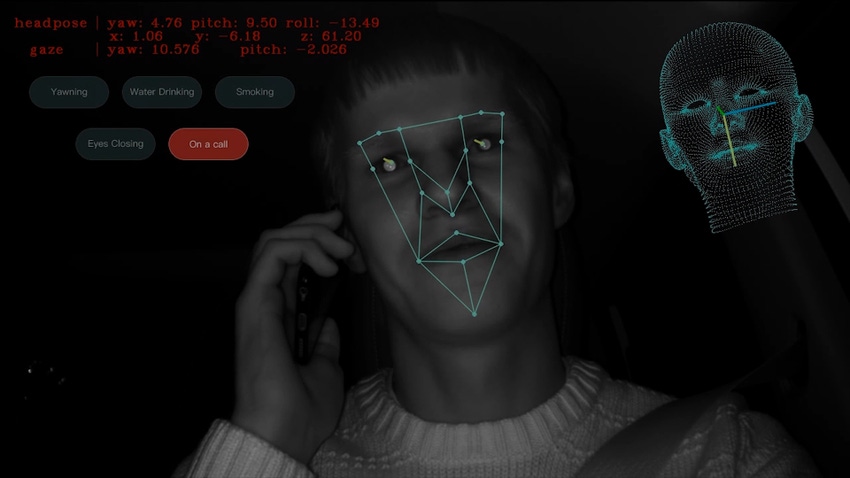

Momenta’s neural network technology will examine camera images of the driver’s face, placing boxes around the head, eyes, nose, and mouth. From those images, it will determine if a driver is asleep, drowsy or inattentive. (Image source: NXP/Momenta)

Chinese driver-monitoring startup Momenta has announced it is teaming with NXP Semiconductors to help smooth the industry’s autonomy transition from Level 2 to Level 3 and beyond.

Momenta, considered a rising star in the world of autonomous driving, will collaborate with NXP to put its driver monitoring software on the S32V2 AI-enabled automotive vision processor. “We’ve made the process easier for the Tier One and the OEM,” noted Colin Cureton, senior director of ADAS product management at NXP. “By doing this proactive partnership, we’ve done a lot of the pre-emptive engineering work for them.”

The new Driver Monitoring Solution will combine Momenta’s neural network technology with NXP’s vision processor, which up to now has been used for such applications as front camera imaging and surround-view on vehicles. Together, the software and hardware will enable vehicles to examine the facial features of drivers and “know” if they’re attentive.

The availability of such systems is considered critical right now because automakers need a way to track the state of the driver. As vehicles move from Level 2 (partial autonomy) to Level 3 (conditional autonomy), the driver’s state grows more important: Level 3 allows drivers to take their eyes off the road, but still requires that they be available to take control if an issue comes up.

“As the driver, you may be able to look away, but you can’t be asleep,” Cureton told us. Driver monitoring – in which cameras and software watch for signs of drowsiness, distraction, or inattention – is one solution to that potential problem. “It’s able to tell the car how engaged the driver is, and to make sure the driver is in a position to deal with the level of autonomy that the car is providing,” Cureton said.

The neural network – which makes learned, pattern-based decisions in a manner loosely similar to that of the human brain – will play a key role in enabling such understanding. The software examines a camera image of the driver’s face, isolating the head, eyes, nose, and mouth for telltale signs of inattention. “The neural net is doing the recognition of the facial features,” Cureton said. “Are the eyes closed? Is the person sleeping? Smoking? Eating?”

If the vehicle detects that a person has fallen asleep, it can vibrate the driver’s seat, vibrate the steering wheel, or make noise, Cureton added.
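To make the idea concrete, here is a minimal sketch of how per-frame results from a face-analysis network might be turned into a driver state and a set of alerts. It is not NXP’s or Momenta’s implementation. The names `FrameObservation`, `DriverMonitor`, and `alerts_for`, the sliding-window approach, the thresholds, and the chime for a drowsy driver are all assumptions made for illustration; only the three alerts for a sleeping driver come from Cureton’s description.

```python
from collections import deque
from dataclasses import dataclass
from enum import Enum


class DriverState(Enum):
    ATTENTIVE = "attentive"
    DROWSY = "drowsy"
    ASLEEP = "asleep"


@dataclass
class FrameObservation:
    """Hypothetical per-frame output of a face-analysis network."""
    face_found: bool
    eyes_closed: bool


class DriverMonitor:
    """Classifies the driver from the share of recent frames with eyes closed.

    The window size and both thresholds are illustrative assumptions.
    """

    def __init__(self, window=30, drowsy_ratio=0.4, asleep_ratio=0.8):
        self.frames = deque(maxlen=window)
        self.drowsy_ratio = drowsy_ratio
        self.asleep_ratio = asleep_ratio

    def update(self, obs):
        # A frame counts as "eyes closed" only if a face was actually found.
        self.frames.append(obs.face_found and obs.eyes_closed)
        # Wait for a full window so a single blink cannot trigger an alert.
        if len(self.frames) < self.frames.maxlen:
            return DriverState.ATTENTIVE
        closed_ratio = sum(self.frames) / len(self.frames)
        if closed_ratio >= self.asleep_ratio:
            return DriverState.ASLEEP
        if closed_ratio >= self.drowsy_ratio:
            return DriverState.DROWSY
        return DriverState.ATTENTIVE


def alerts_for(state):
    """Maps a driver state to alert names (the names are hypothetical)."""
    if state is DriverState.ASLEEP:
        # The three responses mentioned in the article.
        return ["vibrate_seat", "vibrate_steering_wheel", "make_noise"]
    if state is DriverState.DROWSY:
        # Assumption: a milder warning for drowsiness.
        return ["sound_chime"]
    return []
```

Averaging over a window of frames, rather than reacting to any single frame, is one simple way to keep ordinary blinks from being mistaken for sleep.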

The new solution is also aided by the S32V2 vision processor’s features. The processor is optimized to run artificial intelligence algorithms. It also incorporates an accelerator capable of running neural networks.

NXP expects the new solution to serve as a bridge while the auto industry transitions from Level 2 to Level 3 autonomy. Today, many OEMs and Tier One suppliers are hesitant to move to Level 3 due to potential liability issues because they have no way of ensuring that drivers are engaged and ready to take the wheel under Level 3 conditions. For such reasons, some now use the terms “Level 2.5” or “Level 2-plus” to describe features that are beyond Level 2, but not ready for Level 3.

NXP also expects the new technology to serve in Level 4 (high autonomy), where drivers may still be called upon under certain conditions. “There may be other ways of solving the problem,” Cureton said. “But today this is the most effective way for the car to understand what the driver is doing.”

Senior technical editor Chuck Murray has been writing about technology for 35 years. He joined Design News in 1987, and has covered electronics, automation, fluid power, and auto.
