Nvidia Unveils the EGX, its Supercomputer for 5G Edge Processing

The Nvidia EGX computing platform is designed for 5G connectivity and optimized to give a big performance boost to AI, robotics, and other sensor processing applications.

October 22, 2019


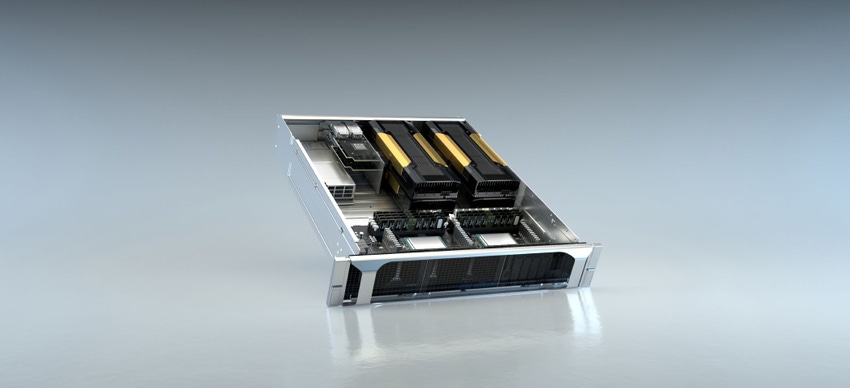

The Nvidia EGX platform is designed to handle 5G data at the edge. (Image source: Nvidia)

Nvidia kicked off Mobile World Congress (MWC) 2019 in Los Angeles with a big investment in the future of 5G via what the company is calling “the world's first edge supercomputer.” Nvidia wants to position its EGX platform as the go-to choice for processing the enormous streams of sensor data that 5G will bring in from smart factories, IoT devices, and even city streets. The goal, Nvidia founder and CEO Jensen Huang explained, is to place powerful compute at the edge of the 5G network for high-performance, low-latency processing of tasks ranging from enhanced retail experiences to autonomous, connected cars.

Delivering a keynote at MWC 2019, Huang emphasized that for 5G to bring about the next great technological leap, data centers will need the computing power to process the new streams of data that will arrive as 5G connects more and more devices. “We’ve entered a new era, where billions of always-on IoT sensors will be connected by 5G and processed by AI,” Huang said. “Its foundation requires a new class of highly secure, networked computers operated with ease from far away.”

That's where the Nvidia EGX comes in. The configurable platform runs on an Nvidia CUDA Tensor Core GPU and can be optimized for processing a variety of sensor information (including LiDAR) as well as for artificial intelligence and robotics applications. Nvidia says that one core can run at processing speeds up to 240 teraflops. “One [EGX] rack will replace hundreds of CPU servers,” Huang told the MWC audience. The EGX is scalable and can go from a single, maker-friendly Jetson Nano to a high-horsepower rack of T4 servers, depending on engineers' needs. The EGX also runs a proprietary software stack that Nvidia says is enterprise-grade and optimized for running on edge-based servers.

New SDKs, More Powerful AI

Huang announced that Nvidia is already making strategic partnerships and rolling out developer applications around the EGX. Samsung, for example, will be deploying the EGX in its factories to assist with semiconductor design and manufacturing processes. “Samsung has been an early adopter of both GPU computing and AI from the beginning,” Charlie Bae, executive vice president of foundry sales and marketing at Samsung Electronics, said in a press statement. “NVIDIA’s EGX platform helps us to extend these manufacturing and design applications smoothly onto our factory floors.”

On the developer end, Nvidia is looking to the EGX to accelerate and enhance some of its AI efforts already under development. First announced two years ago, Nvidia's Isaac platform for training collaborative robots in virtual reality could see a much-needed processing boost from the EGX platform. The EGX should allow for faster and more robust simulation training of robots as well as faster and more reliable deployments into physical systems. Nvidia said that an official Isaac SDK will launch for developers in January 2020.


Nvidia also unveiled new developer tools in the form of Metropolis IoT, an open-source reference application for utilizing sensors in smart manufacturing and smart city applications. Metropolis, which is now available, runs on the EGX and will allow developers to experiment with developing for predictive maintenance, logistics, manufacturing, and other applications, all while leveraging the EGX's computing power.

Arguably the most intriguing demo of the presentation was the EGX's ability to facilitate multimodal, or multi-sensor, AI applications. Nvidia introduced a software platform dubbed Jarvis (yes, like Tony Stark's computer assistant) that can handle such tasks.

In one demo (shown below), a couple driving a car asked a system about restaurants in the area (Yelp rating, directions, etc.) while also seamlessly having a conversation with each other. In another, a woman in a retail space was able to have an AI answer questions about the various products on the shelves by pointing at them or describing them (e.g., “How much is that white water bottle?”).

What was most notable about the demo was the lack of a hot word (no need to say, “Hey Alexa” or another term) and how the system was able to continue the interaction even with breaks in the conversation. There was a recognition of context. The AI tracked users' faces to recognize when they were looking into a camera to ask it a question. That meant that once someone asked about a sushi restaurant, they could come back moments later and simply ask, “How far away is it?” without having to resupply context, such as the restaurant's name, to the system.
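To make the idea of context carry-over concrete, here is a minimal Python sketch of the behavior described in the demo. It is purely illustrative and is not Nvidia's Jarvis API: the names `DialogContext` and `resolve_query`, the `looking_at_camera` flag, and the pronoun substitution are all hypothetical stand-ins, and a real system would rely on trained speech, vision, and language models rather than a regular expression.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class DialogContext:
    """Hypothetical store for the entity most recently asked about."""
    last_entity: Optional[str] = None


def resolve_query(query: str, looking_at_camera: bool, ctx: DialogContext,
                  mentioned_entity: Optional[str] = None) -> Optional[str]:
    """Return the query to answer, or None if it was not addressed to the system.

    Assumption: an upstream vision model supplies `looking_at_camera`, and an
    upstream language model supplies `mentioned_entity` when the query names
    something new. Both are stand-ins for illustration only.
    """
    # Gaze takes the place of a hot word: speech not directed at the
    # camera (for example, the two passengers talking) is ignored.
    if not looking_at_camera:
        return None

    # A query that names a new entity updates the remembered context.
    if mentioned_entity is not None:
        ctx.last_entity = mentioned_entity
        return query

    # A follow-up with a bare "it" is resolved against the remembered entity.
    if ctx.last_entity is not None:
        entity = ctx.last_entity
        return re.sub(r"\bit\b", lambda _match: entity, query, flags=re.IGNORECASE)

    # Nothing remembered yet, so pass the query through unchanged.
    return query


ctx = DialogContext()
resolve_query("Find a sushi restaurant nearby", True, ctx, "the sushi restaurant")
resolve_query("Did you lock the door?", False, ctx)  # ignored: not looking at the camera
print(resolve_query("How far away is it?", True, ctx))
```

The point of the sketch is only that the follow-up question succeeds because state persists between queries, which is also why the system needs to keep watching and listening between them.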

Enhanced by the EGX, Nvidia's Jarvis SDK can enable multimodal AI applications that can recognize context and handle queries without the need for special words or phrases to activate. (Source: Nvidia)

Huang said such a system also has clear implications for the manufacturing space, particularly with collaborative robots. “You could tell a robot to 'pass me that,' 'hold that,' or 'hold this while I do that,' all things that require context,” he said.

Nvidia is releasing its Jarvis SDK for early access in December. Included in the SDK will be several pre-trained AI models for tasks such as speech recognition, natural language processing, text-to-speech, speech synthesis, computer vision, and pose classification. Developers will be able to use Jarvis' pre-trained models to create AI applications that take advantage of multiple sensor and data streams.

The elephant in the room, however, is that such functionality would seem to require some degree of continuous monitoring. How can the AI know you're looking into a camera, for example, unless the camera is always on and checking for such a thing? Nvidia did not comment any further on this, but there's little doubt it will become part of a growing and ongoing conversation around AI ethics and privacy.

But even with the moral and ethical implications, Huang said the next computing revolution, one that will create a seismic shift on par with the introduction of the first iPhone, won't happen until engineers rethink data sensors with technologies like the EGX, transforming them into software-defined solutions.

“With 5G the edge can no longer be a pipe,” Huang said. “The edge has to become a computing platform.”

Chris Wiltz is a Senior Editor at Design News covering emerging technologies including AI, VR/AR, blockchain, and robotics.
