This Tiny Sensor Could Be in Your Next Headset

Neuromorphic computing company develops event-based vision sensor for edge AI apps.

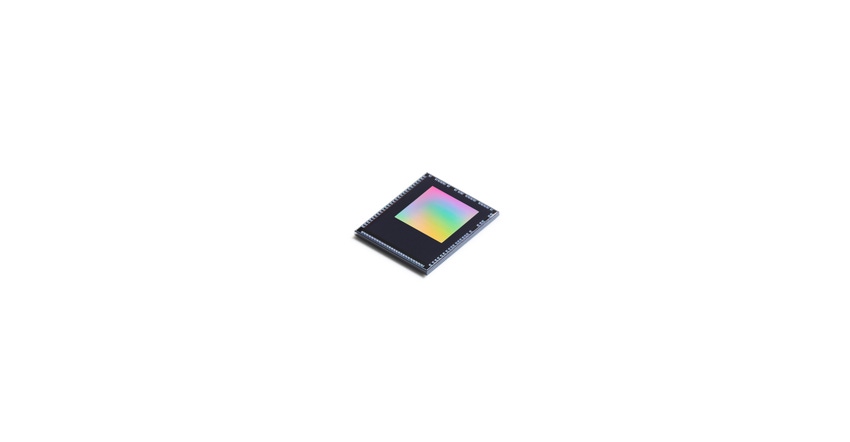

As edge-based artificial intelligence (AI) applications become more common, there will be a greater need for sensors that can meet the power and environmental needs of edge hardware. Prophesee SA, which supplies advanced neuromorphic vision systems, has introduced an event-based vision sensor for integration into ultra-low-power edge AI vision devices. The GenX320 Metavision sensor, which uses a tiny 3x4mm die, extends the company’s technology platform into growing intelligent edge market segments, including AR/VR headsets, security and monitoring/detection systems, touchless displays, eye tracking features, and always-on intelligent IoT devices.

According to Luca Verre, CEO and co-founder of Prophesee, the concept of event-based vision has been researched for years, but developing a viable commercial implementation in a sensor-like device has only happened relatively recently. “Prophesee has used a combination of expertise and innovative developments around neuromorphic computing, VLSI design, AI algorithm development, and CMOS image sensing,” said Verre in an e-mail interview with Design News. “Together, those skills and advancements, along with critical partnerships with companies like Sony, Intel, Bosch, Xiaomi, Qualcomm, and others, have enabled us to optimize a design for the performance, power, size, and cost requirements of various markets.”

Prophesee’s vision sensor is a 320x320, 6.3μm pixel BSI stacked event-based vision sensor that offers a tiny 1/5-in. optical format. Verre said, “The explicit goal was to improve integrability and usability in embedded at-the-edge vision systems, which in addition to size and power improvements, means the design must address the challenge of event-based vision’s unconventional data format, nonconstant data rates, and non-standard interfaces to make it more usable for a wider range of applications. We have done that with multiple integrated event data pre-processing, filtering, and formatting functions to minimize external processing overhead.”

Verre added, “In addition, MIPI or CPI data output interfaces offer low-latency connectivity to embedded processing platforms, including low-power microcontrollers and modern neuromorphic processor architectures.”

Low-Power Operation

According to Verre, the GenX320 sensor has been optimized for low-power operation, featuring a hierarchy of power modes and application-specific modes of operation. On-chip power management further improves sensor flexibility and integrability. To meet aggressive size and cost requirements, the chip is fabricated using a CMOS stacked process with pixel-level Cu-Cu bonding interconnects achieving a 6.3μm pixel-pitch.
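The resolution and pixel pitch quoted above fix the size of the active pixel array, which helps explain how the sensor fits on a 3x4mm die. A rough back-of-the-envelope check, assuming a square array with no gaps beyond the stated pitch (the function name is illustrative, not from Prophesee):

```python
import math

# Figures quoted in the article
PIXELS_PER_SIDE = 320
PIXEL_PITCH_UM = 6.3


def active_array_mm(pixels: int = PIXELS_PER_SIDE,
                    pitch_um: float = PIXEL_PITCH_UM):
    """Return (side_mm, diagonal_mm) of a square pixel array."""
    side_mm = pixels * pitch_um / 1000.0   # micrometers to millimeters
    diagonal_mm = side_mm * math.sqrt(2)   # diagonal of a square
    return side_mm, diagonal_mm


side, diag = active_array_mm()
print(f"side = {side:.3f} mm, diagonal = {diag:.2f} mm")
```

Under these assumptions the array measures roughly 2 mm on a side, leaving the rest of the die area for readout, processing, and interface circuitry.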

The sensor performs low-latency, microsecond-resolution timestamping of events with flexible data formatting. On-chip intelligent power management modes reduce power consumption to as low as 36μW and enable smart wake-on-events. Deep sleep and standby modes are also featured. According to Prophesee, the sensor is designed to be easily integrated with standard SoCs.

Prophesee’s Verre expects the sensor to find applications in AR/VR headsets. “We are solving an important issue in our ability to efficiently (i.e. low power/low heat) support foveated rendering in eye tracking for a more realistic, immersive experience. Meta has discussed publicly the use of event-based vision technology, and we are actively involved with our partner Zinn Labs in this area. XPERI has already developed a driver monitor system (DMS) proof of concept based on our previous generation sensor for gaze monitoring and we are working with them on a next-gen solution using GenX320 for both automotive and other potential uses, including micro expression monitoring. The market for gesture and motion detection is very large, and our partner Ultraleap has demonstrated a working prototype of a touch-free display using our solution.”

The sensor incorporates an on-chip histogram output compatible with multiple AI accelerators. The sensor is also natively compatible with Prophesee Metavision Intelligence, an open-source event-based vision software suite that is used by a community of over 10,000 users.
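The article does not describe the layout of the sensor’s histogram output, so the following is only a conceptual sketch of the general idea: counting timestamped events per pixel and polarity over a time window so that a frame-oriented AI accelerator can consume them. The `Event` type and `event_histogram` function are hypothetical names for illustration and are not part of the Metavision software.

```python
from typing import Iterable, List, NamedTuple

WIDTH = HEIGHT = 320  # resolution quoted in the article


class Event(NamedTuple):
    x: int         # column, 0..WIDTH-1
    y: int         # row, 0..HEIGHT-1
    polarity: int  # 1 = brightness increase, 0 = brightness decrease
    t_us: int      # timestamp in microseconds


def event_histogram(events: Iterable[Event],
                    t_start_us: int,
                    t_end_us: int) -> List[List[List[int]]]:
    """Count events per pixel and polarity within [t_start_us, t_end_us)."""
    # hist[y][x] holds [decrease_count, increase_count]
    hist = [[[0, 0] for _ in range(WIDTH)] for _ in range(HEIGHT)]
    for ev in events:
        if t_start_us <= ev.t_us < t_end_us:
            hist[ev.y][ev.x][ev.polarity] += 1
    return hist


events = [
    Event(10, 20, 1, 105),
    Event(10, 20, 1, 340),
    Event(10, 20, 0, 900),
    Event(300, 5, 1, 1200),  # falls outside the window used below
]
hist = event_histogram(events, 0, 1000)
print(hist[20][10])  # [1, 2]
```

Doing this accumulation on the sensor, as the article describes, would spare the host processor from handling every individual event.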

Prophesee will support the GenX320 with a complete range of development tools for easy exploration and optimization, including a comprehensive Evaluation Kit housing a chip-on-board (COB) GenX320 module, or a compact optical flex module. In addition, Prophesee will offer a range of adapter kits that enable seamless connectivity to a large range of embedded platforms, such as an STM32 MCU, speeding time-to-market.

Spencer Chin is a Senior Editor for Design News covering the electronics beat. He has many years of experience covering developments in components, semiconductors, subsystems, power, and other facets of electronics from both a business/supply-chain and technology perspective. He can be reached at [email protected].
