Qualcomm's Latest Chip Wants to be the Most Powerful for Cloud-Based AI

Qualcomm's Cloud AI 100 is a purpose-built chip for AI inferencing in the cloud that the company says outperforms similar offerings from Nvidia and other competitors.

April 9, 2019


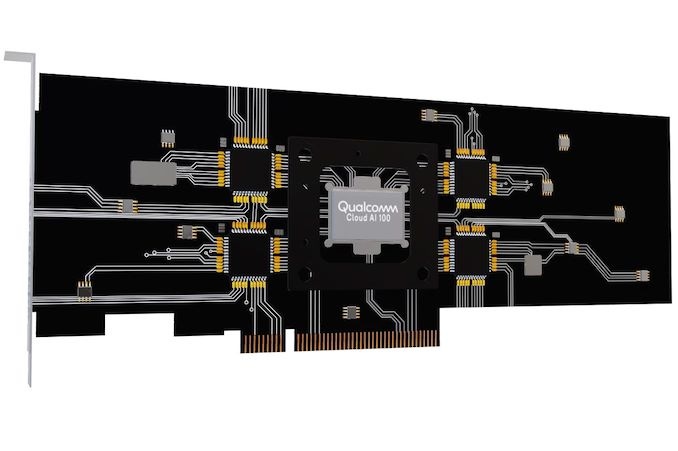

According to Qualcomm, the Cloud AI 100 chip can deliver 10 times the performance per watt of current GPU, CPU, and FPGA solutions for cloud-based AI. (Image source: Qualcomm)

Qualcomm is applying its chip-making expertise to artificial intelligence in the cloud. The company's latest chip, the Cloud AI 100, is purpose-built to accelerate AI inferencing from the cloud to the edge. This, coupled with a separate announcement of new versions of the Snapdragon 6 and 7 series aimed at bringing AI processing to mid-range smartphones, suggests Qualcomm is looking to become a major player on both sides of the cloud versus edge AI debate.

In the cloud, Qualcomm says the Cloud AI 100 can outpace other CPU-, GPU-, and FPGA-based solutions in terms of performance per watt. “Our all new Qualcomm Cloud AI 100 accelerator will significantly raise the bar for the AI inference processing relative to any combination of CPUs, GPUs, and/or FPGAs used in today’s data centers,” Keith Kressin, senior vice president of product management at Qualcomm Technologies, said in a press statement. “Furthermore, Qualcomm Technologies is now well positioned to support complete cloud-to-edge AI solutions all connected with high-speed and low-latency 5G connectivity.” According to company specs, the Cloud AI 100 can provide up to 10 times the performance per watt of the most advanced AI inference solutions currently available.

Meanwhile on the edge, Qualcomm's new Snapdragon 730, 730G, and 665 mobile platforms are touting the latest version of Qualcomm's proprietary AI Engine. The 730 and 730G employ the fourth generation AI Engine, which the company says is designed for intuitive on-device interactions for video, voice, security, and gaming. The 665 supports a third-generation version of the engine aimed, again, at providing faster on-device performance for AI.

A Matter of Inference

If your phone or another device has ever tried to guess the word you're typing or saying, or your email has suggested an automated response, you've already experienced an example of AI inferencing. Inference is the stage at which a neural network that has already been trained is run on new data to produce an answer. Training is the most compute-hungry part of the process, because the network has to work through large datasets many times to learn its parameters. At inference time, the network doesn't comb through all of that data to find, say, the exact word that was uttered in speech recognition; it applies what it learned to the new input and produces a result.

Inferencing keeps compute demands down by letting the neural network “infer” its answers. Rather than needing an exact hit every time, the network uses what it has learned to make the best guess possible. Instead of having to decide with certainty that you meant to type the word “cat,” it can guess that you may have been trying to type “can,” “cart,” “catch,” or some similar word. Experts agree that in most applications this kind of best guess is nearly as good as an exact answer, and it can be computed in a way that's optimized for runtime performance.
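As a rough illustration of the “best guess” idea, assume a trained model has already assigned a raw score to each candidate word; the words and scores below are hypothetical. A common way to turn such scores into a ranked list of suggestions is a softmax followed by picking the top few. This is a simplified sketch, not how any particular keyboard or Qualcomm's AI Engine actually works.

```python
import math

def softmax(scores):
    """Turn raw scores (logits) into probabilities that sum to 1."""
    # Subtract the largest score before exponentiating to avoid overflow.
    m = max(scores.values())
    exps = {word: math.exp(s - m) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

def top_k_guesses(scores, k=3):
    """Return the k most probable candidates as (word, probability), highest first."""
    probs = softmax(scores)
    return sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]

# Hypothetical scores a trained model might assign after the user types "ca".
scores = {"cat": 2.0, "can": 1.5, "cart": 0.3, "catch": 0.1}
for word, p in top_k_guesses(scores):
    print(f"{word}: {p:.2f}")
```

The model never has to be certain: it only ranks candidates, and the interface shows the most likely ones.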


The Latest, But Not the First

As Lian Jye Su, a principal analyst at technology advisory firm ABI Research, has noted, the market for cloud AI chips has typically been dominated by Nvidia. But with this latest announcement, Qualcomm joins a handful of new challengers in the space.

“Several other players, including established suppliers like Intel and Xilinx, and smaller players like Graphcore have already launched similar products to Qualcomm’s Cloud AI 100, targeting exactly the same audience and use-cases,” Su told Design News in an email. “According to ABI Research, cloud AI inferencing will reach US $7.5 billion in revenues by 2023. This market is so far dominated by Nvidia GPUs and Intel’s CPUs, but these platforms are not fully optimized for inference. Competition in this space is expected to heat up within the coming years, mainly with the emergence of more specialized hardware like the platform announced by Qualcomm.”

While Qualcomm is clearly looking to distinguish the Cloud AI 100 from the competition via its performance, Su said there are other factors that also need to be taken into account. Key among these will be supporting the developer community and allowing customers who implement the Cloud AI 100 chip to customize it to some degree to support specific verticals and use cases without jacking up the price or sacrificing scalability.

“The ability for players to build a strong ecosystem and developer community around their chipsets to spur both technology and business model innovation across multiple use-cases and applications is a very challenging objective for all suppliers of cloud AI accelerators, including Qualcomm,” Su said. “This technology is still in a nascent stage of development and will take time for suppliers to gather a strong ecosystem that can match the scale of existing AI inference platforms, Nvidia’s in particular.”

Qualcomm says the Cloud AI 100 can support most of the popular AI software frameworks and tools available today, including TensorFlow, PyTorch, Glow, Keras, and ONNX, but Su said the company will also need to provide developers with the right tools to work with the chip. “Qualcomm will need to ensure the AI 100 is fully compatible with the latest AI open source inferencing software. Qualcomm will also need to work on developing quantization tools that will allow the developer to synthesize the size of their neural networks when deployed across the AI 100, without compromising model accuracy,” Su said.
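Quantization, which Su mentions, stores a network's weights at lower precision, commonly 8-bit integers instead of 32-bit floats, which cuts weight storage by a factor of four at the cost of a small rounding error. The minimal sketch below uses simple symmetric per-tensor quantization on a handful of hypothetical weights; it is not Qualcomm's tooling, and real toolchains typically add calibration data, per-channel scales, and accuracy checks.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of float weights into the int8 range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # The scale maps the largest-magnitude weight onto 127; guard against all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the int8 values."""
    return [v * scale for v in q]

weights = [0.82, -1.27, 0.05, 0.4, -0.003]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Rounding to the nearest step means each weight is off by at most scale / 2.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("int8 values:", q, "scale:", scale, "max error:", max_error)
```

Tools like the ones Su describes aim to keep that rounding error from noticeably hurting model accuracy.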

These same ecosystem challenges also exist for Qualcomm's edge AI devices, Su said. “Qualcomm is not unique here as other competitors, including HiSilicon, with its Kirin 970 chipset, and MediaTek, with its Helio P90 chipset, have already enabled users of mid-range smartphones to enjoy AI features that were previously only accessible to those who could afford flagship smartphones,” he said. “Since HiSilicon is a captive vendor (meaning its chipsets are only available for Huawei smartphones) and MediaTek Helio P90 shows a very weak AI ecosystem thus far, Qualcomm is well positioned to lead AI democratization in smartphones and become a key strategic chipset supplier for many OEMs, mainly Chinese vendors, who want to bring high-end features enabled by AI to mid-range and low-end smartphones.

“Qualcomm has already built a decent ecosystem around its AI engine for smartphones. Now the company needs to keep the associated ecosystem audience, essentially app developers, engaged and invested in the platform if it wants to extend the success of its AI engines to lower tiers of the smartphone market.”

Qualcomm is expected to begin sampling the Cloud AI 100 to customers in the second half of 2019. Phones based on the Snapdragon 730, 730G, and 665 platforms are expected to be released later this year as well.

Chris Wiltz is a Senior Editor at Design News covering emerging technologies including AI, VR/AR, blockchain, and robotics.
