Can AI Lift the Electronics Industry Out of Its Doldrums?

The AI boom is projected to boost the fortunes of chipmakers, but keeping up with growing chip demand could become an issue.

Over a year ago, demand for many electronic components began falling as the pent-up demand of the COVID-19 era dramatically slowed, reflected in slumping sales of consumer goods such as PCs and flat-panel screens. Many companies saw earnings and sales fall or level off. Inventories of some parts began to rise, though shortages persist for chips used in applications such as automotive.

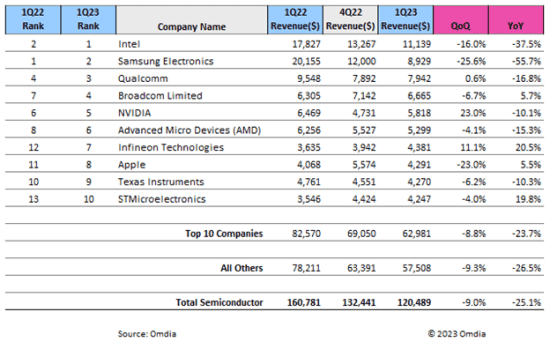

A recent report by Omdia noted that semiconductor revenue declined 9% in the first quarter of 2023, to $120.5 billion. It was the fifth consecutive quarter of revenue decline for the semiconductor industry, following a prolonged period of record revenue from the fourth quarter of 2020 through all of 2021.

Memory Market Still Weak

The Omdia report identified the memory and MPU (microprocessor) markets as two key culprits in the semiconductor industry slump. However, Omdia Senior Analyst Cliff Leimbach added, “There is demand due to generative AI. NVIDIA has seen strong revenue growth as they lead in this space, reversing the performance of most semiconductor companies to begin 2023, but other semiconductor companies have yet to take advantage of this space in a similar way.”

Another market research firm, Supplyframe, said in a recent report that most component lead times, many of which stretched out to months during the pandemic, will continue to decline in the coming months. Supplyframe also expects pricing to stabilize for most passive components and about half of semiconductors, though prices will remain high for some specialized ICs.

While these recent reports indicate that the electronics industry is not yet out of the woods, there’s light at the end of the tunnel. The growing demand for chips for AI (artificial intelligence), particularly generative AI, is expected to heavily influence the future product development plans of electronics companies.

AI has been in the headlines all year as more companies are examining this technology and looking for the best ways to leverage it. Aart de Geus, Chairman and CEO of EDA vendor Synopsys, summarized AI’s impact on the semiconductor industry during the company’s June quarter earnings announcement.

“The AI-driven, ‘smart everything’ era is putting positive pressure on the semiconductor to deliver more. Use cases for AI are proliferating rapidly, as are the number of companies designing AI chips. Novel architectures are multiplying, stimulated by vertical markets all wanting solutions optimized for their specific applications.”

The AI boom has not been lost on semiconductor companies, which are gearing up for an avalanche of emerging AI applications. Not only are the major semiconductor players active, but smaller startups are getting into the act as well.

Memory Compute Platform

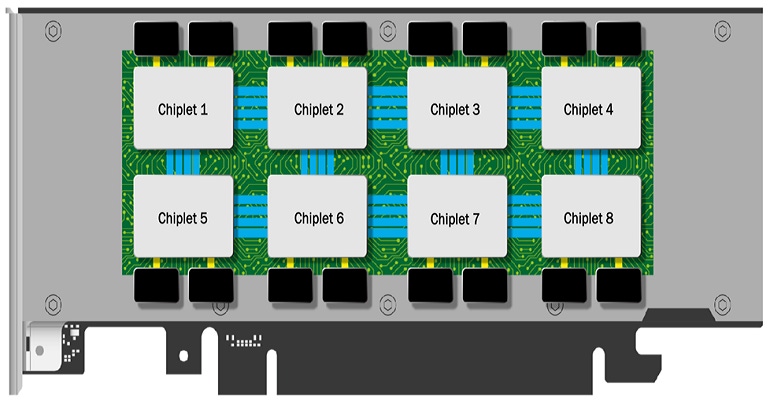

One of these startups is d-Matrix, a company founded by semiconductor industry veterans that has developed a patented in-memory compute platform for AI inference applications. The platform uses on-board SRAM to perform compute-intensive operations. This design allows all processing to be done digitally, rather than requiring additional analog circuitry to handle computation. Chiplet technology is used to package the platform’s functions together into a scalable solution.

“We wanted to build a solution that changes the cost economics to allow generative AI to become more accessible,” said Sid Sheth, CEO of d-Matrix, in a recent interview with Design News.

According to Sheth, the company’s platform is designed to give developers an alternative to costlier chips from suppliers such as Nvidia, arguably the company whose chips have made the biggest mark on the AI market to date.

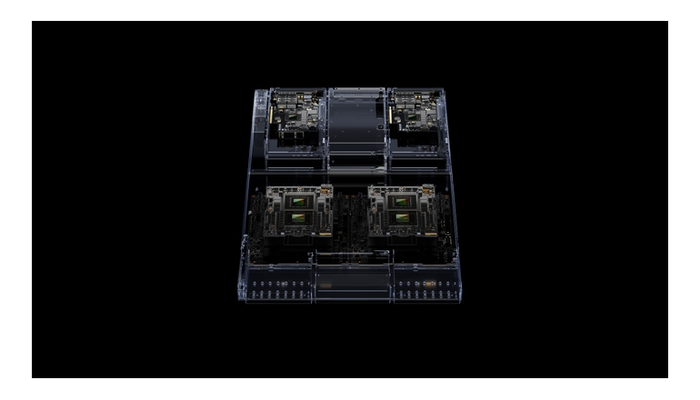

For its part, Nvidia has not stood still. The company’s latest AI processor, the next-generation GH200 Grace Hopper, is reportedly the first HBM3e processor, and it can connect multiple GPUs for accelerated computing and generative AI.

The Grace Hopper Superchip can be connected to additional Superchips via NVIDIA NVLink™, allowing them to work together to deploy the giant models used for generative AI. This high-speed, coherent interconnect gives the GPU full access to the CPU memory, providing a combined 1.2TB of fast memory in the dual configuration.

Intel, which has been developing high-speed chips for AI and other supercomputing applications, recently optimized a set of reference kits to speed AI development. The kits are built on oneAPI, an open, standards-based, heterogeneous programming model, and on components of Intel’s end-to-end AI software portfolio, such as the Intel® AI Analytics Toolkit and the Intel® Distribution of OpenVINO™ toolkit. They are intended to help AI developers streamline the process of introducing AI into their applications, enhance existing intelligent solutions, and accelerate deployment. Intel touts that the kits can cut the time to solution from weeks to days, helping data scientists and developers train models faster and at lower cost by overcoming the limitations of proprietary environments.

Is the Supply Chain Ready?

Going forward, the x-factor for semiconductor makers will be their ability to ramp up fabs, whether their own or those of manufacturing partners, to produce specialized chips for AI applications. The passage of the CHIPS Act, signed by President Biden a year ago, pumped some life into chipmakers as a flurry of new or expanded onshore fabs was announced. But given the time it takes to get these plants up and running, it remains to be seen whether the chip shortages of several years ago will return, at least in the short term.

Numerous recent online reports cite a potential shortage of high-speed processors, namely GPUs for AI applications. Companies such as OpenAI have expressed concerns that GPU shortages could in turn affect their rollout plans for additional products and services. As other companies bolster their AI services, calls for more chips are only likely to grow.

The chipmakers seem well aware of these concerns. A recent report said that Nvidia would triple production of its GPUs, including its H100 processor. But squeezing existing fabs to produce the newer high-speed chips may not be that easy. Many older fabs are designed for older, less advanced semiconductor process nodes, while the newer chips are often best optimized for newer process nodes found in newer fabs.

Spencer Chin is a Senior Editor for Design News covering the electronics beat. He has many years of experience covering developments in components, semiconductors, subsystems, power, and other facets of electronics from both a business/supply-chain and technology perspective.