NASA Interview With Terry Fong: Intelligent Robotics Group

July 2, 2013

So, I found out recently that "number 5 IS alive," except in the new millennium, it's K10!

I recently spoke to Terry Fong, director of the Intelligent Robotics Group and project manager of NASA's Human Exploration Telerobotics project. Fong stresses that human/robot collaboration in future projects will require a team approach. Physical interactions must be monitored with collision-detection algorithms and sensors, and activity coordination and the conveyance of information will be critical. Human-Robot Interaction (HRI) methods therefore need to be employed; there is even an entire conference devoted to HRI.

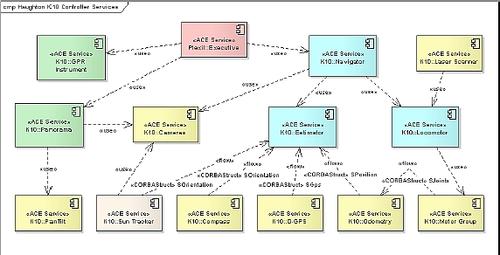

The Human-Robotic Systems Project includes four robots with very different missions. The K10 robots shown above are basically identical, apart from some differences in payload.

Communications

I was curious about why the K10 uses 802.11 Wi-Fi communications. Fong explained that this mode of wireless communication is used for Earth-based tests, such as those in the Black Point Lava Flow area in northern Arizona. A higher-level communication layer, such as the Data Distribution Service (DDS), would be used in space to link a whole fleet of robots. Fong has said, "Getting four complex robots with very different designs to use a common data system was challenging. The Data Distribution Service for Real-Time Systems standard supports very flexible service parameters. We found that we could adapt the middleware to the unique needs of each robotic system."
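DDS is a publish/subscribe standard. Participants exchange data on named topics, and each reader or writer is tuned by quality-of-service (QoS) policies such as HISTORY, RELIABILITY and DEADLINE. To illustrate what "flexible service parameters" can mean, here is a toy, in-memory model. It is not the DDS API and not NASA's middleware. It mimics a single QoS idea, a KEEP_LAST-style history depth, so that each subscriber can decide independently how many recent samples to retain. The class and field names are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Any, Deque, List


@dataclass(frozen=True)
class QoS:
    # Hypothetical stand-in for a DDS KEEP_LAST history policy:
    # retain only the `history_depth` most recent samples.
    history_depth: int = 1


class Subscriber:
    def __init__(self, name: str, qos: QoS) -> None:
        self.name = name
        self.qos = qos
        # deque(maxlen=N) silently discards the oldest sample once full
        self.samples: Deque[Any] = deque(maxlen=qos.history_depth)

    def on_sample(self, sample: Any) -> None:
        self.samples.append(sample)


class Topic:
    """Named data channel; every published sample goes to every subscriber."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._subscribers: List[Subscriber] = []

    def subscribe(self, subscriber: Subscriber) -> None:
        self._subscribers.append(subscriber)

    def publish(self, sample: Any) -> None:
        # Each subscriber applies its own QoS when storing the sample
        for subscriber in self._subscribers:
            subscriber.on_sample(sample)
```

Suppose a hypothetical `rover/pose` topic has two subscribers. A path planner that only needs the latest pose could use `QoS(history_depth=1)`, while a ground station that logs the traverse could use a deeper history. Both subscribers receive the same stream, but each stores it according to its own settings.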

Balance

I also asked whether the K10 could "right" itself if it tipped over. Fong said that the K10 would not need a self-righting capability. It is an all-wheel-drive, all-wheel-steering unit that can even out its ground pressure, and it would be kept away from dangerously steep areas. The K10 can scale hills as steep as 45 degrees and climb over rocks while moving at approximately two feet per second, a human's average walking speed.
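Fong's reasoning about keeping the rover off steep terrain can be made concrete with a simple static model. Treat the vehicle as a rigid body on a planar cross-slope. It tips sideways when the vertical line through its centre of gravity (CG) falls outside the downhill wheels, that is, when tan(slope) > half-track / CG-height. The dimensions in the usage note are hypothetical, not K10 specifications. The model also ignores dynamics, suspension compliance and sliding, which on loose soil often happens before tipping.

```python
import math


def tip_over_angle_deg(half_track_m: float, cg_height_m: float) -> float:
    """Static cross-slope angle at which a rigid vehicle tips sideways.

    Tipping begins when tan(slope) exceeds half_track / cg_height, i.e.
    when the CG passes over the downhill wheel contact line.
    """
    return math.degrees(math.atan2(half_track_m, cg_height_m))


def stability_margin_deg(slope_deg: float, half_track_m: float,
                         cg_height_m: float) -> float:
    """Extra cross-slope (degrees) the vehicle could take before tipping.

    A negative result means this static model predicts a rollover.
    """
    return tip_over_angle_deg(half_track_m, cg_height_m) - slope_deg
```

As an example, take a hypothetical half-track of 0.4 m and a CG height of 0.3 m. The static tip-over angle is then atan(4/3), about 53 degrees, which leaves roughly 8 degrees of margin on a 45-degree slope. A low, wide wheelbase pushes that margin up, and a route planner that avoids slopes near the limit makes self-righting hardware unnecessary.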

Sensors

The K10 needs to sense the world around it. The robot also carries software maps of the environment it is expected to travel through.

Sensors such as planar and 3D scanning LIDAR provide a virtual buffer against obstructions and are used to build topographic and panoramic 3D terrain models. Light Detection and Ranging (LIDAR) is a remote sensing method that uses light in the form of a pulsed laser to measure ranges (variable distances) to the Earth. In airborne surveying, these light pulses, combined with other data recorded by the airborne system, generate precise, three-dimensional information about the shape of the Earth and its surface characteristics. Such a LIDAR instrument principally consists of a laser, a scanner, and a specialized GPS receiver.
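A pulsed LIDAR measures range by timing each pulse's round trip, so range = c·t/2. The sketch below assumes an idealized planar scanner that reports one round-trip time per beam at evenly spaced angles. It converts those times to ranges and projects the ranges into x/y points in the sensor frame. It then flags any return inside a safety radius, which is a simple stand-in for the "virtual buffer" idea. The function names and the buffer check are illustrative assumptions, not the K10's actual software.

```python
import math
from typing import List, Sequence, Tuple

SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to target; the pulse travels out and back, so halve it."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def scan_to_points(ranges_m: Sequence[float], start_rad: float,
                   step_rad: float) -> List[Tuple[float, float]]:
    """Project a planar scan (one range per beam angle) into x/y points
    in the sensor frame."""
    points = []
    for i, r in enumerate(ranges_m):
        theta = start_rad + i * step_rad
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


def buffer_violated(points: Sequence[Tuple[float, float]],
                    buffer_m: float) -> bool:
    """True if any return lies inside the (hypothetical) safety radius."""
    return any(math.hypot(x, y) < buffer_m for x, y in points)
```

A 2-microsecond round trip, for instance, corresponds to a target about 300 m away. A 3D scanner applies the same time-of-flight idea while also sweeping the beam in elevation, which is what yields the point clouds used for terrain models.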

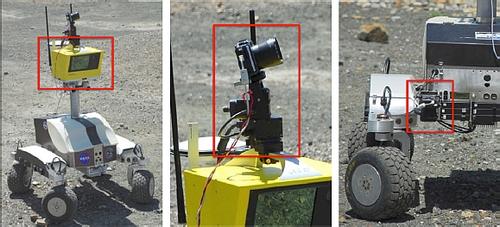

The K10 is also equipped with a GigaPan robotic camera that can take panoramic pictures containing more than a billion pixels. It also uses a microscopic imaging camera to take high-resolution photographs of the ground to help scientists analyze surface features. See the accompanying image for the location of these devices.
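A gigapixel panorama of this kind is built by shooting a grid of overlapping, zoomed-in frames and stitching them together. Here is a rough estimate of how many frames that takes, under several assumptions: a simple row-and-column grid, a fixed fractional overlap between neighbouring frames, and no special handling of 360-degree wrap-around. The field-of-view, overlap and sensor numbers in the usage note are illustrative only, not the K10 camera's specifications.

```python
import math
from typing import List, Tuple


def grid_size(pano_fov_deg: Tuple[float, float],
              frame_fov_deg: Tuple[float, float],
              overlap: float) -> Tuple[int, int]:
    """Columns and rows of frames needed to cover a panorama."""
    counts: List[int] = []
    for pano, frame in zip(pano_fov_deg, frame_fov_deg):
        step = frame * (1.0 - overlap)  # new angle each extra frame adds
        extra = math.ceil(max(0.0, pano - frame) / step)
        counts.append(extra + 1)
    return counts[0], counts[1]


def stitched_pixels(cols: int, rows: int, frame_px: Tuple[int, int],
                    overlap: float) -> float:
    """Approximate pixel count of the stitched mosaic."""
    width = frame_px[0] * (1 + (cols - 1) * (1.0 - overlap))
    height = frame_px[1] * (1 + (rows - 1) * (1.0 - overlap))
    return width * height
```

With a hypothetical 360 x 90 degree panorama, 10 x 7.5 degree frames, 20% overlap and a 4000 x 3000 pixel sensor, this gives a 45 x 15 grid of 675 frames. The stitched result is roughly 5 gigapixels, comfortably past the billion-pixel mark.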

The K10 also uses the Global Positioning System, a digital compass, a sun tracker, and an inertial measurement unit, or motion sensor, for navigation.
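The article does not describe how the K10 actually fuses these sensors. One common, simple technique for combining two of them is a complementary filter. It integrates the IMU's gyro rate for a smooth short-term heading and continually nudges that estimate toward the compass reading to cancel long-term gyro drift. This is a minimal sketch under stated assumptions: headings are in degrees, the gyro rate is in degrees per second, and the blend factor `alpha` is a hypothetical tuning value.

```python
def wrap_deg(angle: float) -> float:
    """Wrap an angle in degrees into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def complementary_heading(prev_heading: float, gyro_rate_dps: float,
                          compass_heading: float, dt: float,
                          alpha: float = 0.98) -> float:
    """One filter step; returns the new heading in [0, 360).

    alpha close to 1 trusts the gyro more; (1 - alpha) sets how fast
    the estimate is pulled toward the compass.
    """
    predicted = prev_heading + gyro_rate_dps * dt
    # Take the shortest way around the circle (e.g. 359 -> 1 is +2 degrees)
    error = wrap_deg(compass_heading - predicted)
    return (predicted + (1.0 - alpha) * error) % 360.0
```

The wrap-around handling matters for a rover heading roughly north, where compass readings jump between 359 and 0 degrees. In places where the compass is unreliable, a designer could raise `alpha` so that the gyro dominates for longer.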

The K10's digital compass is a Honeywell HMR-3000, which combines a three-axis magnetometer with a roll-and-pitch sensor. The sensor measures the magnetic field and recovers the vehicle's orientation relative to magnetic north. At lower-latitude test sites, such as the Marscape at NASA Ames, there is a relatively constant offset between magnetic and true north, called declination. But Haughton Crater, one of the test sites we discuss later in this article, is very close to the magnetic North Pole, so the magnetic field is nearly perpendicular to the surface and the declination varies from place to place. (See "Field Tests on Earth" later in this article.)
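A magnetometer combined with a roll-and-pitch sensor lets a compass rotate the measured field back into the horizontal plane before computing heading. A declination correction then converts that magnetic heading to a true heading. This is a sketch of the standard tilt-compensation math found in many magnetometer application notes. It illustrates the general technique and is not the HMR-3000's firmware. It assumes body axes of x forward, y right and z down, heading measured clockwise from north, and declination positive when magnetic north lies east of true north. It also returns the horizontal field strength. That value shrinks near the magnetic pole, which is one way to see why headings become unreliable at a site like Haughton Crater.

```python
import math
from typing import Tuple


def tilt_compensated_heading(mx: float, my: float, mz: float,
                             roll_rad: float, pitch_rad: float,
                             declination_deg: float = 0.0
                             ) -> Tuple[float, float]:
    """Return (true heading in degrees [0, 360), horizontal field magnitude).

    mx, my, mz: body-frame field components (x forward, y right, z down).
    declination_deg: positive when magnetic north is east of true north.
    """
    # Rotate the measured field back into the local horizontal plane
    xh = (mx * math.cos(pitch_rad)
          + my * math.sin(roll_rad) * math.sin(pitch_rad)
          + mz * math.cos(roll_rad) * math.sin(pitch_rad))
    yh = my * math.cos(roll_rad) - mz * math.sin(roll_rad)

    magnetic_heading = math.degrees(math.atan2(-yh, xh))
    true_heading = (magnetic_heading + declination_deg) % 360.0

    # Near the magnetic pole this gets small and heading noise grows
    horizontal = math.hypot(xh, yh)
    return true_heading, horizontal
```

If the field is nearly vertical, `xh` and `yh` are both small. Then a little sensor noise or tilt error can swing the computed heading by many degrees, and a declination value taken from a single reference point may not hold a short distance away.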
