Testing the Unanticipated: How Instrumental Brings AI to Quality Inspection

Instrumental's founder and CEO Anna-Katrina Shedletsky talks about how her company applies machine learning to quality assurance inspection, lessons she learned as an engineer at Apple, and what Industry 4.0 has gotten wrong.

September 3, 2019

There is a lot of talk of applying artificial intelligence and machine learning to manufacturing. But the big question is still: How can AI actually lead to better manufacturing outcomes? For Palo Alto, CA-based Instrumental, the best opportunity lies in using AI for quality assurance and testing.

Anna-Katrina Shedletsky (Image source: Instrumental)

By aggregating images from cameras placed throughout the manufacturing line, Instrumental uses a combination of cloud- and edge-based machine learning algorithms to detect product defects and failures throughout the manufacturing process. The company's system has already found use cases with name brands including FLIR, Motorola, and Pearl Auto.

Design News spoke with company Founder and CEO, Anna-Katrina Shedletsky, about Instrumental's machine learning technology, how her time as an engineer at Apple sparked her vision for the company, and the company's larger vision to transform supply chains forever using AI.

Design News: You spent time at Apple working on the Apple Watch. How did you transition from working at Apple to founding Instrumental?

Anna-Katrina Shedletsky: My background is as a mechanical engineer. I was at Apple for six years. I led a couple of product programs there and then I was tapped to lead some of the product design for the very first Apple Watch.

The role was very interesting because I was responsible for the engineering team that we flew to China. We stood on the [assembly] line and we'd try to find issues. I had visibility into all that minutiae, and I was really seeing how the tail can wag the dog in terms of these seemingly small defects on the line having major impacts.

DN: Was this something that was unique to Apple?

Shedletsky: No, all the companies in the space have this problem. Something like a machine stretching your battery too much can cause your Samsung Galaxy Note 7 problem. You can create these inadvertent perfect storms where these quite small things can impact when you ship the product, the schedule, how much money the company is going to make on it, how many returns there are – all of that stuff.

In my role I was continually frustrated by not having good tools to actually fix those small and minute problems – some of which are actually very big from a business perspective. So I decided to leave Apple and start a company to build that technology.

DN: Can you walk us through Instrumental's machine learning technology and how it works?

Shedletsky: The whole thing we're trying to do is to actually find defects that our customers have not yet anticipated.

If you know that there's a certain type of failure mode that could happen, as an engineer you can try to design it out or minimize it. You can also put tests in place. What Instrumental does is focus on finding these unanticipated defects.

Our neural networks learn from a very small sample of units – about 30 pieces is enough to get started. The algorithms learn what is normal based on just normal input – we don't need perfect units or defect samples – and from there we can set up tests to essentially identify new defects that weren't anticipated. We can also still look for defects that you do anticipate. So if you do find the defect you can set up an ongoing test to make sure you can catch those units in the future.
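To make the idea of learning "what is normal" from a small sample concrete, here is a minimal Python sketch. It is not Instrumental's method, which the interview describes only as neural networks; it substitutes a much simpler per-pixel statistical baseline. The function names `fit_baseline` and `anomaly_score`, the flat-list image representation, and the toy data are all assumptions made for illustration.

```python
from statistics import mean, pstdev


def fit_baseline(normal_units):
    """Learn a per-pixel mean and spread from a small set of normal units.

    Each unit is a flat list of pixel intensities, all the same length.
    (Hypothetical representation; a real system would use full images.)
    """
    columns = list(zip(*normal_units))  # one tuple of values per pixel position
    means = [mean(col) for col in columns]
    # A small floor avoids division by zero for pixels that never vary.
    stds = [max(pstdev(col), 1e-3) for col in columns]
    return means, stds


def anomaly_score(unit, baseline):
    """Return the largest per-pixel z-score of a unit against the baseline."""
    means, stds = baseline
    return max(abs(p - m) / s for p, m, s in zip(unit, means, stds))


# Toy data: 30 "normal" units with 4 pixels each. The last pixel varies a lot
# from unit to unit; the others barely change.
normal_units = [
    [0.50 + 0.01 * (i % 3), 0.20, 0.80, (i % 10) / 10] for i in range(30)
]
baseline = fit_baseline(normal_units)

typical = [0.51, 0.20, 0.80, 0.90]  # differs only where normal units also differ
odd = [0.51, 0.60, 0.80, 0.40]      # differs in a pixel that is normally stable

print(anomaly_score(typical, baseline) < anomaly_score(odd, baseline))
```

In this sketch, a pixel that varies widely across normal units gets a large standard deviation, so changes there contribute little to the score, while a change in a normally stable pixel stands out sharply.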

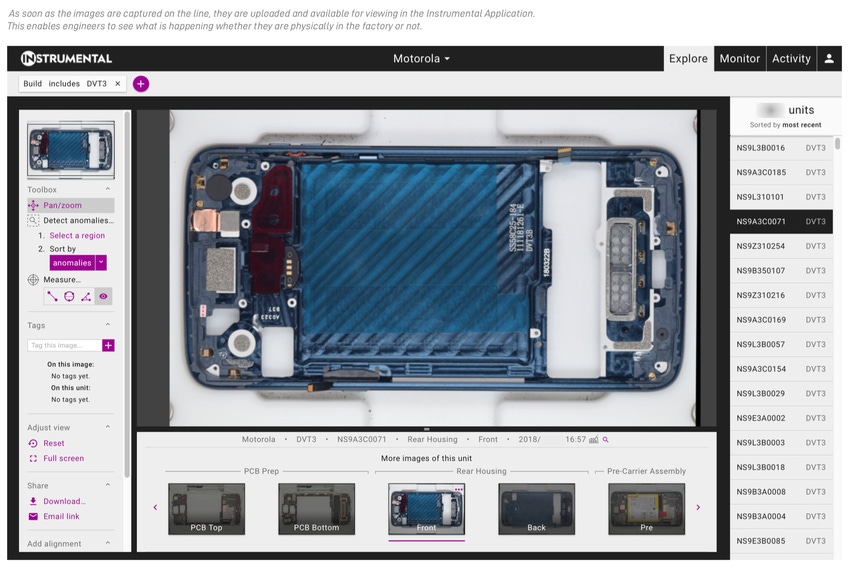

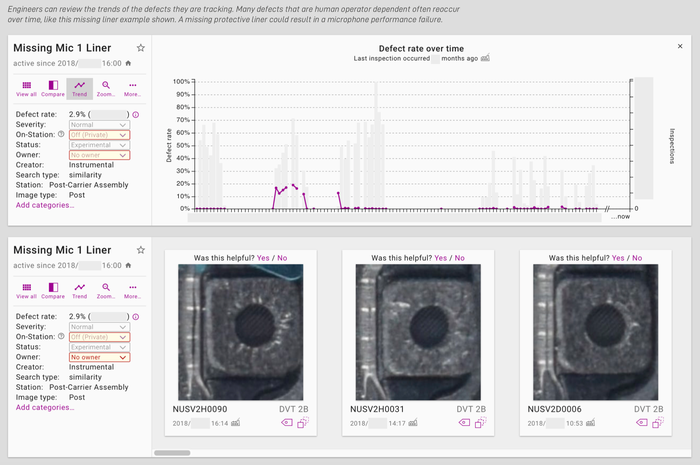

Instrumental uses machine learning algorithms to determine what product details are important or not and flag potentially serious issues for engineers.

DN: In your sampling of about 30 images or so are you basically telling the algorithm, “this is what the ideal product should look like” from various angles and it's looking for any deviations from that?

Shedletsky: Actually it's even better than that. If you have to produce an ideal unit that's really hard to do in real life. What Instrumental is trying to do is actually determine which differences matter and which differences don't matter and highlight the ones that matter.

As an engineer I'm very creative; I can come up with a thousand different ways that something can go wrong and fail. But I can't create a thousand different tests. It's not practical.

The beauty of machine learning is that we learn over time from the data set about what differences matter. An example of a difference that doesn't matter might be the orientation of the screw head. So our algorithms become desensitized to that particular area.

However, if we've seen 20,000 images that all look pretty much the same and then suddenly there's an image where something is different, there's a really high probability that that difference is important.

From an engineering perspective we're building hundreds, thousands, or in some cases even millions of units a day. I can't look at all of these images, but if a computer can show me the top one percent that are interesting I will look at those because I'm trying to see if there are true defects. Then I can set up enduring tests that make sure that we can find those defects in the future.
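The "top one percent" triage described above can be sketched in a few lines of Python. This is an illustrative assumption about how such a shortlist could be produced from per-unit anomaly scores, not a description of Instrumental's software; the function name `top_fraction`, the unit identifiers, and the scores are hypothetical.

```python
import math


def top_fraction(scores, fraction=0.01):
    """Return (unit_id, score) pairs for the highest-scoring fraction of units.

    `scores` maps a unit identifier to an anomaly score (higher = more unusual).
    At least one unit is returned whenever any scores are supplied.
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    keep = max(1, math.ceil(len(ranked) * fraction)) if ranked else 0
    return ranked[:keep]


# Toy data: 199 ordinary units with low scores and one clear outlier.
scores = {f"unit-{i}": i / 1000 for i in range(199)}
scores["unit-odd"] = 9.0

shortlist = top_fraction(scores, fraction=0.01)
for unit_id, score in shortlist:
    print(unit_id, score)
```

The engineer then reviews only the shortlist rather than every image, and any true defect found there can become an ongoing test.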

DN: Is there any sort of limit to how many tests or experiments an engineer could have running on a particular product?

Shedletsky: We haven't found it yet. In terms of running live on the line, tests do add some time, but we're talking about milliseconds. Today, if you want to add an inspection test for a human, any kind of test is going to add a second or two. Human inspectors can typically only inspect about five things total per person. So if you wanted to test five different ways something could fail, that would take up your whole human, and that human will probably take 10 seconds to do that inspection. We can do those five tests much faster and in real time.

Now, if you ran, say, a thousand tests, I think it would slow us down initially, but we would work hard to bring that back up. Our customers are typically running 10 to 15 tests on an individual kind of inspection – an inspection being a whole view of the product.

Design News: There's also a physical component necessary as well, right? Can you talk a bit more about the hardware setup required for this?

Shedletsky: We create images from an array of cameras that are deployed wherever the manufacturing lines are. The reason is that you want checkpoints that record the states of assembly before you close up the unit and it becomes hard to get back into it.

We put our vision in places where vision hasn't typically been deployed in the past. Typically industrial vision is deployed for a very, very narrow purpose like, “I want to measure this gap.” We're using it in a different way that's more generalized.

DN: Are these proprietary cameras? How does the camera system get implemented?

Shedletsky: The cameras are not proprietary. They're off-the-shelf. We can integrate if customers already have cameras that are producing images. Many of our customers do have cameras that are in reasonable spots where they want the data records; they just want this augmentation for new defects as well.

DN: How big of an infrastructure change is it for companies that don't already have large integrated camera systems? What's the implementation process like?

Shedletsky: The reason we built our own hardware stations is so that we can deploy them incredibly quickly. We do not rely on our customers to have significant infrastructure in place. We sit down with our customers, we figure out where we're going to put stations on the line, and then four weeks later we show up with equipment. We literally plug it into the wall and then we're ready to go.

We're not taking [assembly] lines down to do this. We get everything set up. It takes about an hour or two per station. We calibrate, we train everybody, and then they just run their build and we're just like a station on their line.

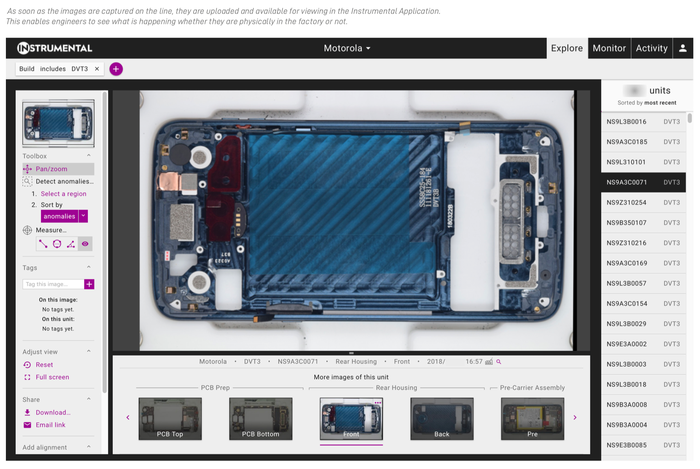

Instrumental's software displays inspection images of a product through every phase of manufacturing. (Image source: Instrumental)

DN: Your system is partially cloud-based, but there are also some edge processing capabilities within it too. Given this, how do you handle things in terms of scalability?

Shedletsky: The cloud is very scalable. We use GPU acceleration. Also, for our cloud processing we don't have to provide a result in a split second. It's okay for it to take a couple of seconds or minutes to get a result because our cloud results are latent – our users log on after the fact to see what has happened.

Once someone is expecting a real-time result, that's when we push it down to the edge, where we can control our GPU hardware on the line, which is pretty cheap these days. Thanks to Nvidia and other companies like that, we're able to provide pretty high-powered processing units with every station for not a lot of cost, which enables us to do our computations very quickly.
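The cloud-versus-edge split described above amounts to a routing decision based on how quickly a result is needed. The Python sketch below illustrates that decision only; the names `InspectionJob`, `Target`, and `choose_target`, and the five-second cloud latency figure, are assumptions for illustration and do not come from Instrumental.

```python
from dataclasses import dataclass
from enum import Enum

# Assumed figure for illustration only: a rough cloud round trip, in seconds.
ASSUMED_CLOUD_LATENCY_S = 5.0


class Target(Enum):
    EDGE = "edge"    # GPU hardware at the station on the line
    CLOUD = "cloud"  # shared, GPU-accelerated cloud processing


@dataclass(frozen=True)
class InspectionJob:
    station_id: str
    max_wait_s: float  # how long the consumer of the result can wait


def choose_target(job: InspectionJob) -> Target:
    """Send a job to the edge only when the cloud would be too slow for it."""
    if job.max_wait_s < ASSUMED_CLOUD_LATENCY_S:
        return Target.EDGE
    return Target.CLOUD


# A pass/fail decision on the live line versus a review an engineer reads later.
live_check = InspectionJob(station_id="station-1", max_wait_s=0.05)
later_review = InspectionJob(station_id="station-1", max_wait_s=600.0)

print(choose_target(live_check).value, choose_target(later_review).value)
```

Keeping latency-tolerant work in the cloud leaves the per-station hardware free for the checks that have to finish while the unit is still on the line.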

DN: Does the cloud also offer advantages in terms of deploying updates to your algorithms?

Shedletsky: Absolutely. Our algorithms are constantly learning, so each individually tuned algorithm essentially learns on its own what it's supposed to be doing based on very limited feedback.

We're constantly evaluating our algorithm against a kit of aggressive cases to actually improve our detection in challenging cases. We've made significant improvements and strides in that area and we continue to invest there.

In the last quarter, for example, we actually built new algorithms that can automatically read barcodes from an image. The barcode is important because that's how you get traceability to look up a unit later and go back and figure out what's wrong with it.

DN: Is there any sort of crosstalk going on behind the scenes where the algorithm is learning not just from where it's deployed but from algorithms deployed with other companies as well?

Shedletsky: There is no portability in the learning – meaning if we're working with a company and their proprietary data is in our system they own their data. So one customer's data is not being used directly to inform another customer's result. We're working on improving the algorithm performance for their specific detection.

However, there are opportunities from having access across the data set to make universal algorithmic improvements and do some really cool stuff that can do some useful automation. We're actually working on that now with customers who have given us permission to use their data.

DN: Instrumental sits in a very interesting space at a time when there are a lot of conversations happening around AI, robotics, and factory automation. How do you see your work playing a role in the larger trend towards Industry 4.0?

Shedletsky: As far as the future, I think when people sit back and dream about the future of the manufacturing space, they see robots everywhere. But they're actually dreaming of something much bigger than that.

People think automation and Industry 4.0 is the answer, but that's only halfway there. What people are really dreaming about and want is autonomy. But automation is not autonomy.

DN: So you see Industry 4.0 as only a stepping stone to something larger?

Shedletsky: Autonomy is a whole other level on top. It's a brain that will create factories that can actually self-heal and self-optimize. That's what Instrumental is building. We're building brains. We have an application that has to do with visual inspection today, but within the next year we'll be working on data that's not just visual in nature.

I talked about all the waste that occurs in manufacturing to bring one product to market. We can start to chip away at that if the line itself can actually improve. And I'm thinking much bigger than an individual line. You've got to zoom way out because there's a whole supply chain and there is an opportunity to connect the data all the way from the top of the supply chain down to the customer.

DN: Ultimately, would Instrumental like to link all of these pieces of the supply chain together in an autonomous way?

Shedletsky: Yes, and the way that we're doing that is, frankly, very different than other companies who talk about Industry 4.0. We're a manufacturing data company that does not sell to the big manufacturers. Our customers are the brands that build and design these products, whose logos go on the product – because the brands own the supply chain.

Having access across the supply chain is really where the value is going to be in the long term – having all of that data and creating a brain that can process that data and push back correction.

In the first steps it will be pushing insights to a person who's still evaluating those insights before they implement an action. The next step is to actually tell the human why the feedback is happening so they don't have to do that stuff either. Eventually, we'll get to a point where we can even take the action. There will be robots on the line to handle those things and we'll just plug right in.

That's the blue sky opportunity of the space. And I think that the Industry 4.0 people have it wrong. To make an analogy to automotive: If the goal is a sort of Level 5 autonomy for manufacturers, Industry 4.0 is only Level 3.

*This interview has been edited for content and clarity.

Chris Wiltz is a Senior Editor at Design News covering emerging technologies including AI, VR/AR, blockchain, and robotics.
