Teaching Autonomous Cars to Learn

October 29, 2015

For autonomous cars to be successful, they can’t just think. They have to learn.

That’s a vexing problem for engineers. But it’s the reality facing the creators of tomorrow’s self-driving cars. Machine learning – in which software algorithms actually learn and make predictions based on sensor data – will play an important role in the development of those vehicles.

“The autonomous car will have to have the ability to adapt, based on rules it learns by itself,” said Ian Chen, manager of marketing for systems, applications and software at Freescale Semiconductor Inc.

For engineers, it will be a lot like raising children: Teach the vehicles how to learn; oversee their data-gathering process; then set up rules to help them make good decisions.

The reason for doing it that way is obvious. No engineer can anticipate every eventuality. No one can imagine every on-road scenario. “A self-driving vehicle can always run well on a test track,” said Wensi Jin of MathWorks, which makes data analysis and simulation software. “But once you release it to the world, you have to make sure it functions well in all kinds of road, weather, and traffic conditions.”

Dealing With Data

Accomplishing that is at least a two-step process. The first step is to enable the car to deal with the massive amount of sensor data based on the eventualities that the engineers can foresee.

That, too, is no easy task. To understand the complexity of it, consider a car with an autonomous braking system. The braking system must deal with a virtual blizzard of information. The first and most obvious data stream comes from the wheel speed sensors – the car must know how fast it’s going. But to operate autonomously, it needs more than that. It needs cameras to know what’s in front of it. It needs radar, LIDAR and infrared sensors to determine the nature and proximity of forward objects. It also needs data from tire pressure monitoring sensors (did a tire blow?), vehicle-to-vehicle communications sensors (is there a hazard ahead?), and gyroscopes (is the car yawing?). Experts say that future cars will even use audio microphones to help determine if they are driving through rain, ice, or snow.

All of that data will need to be analyzed before a vehicle can “decide” to apply its brakes. “The car has to know about something to react to it,” Chen said. “If it doesn’t have cameras, if it doesn’t have radar, if it doesn’t have LIDAR, it can’t see anything. It can’t avoid something as simple as a garbage can in the road.”
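To make the idea concrete, here is a minimal Python sketch of how a braking controller might turn two of those data streams into a decision. It is not drawn from any automaker's system: the `SensorSnapshot` fields, the `should_brake` function, and the 2-second threshold are all hypothetical, and a time-to-collision check stands in for the far richer analysis a production system would need.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SensorSnapshot:
    """One fused reading (hypothetical structure, for illustration only)."""
    speed_mps: float                 # own speed, from wheel speed sensors
    object_speed_mps: float          # speed of the object ahead, e.g. from radar
    range_m: Optional[float] = None  # gap to the object; None if nothing is detected


def should_brake(s: SensorSnapshot, ttc_threshold_s: float = 2.0) -> bool:
    """Return True when the estimated time to collision drops below the threshold.

    The 2.0-second default is an assumed value, not a published figure.
    """
    if s.range_m is None:
        # No forward object reported, so there is nothing to react to.
        return False
    closing_speed = s.speed_mps - s.object_speed_mps
    if closing_speed <= 0:
        # The gap is steady or growing.
        return False
    time_to_collision = s.range_m / closing_speed
    return time_to_collision < ttc_threshold_s
```

A car traveling 20 m/s toward a stationary garbage can 30 meters ahead has 1.5 seconds to impact, so `should_brake(SensorSnapshot(20.0, 0.0, 30.0))` returns `True`. With no forward sensor reporting a range, the function has nothing to act on, which is Chen's point.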

Even the most mundane tasks will require sensors. Dipsticks, for example, will one day be equipped with sensors because self-driving cars have no other way of checking the oil.

That sensor-heavy scenario will be a departure from the current norm. Today’s cars typically use about 100 sensors. Within a few years, that number could climb to 200. “It could go up ten-fold by the time we get to self-driving cars,” Chen said.

Moreover, the data itself won’t be simple. Instead of 1-Hz frequencies, it could be 100 Hz. Instead of an on-off voltage signal, it could be a waveform or an image.

For control system designers, the complexity is already reaching critical levels. “When you go beyond 15-20 sensors, it becomes difficult for the engineers to hold all the sensor interactions in their heads and come up with an optimal system,” Chen added.

Increasingly, the solution lies in the use of design tools. Many automakers already employ model-based simulation for algorithm development in vehicle control systems. Simulation enables engineers to model the controllers and all their sensor inputs, even automatically generating the software code in some cases. Nissan Motors, for example, has used MathWorks’ Simulink to model the engine controllers and vehicle sensors that help it meet EPA standards. Similarly, Tata Motors has employed Simulink on the engine control system in its Nano vehicle.

Adapting to Change

In autonomous vehicles, however, the challenges will be greater. The cars will have to deal with Big Data, and the rules themselves may change.

A simple example of that lies in GPS-based navigation and parking. In some scenarios, LED-based street lights would employ cameras to help cars find parking spaces. Such cameras would send massive, constantly changing data streams to vehicles looking for open spots. To deal with them, autonomous vehicles would have to process data from off-board servers, then adapt to the changes in real time.

Similarly, self-driving cars may have to learn how to predict their own mechanical problems, and the rules for doing so may change on the fly. If a vehicle fleet using Big Data sees a new pattern of engine failures, for example, the car itself might have to adjust.

"More and more, there's a trend toward using data to predict things," said Paul Pilotte, technical marketing manager for MathWorks. "You collect the data in real time, then have a command center that tracks, say, a fleet of engines. If you have a failure that's happening before scheduled maintenance, being able to predict it ahead of time can save a tremendous amount of cost."

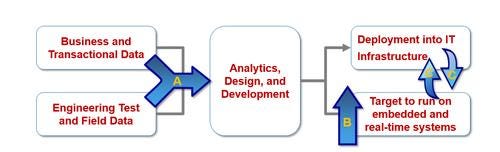

For engineers, the key is to have analytical tools that can take the sensor data, process it, and aid in the development of predictive algorithms. Such algorithms could reside on a desktop, a remote server, or even on an embedded system in the vehicle.
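As a rough illustration of what such a predictive algorithm might look like at its simplest, the Python sketch below flags engines whose latest reading sits far from the fleet average. Everything here is an assumption made for the example: the `flag_outliers` function, the engine IDs, the temperature readings, and the z-score threshold of 2.0. A plain z-score test stands in for the machine learning techniques the MathWorks managers describe.

```python
from statistics import mean, stdev


def flag_outliers(fleet_readings: dict[str, float],
                  z_threshold: float = 2.0) -> list[str]:
    """Return the IDs of engines whose reading deviates strongly from the fleet.

    fleet_readings maps a (hypothetical) engine ID to its latest sensor value.
    """
    values = list(fleet_readings.values())
    if len(values) < 2:
        # A spread cannot be estimated from fewer than two readings.
        return []
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        # Every engine reports the same value, so nothing stands out.
        return []
    return sorted(
        engine_id
        for engine_id, value in fleet_readings.items()
        if abs(value - mu) / sigma > z_threshold
    )


# Made-up coolant temperatures (deg C) for a ten-engine fleet.
readings = {f"engine_{i}": t for i, t in enumerate(
    [90, 91, 89, 90, 92, 90, 91, 89, 90, 120], start=1)}
flagged = flag_outliers(readings)
```

Here `flagged` contains only `engine_10`, the one unit running well above the rest. The same function could run on a desktop, a server, or an embedded controller, since it relies only on the Python standard library.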

"We're seeing interest from the auto industry in adopting the tools of data analytics," said Seth DeLand, data analytics marketing manager for MathWorks. "They want to apply machine learning and Big Data techniques to their sensor data."

Ultimately, such tools could enable all manner of on-board control systems - from back-up cameras to adaptive cruise controls to fully autonomous driving systems - to learn from data and change their algorithms over time.

Experts expect that capability to grow more important as autonomous cars evolve. "Learning is one of those things that you can choose not to turn off," said Chen of Freescale. "Chances are, when we've deployed enough machine algorithms, it never will be turned off."

Senior technical editor Chuck Murray has been writing about technology for 31 years. He joined Design News in 1987, and has covered electronics, automation, fluid power, and autos.
