Users Drive Innovation in Flash Memory

October 4, 2012

Consumers today are demanding more and more from technology, and nowhere is this more evident than in the interfaces we use to interact with our devices. There is an escalating need for devices, from mobile phones to cars to home networks to workplace machines, to integrate simple-to-use, intuitive user interfaces that increasingly mirror how humans interact with one another. In addition, consumers expect their devices to perform complex tasks with ease.

With each new product cycle, the pressure on designers to create flawlessly operating, more intuitive UIs mounts. Following the recent proliferation of touchscreen interfaces on everything from phones, tablets, and monitors to point-of-sale systems, ATMs, and kiosks, voice recognition is rapidly becoming the next UI to drive product innovation and adoption. It's only a matter of time before voice recognition, and even more evolved UIs such as gesture and image recognition, become standard in how we interact with our various work and personal devices. Voice recognition adoption has lagged somewhat in embedded applications and remains in its early stages, but it is clear that voice recognition as a UI will eventually be widely adopted. The automobile industry, for example, plans to introduce more sophisticated models with improved embedded voice recognition features.

Part of the reason for the slower adoption of voice recognition is that progressively more intuitive UIs require far more processing power and memory. This, in turn, is driving innovations in flash memory technologies. As most designers know, the more intuitive the user interface, the more complex the technology platform and design enabling the UI needs to be. UI technologies will become more compute-intensive and require more advanced memory to accommodate high-performance processing while maintaining an optimal user experience. One solution is specialized hardware in the form of dedicated coprocessors built from next-generation memory combined with logic and incorporating flexible software algorithms. These coprocessors can act as separate hardware accelerators, offloading the main application processor to enable some of the richest user experiences the market has seen.

The evolution of HMIs

Human-machine interfaces (HMIs) have come a long way from the advent of the computer mouse. Innovation in user interfaces has historically contributed to the successful adoption of new devices -- for example, the evolution from input buttons on older mobile phones to the touchscreen on a smartphone. Inventing compelling user interfaces is challenging and requires complex systems to create a functional, accessible, logical, and enjoyable user experience. These complex systems require reliable, high-performance hardware that can keep pace with processing and memory bandwidth demands. As innovation in the core functionality of end products approaches maturity, consumers are increasingly turning to the industrial design and user interface of the product to guide purchasing decisions. Manufacturers are taking notice, and flash manufacturers and designers alike are being pressed to innovate rapidly in response. Voice recognition is the focus of this next wave of innovation in HMIs.

How voice recognition processing works

With advanced user interfaces becoming the de facto standard in consumer electronics, embedded systems increasingly demand high-performance processing capabilities. In general, voice recognition can be broken down into a three-step process.

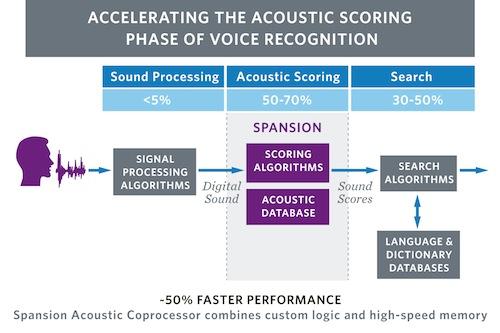

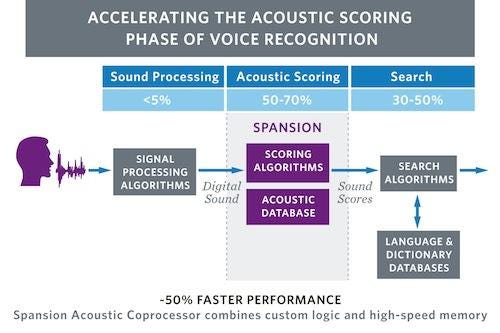

The first step is the sound processing phase, which takes less than 5 percent of the processing load. During this phase, the incoming voice signal is converted from analog to digital. This is also the phase when filtering, noise suppression, and echo cancellation take place to help distinguish the sounds of the speaker from other sounds being picked up erroneously. The output of this step is a stream of digital sounds, each as unique as a fingerprint. In the second step, the system matches each of these sounds against a library of sounds, a.k.a. the acoustic model. This matching process, which can take from 50 percent to 70 percent of the processing load, is called acoustic scoring. The resulting sound scores are the input for the third step, where the system interprets those sounds as words by searching the language and dictionary models. This search phase takes from 30 percent to 50 percent of the processing load.
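The three steps above can be sketched as a toy pipeline. This is a minimal illustration only, not how a production recognizer works: the two-number "feature frames", the `ACOUSTIC_MODEL` and `DICTIONARY` tables, the noise-gate threshold, and every function name are hypothetical stand-ins chosen to make the data flow between the steps visible.

```python
import math

# Hypothetical toy acoustic model: each sound label maps to a reference feature vector.
ACOUSTIC_MODEL = {
    "g": (1.0, 0.0),
    "o": (0.0, 1.0),
    "n": (1.0, 1.0),
}

# Hypothetical toy dictionary model: sequences of sounds mapped to words.
DICTIONARY = {
    ("g", "o"): "go",
    ("n", "o"): "no",
    ("o", "n"): "on",
}


def process_sound(frames, noise_floor=0.1):
    """Step 1: crude stand-in for filtering and noise suppression (a simple noise gate)."""
    return [tuple(0.0 if abs(x) < noise_floor else x for x in frame) for frame in frames]


def acoustic_scores(frames):
    """Step 2: score every frame against every entry in the acoustic model.

    A higher (less negative) score means the frame is closer to that reference sound.
    """
    return [
        {label: -math.dist(frame, ref) for label, ref in ACOUSTIC_MODEL.items()}
        for frame in frames
    ]


def search(scores):
    """Step 3: pick the dictionary word whose sound sequence has the best total score."""
    best_word, best_total = None, -math.inf
    for sounds, word in DICTIONARY.items():
        if len(sounds) != len(scores):
            continue  # this toy search only compares sequences of matching length
        total = sum(frame_scores[s] for frame_scores, s in zip(scores, sounds))
        if total > best_total:
            best_word, best_total = word, total
    return best_word


def recognize(frames):
    """Run the three steps in order: sound processing, acoustic scoring, search."""
    return search(acoustic_scores(process_sound(frames)))


print(recognize([(0.9, 0.05), (0.05, 1.1)]))
```

Even in this toy form, step 2 compares every frame with every model entry and step 3 walks every dictionary entry, which hints at why scoring and search dominate the processing load as the models grow.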

Traditionally, this entire process is handled by a CPU that must also handle multiple other tasks. Since voice recognition is highly compute- and memory-intensive, sharing resources for voice recognition in embedded solutions often results in unacceptable latencies or too little bandwidth to handle the software models, which keep growing in size to achieve better accuracy.
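To put the load figures quoted above in perspective, the short calculation below estimates how much of the recognition workload would remain on the shared CPU if one step were moved elsewhere. It is a back-of-the-envelope sketch: the stage ranges come from the text, but the choice of which step to offload and the names `STAGE_LOAD` and `cpu_share_after_offload` are assumptions made purely for illustration.

```python
# Share of the recognition workload per step, as (low, high) percentages from the text.
# "Less than 5 percent" is represented here as the range 0 to 5.
STAGE_LOAD = {
    "sound_processing": (0, 5),
    "acoustic_scoring": (50, 70),
    "search": (30, 50),
}


def cpu_share_after_offload(stage):
    """Return the (low, high) percentage of recognition work left on the main CPU
    if the named step ran somewhere else (hypothetical scenario)."""
    low, high = STAGE_LOAD[stage]
    return 100 - high, 100 - low


# Assumption for illustration: acoustic scoring is the step being offloaded.
print(cpu_share_after_offload("acoustic_scoring"))
```

Under that assumption, between 30 and 50 percent of the recognition work would stay on the main processor, compared with all of it in the traditional arrangement.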
