What Happened To Intel’s Early Facial Recognition Platform?

What started out as a 2D to 3D depth camera has evolved to include tracking and LiDAR capabilities.

January 14, 2020

Facial recognition technology is one of the big trends at CES 2020. That’s not surprising, since the facial recognition market is expected to grow from USD 5.07 billion in 2019 to USD 10.19 billion by 2025, according to Mordor Intelligence. The hardware market is segmented into 2D and 3D facial recognition systems, with the latter expected to grow the most in the coming decade.

Image Source: Intel / SID

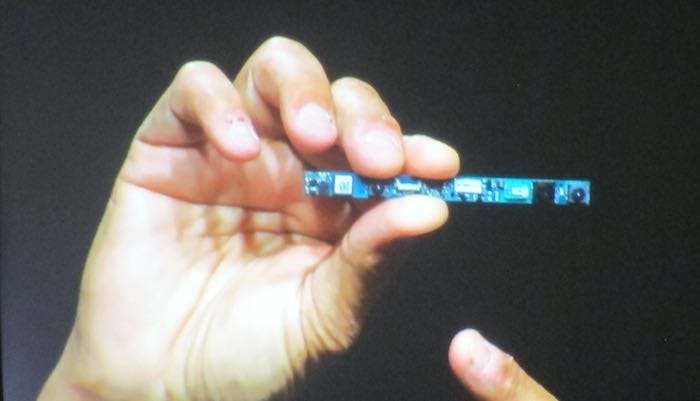

One of the early hardware platforms that would enable facial recognition was Intel’s RealSense. When the platform was first introduced in 2015, it was positioned as a way for PCs, mobile phones and robotic systems to see beyond two dimensions (2D). The smart-camera-based system could sense the third dimension, depth, to better understand objects in its environment. Since then, the camera-based system has become smaller yet better performing, thanks to the scaling benefits of Moore’s Law.

One of the reasons for the early adoption and growth of the system was that software developers had free access to all of the RealSense APIs. These interfaces interacted with the camera to enable motion tracking, recognition of facial expressions (from smiles and frowns to winks) and more. Gesture tracking was also provided so that developers could create programs for cases when users could not touch the display screen, such as while following a recipe in the kitchen.

“Computers will begin to see the world as we do,” explained Intel’s then-CEO Brian Krzanich at the 2015 Society for Information Display conference. “They will focus on key points of a human face instead of the rest of the background. When that happens, the face is no longer a square (2D shape) but part of the application.”

At the time, one of the early companies adopting the technology was JD.com, a Chinese online consumer distributor. JD.com replaced its manual tape-measure measurements with container dimensions captured by the RealSense camera platform. This automation saved almost 3 minutes per container in measurement time.

Image Source: Intel / SID

Back then, the big deal was to move from 2D to 3D computing, where the third dimension really meant adding depth perception. An example of this extra dimension was given by Ascending Technologies, a German company that used the Intel platform to enable a drone to fly quickly through a forest, including up and down motions. Accomplishing this feat required multiple cameras and a processor.

Now, fast forward to CES 2020, where Intel’s RealSense has further evolved into a platform that supports not only depth perception but also tracking and LiDAR applications. Tracking is accomplished with the addition of two fisheye lens sensors, an Inertial Measurement Unit (IMU) and an Intel Movidius Myriad 2 Vision Processing Unit (VPU). The cameras scan the surrounding areas and the nearby environment. These scans are then used to construct a digital map that can be used to detect surfaces and for real-world simulations.

One application of depth perception and tracking at CES was a robot that would follow its owner and carry things. Gita, the cargo robot from the makers of Vespa, not only followed its owner but also tracked their whereabouts on the CES exhibitor floor.

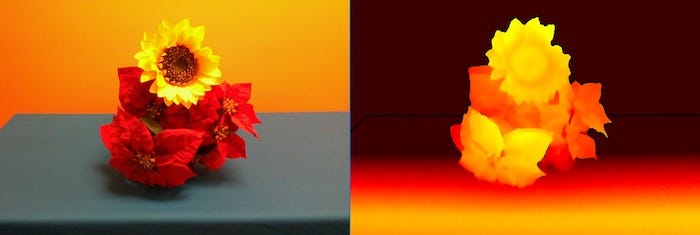

LiDAR (Light Detection and Ranging) was the newest addition to the RealSense platform. LiDAR cameras allow electronics and robots to “see” and “sense” the environment. This remote sensing technology measures the distance to a target by illuminating it with laser light and then measuring the reflected light. It is very accurate and is being used in the automotive industry to complement ultrasonic sensors and conventional cameras.
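To make the ranging idea concrete, here is a minimal Python sketch of the generic pulsed time-of-flight calculation, in which distance is half the round-trip travel time multiplied by the speed of light. This illustrates the general principle only; it is not a description of how Intel’s LiDAR camera works internally. The function name `distance_from_round_trip` and the 20-nanosecond reading are hypothetical and chosen purely for illustration.

```python
# Generic pulsed time-of-flight ranging (an illustrative assumption,
# not Intel's implementation).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by SI definition


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in meters for a measured round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the target and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


if __name__ == "__main__":
    # Hypothetical reading: the reflection arrives 20 ns after emission.
    print(f"{distance_from_round_trip(20e-9):.3f} m")  # prints "2.998 m"
```

The arithmetic shows why such sensors need very fine timing: a target about 3 meters away returns its reflection in roughly 20 billionths of a second.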

At CES 2020, one of the highlighted LiDAR applications was a full-body, real-time 3D scan of people. Another application of LiDAR was skeletal motion tracking with the Cubemos Skeletal Tracking SDK, which boasted the capability to integrate 2D and 3D skeleton tracking into a program with a mere 20 lines of code. The SDK provided full-body skeleton tracking of up to 5 people.

Image Source: Intel / RealSense LiDAR

Since its release roughly five years ago, Intel’s RealSense platform has faced many competitors, including Google Scale, Forge, ThingWorx Industrial IoT, and several others. Such healthy competition attests to the market for compact, relatively inexpensive camera platforms that are capable of depth perception, object tracking and LiDAR-based scanning of shapes.

John Blyler is a Design News senior editor, covering the electronics and advanced manufacturing spaces. With a BS in Engineering Physics and an MS in Electrical Engineering, he has years of hardware-software-network systems experience as an editor and engineer within the advanced manufacturing, IoT and semiconductor industries. John has co-authored books related to system engineering and electronics for IEEE, Wiley, and Elsevier.