Apple vs. the FBI vs. Our Privacy

Apple's refusal to comply with an FBI request to unlock a mass shooter's iPhone has set off a debate at all levels of the technology sector. Here's everything you need to know about this landmark legal battle.

March 7, 2016

On December 2, 2015, about 80 people were attending a company holiday party at the Inland Regional Center in San Bernardino, Calif. We don't know what the argument was about or what sparked it, but after a dispute with a coworker, Syed Rizwan Farook, a 28-year-old environmental engineer for the San Bernardino County Health Department, left the event, only to return later with his wife, Tashfeen Malik. Both were heavily armed with semi-automatic pistols and rifles and were reportedly wearing black tactical gear.

Less than four minutes later the couple had killed 14 people and injured 22 others. The couple would both die later that same day in a shootout with San Bernardino police. Days later, after the FBI opened a counter-terrorism investigation and uncovered Farook's ties to ISIL, the San Bernardino attack officially became the worst terrorist attack on US soil since 9/11.

As part of its investigation, the FBI recovered Farook's iPhone 5C, which had been provided to him by his employer. The bureau hoped information on the phone would prove valuable both for understanding the background of the shooting and for preventing possible future attacks. But there was one hitch: investigators couldn't unlock the phone, and in attempting to do so they risked losing all of its data.

On February 9, 2016, the FBI turned to Apple for help in cracking Farook's iPhone, an action that would require defeating the smartphone's built-in encryption.

Apple's response: Absolutely not.

The FBI responded by obtaining a court order under the All Writs Act of 1789, requiring Apple to create and provide the FBI with software that would open Farook's phone. Under the All Writs Act, a federal judge can compel individuals and companies to perform actions, as long as those actions fall within the law. It has been used in the past to compel manufacturers to release data from their devices in criminal cases. Apple should be no different, right?

Apple fought back. The case is ongoing in the California courts, and whether Apple will be (or should be) forced to comply with the FBI's demands has set off a debate throughout the technology industry.

The guns used in the San Bernardino attack.

(Image source: San Bernardino County Sheriff's Department [Public domain], via Wikimedia Commons)

Setting a Dangerous Precedent

“We have great respect for the professionals at the FBI, and we believe their intentions are good. Up to this point, we have done everything that is both within our power and within the law to help them,” Apple CEO Tim Cook wrote in a customer letter dated February 16. “But now the US government has asked us for something we simply do not have, and something we consider too dangerous to create. They have asked us to build a backdoor to the iPhone.” In the letter Cook also states: “For many years, we have used encryption to protect our customers’ personal data because we believe it’s the only way to keep their information safe. We have even put that data out of our own reach, because we believe the contents of your iPhone are none of our business.”

Apple contends that creating a backdoor into the iPhone would not only violate its policy of protecting its customers' data, but it would also open up the popular smartphone for dangerous cyber attacks and abuse by hackers in the future. Once the backdoor is open, Apple believes, there will be no way of shutting it. Not to mention that this would set a dangerous precedent whereby the government opens a Pandora's box of personal privacy invasions. “The implications of the government’s demands are chilling,” Cook said. “If the government can use the All Writs Act to make it easier to unlock your iPhone, it would have the power to reach into anyone’s device to capture their data. The government could extend this breach of privacy and demand that Apple build surveillance software to intercept your messages, access your health records or financial data, track your location, or even access your phone’s microphone or camera without your knowledge.”

And a large part of the cybersecurity community agrees. On March 2 a group of seven security experts submitted an amicus brief to the California court expressing their concern that the order against Apple “endangers public safety.”

Among the authors of the brief was Charlie Miller. If that name sounds familiar, he was one of the researchers who exposed a massive vulnerability in Fiat Chrysler vehicles that allowed him to remotely take control of a moving Jeep. Miller also has a history of working with Apple, helping the company plug various security holes in the iOS operating system. The experts' argument rests on four main points:

1.) “The Court’s Order Will Most Likely Force Apple To Create An Insecure Version of iOS Capable of Bypassing Passcode Functionality On Any iPhone”

2.) “Apple Will Likely Lose Control Of the Code, Due Either to Legal Compulsion or Theft”

3.) “The Court’s Order Would Set A Precedent For Forcing Vendors to Turn Their TVs and Other Consumer Goods Into FBI Surveillance Tools”

4.) “The Court’s Order Risks Undermining Critical Public Trust in Automatic Software Security Updates”

The brief goes on to state that, “Experience and history lead to the conclusion that forcing a company to undermine its own product security will thereby imperil not just the cybersecurity but also the physical security of its worldwide users.” The authors concluded that because of the potential dangers to public security and safety, “...Congress, and law enforcement alike should refrain from dictating to companies that they must weaken or bypass security features or build forensic capabilities into their products...”

But It's Just One Phone, Right?

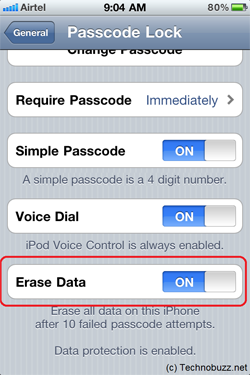

Here's how newer iPhones (any model running iOS 8 or later) work. Every piece of data is encrypted in the phone's flash storage. Whenever you enter your passcode to unlock the phone, the passcode is combined with the iPhone's unique ID number to create an encryption key that unlocks your data. Not even Apple knows the phone's ID number. To keep hackers from brute-forcing the phone (trying every possible passcode combination until something works), Apple's software requires that the passcode be entered through the phone's touchscreen, and it offers an option to delete all of the phone's contents after 10 failed passcode attempts.
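For readers who think in code, the scheme described above can be sketched roughly as follows. This is a toy model under stated assumptions, not Apple's implementation: the `ToyPhone` class and `derive_key` function are hypothetical names, PBKDF2 stands in for whatever derivation the hardware actually performs, and a real device would not keep the derived key around just to check guesses.

```python
import hashlib
import hmac
import os

MAX_ATTEMPTS = 10  # failed-attempt limit described in the article


def derive_key(passcode: str, device_uid: bytes) -> bytes:
    """Mix the passcode with a device-unique ID to get a 256-bit key.

    Assumption: PBKDF2-HMAC-SHA256 is a stand-in, not Apple's real derivation.
    """
    return hashlib.pbkdf2_hmac("sha256", passcode.encode(), device_uid, 10_000)


class ToyPhone:
    """Hypothetical model of the lock-screen logic, for illustration only."""

    def __init__(self, passcode: str, auto_erase: bool = True):
        self._uid = os.urandom(32)  # unique per device, never leaves it
        # Simplification: keep the derived key only to verify later guesses.
        self._key = derive_key(passcode, self._uid)
        self._failed = 0
        self.auto_erase = auto_erase
        self.wiped = False

    def unlock(self, guess: str) -> bool:
        if self.wiped:
            return False  # key material is gone; nothing can be decrypted
        candidate = derive_key(guess, self._uid)
        if hmac.compare_digest(candidate, self._key):
            self._failed = 0
            return True
        self._failed += 1
        if self.auto_erase and self._failed >= MAX_ATTEMPTS:
            self._key = b""  # discard the key, making the data unreadable
            self.wiped = True
        return False
```

Because the key depends on both the passcode and the device ID, the same passcode yields a different key on every phone, and guesses have to be made on the device itself, where the attempt counter applies.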

Given that each phone has a unique ID, any bypass of Farook's phone would be unique to that device. However, creating the software to do so opens up the possibility of other phones being cracked, and Apple and other security experts just aren't willing to take that chance. Even if this were all done inside some sort of ideal Mission: Impossible-style super-security hub, experts say the knowledge would still get out. In an interview with the MIT Technology Review, Andy Sellars, a lawyer with the Cyberlaw Clinic at Harvard Law School, said, “How long will that room really stay clean? The privacy benefit right now comes from the fact that nobody knows how to do this. Not Apple, not the FBI, and we think not the NSA, though maybe they do...As soon as Apple does this, there’s no way this wouldn’t get out, be stolen, be leaked. There is no way that would stay a secret.”

iPhones running iOS 8 and newer have an optional security feature that deletes all of the phone's data after 10 failed passcode attempts.

(Image source: Technobuzz.net)

The word on the street is that many people are siding with and applauding Apple, if not for the company's dedication to privacy in the post-Edward Snowden era, then at least for the perception that it is sticking it to the man. Large tech companies including Microsoft, Reddit, and eBay have pledged support for Apple, as has the ACLU. However, by Apple's own admission, it has worked with law enforcement in the past to obtain private data from Apple products. The San Bernardino case is unique and requires a solution beyond the typical workarounds Apple has used before.

“When the FBI has requested data that’s in our possession, we have provided it. Apple complies with valid subpoenas and search warrants, as we have in the San Bernardino case,” Cook says in his letter. “We have also made Apple engineers available to advise the FBI, and we’ve offered our best ideas on a number of investigative options at their disposal. We have great respect for the professionals at the FBI, and we believe their intentions are good. Up to this point, we have done everything that is both within our power and within the law to help them.”

Trust The Government?

For its part, the FBI has issued a statement saying that this is a singular case and that the agency is not trying to set any sort of precedent for circumventing privacy in the future. In a letter dated February 26, FBI Director James Comey asked that a broader view of the circumstances be taken:

“The particular legal issue is actually quite narrow. The relief we seek is limited and its value increasingly obsolete because the technology continues to evolve. We simply want the chance, with a search warrant, to try to guess the terrorist’s passcode without the phone essentially self-destructing and without it taking a decade to guess correctly. That’s it. We don’t want to break anyone’s encryption or set a master key loose on the land. I hope thoughtful people will take the time to understand that. Maybe the phone holds the clue to finding more terrorists. Maybe it doesn’t. But we can’t look the survivors in the eye, or ourselves in the mirror, if we don’t follow this lead.”
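To put the "decade" remark in perspective, here is a rough back-of-the-envelope calculation of worst-case guessing time once the erase option and touchscreen requirement are out of the way. The figure of about 80 milliseconds per attempt is an assumption used for illustration (Apple's security documentation has cited a delay of roughly that size), and the function name is hypothetical.

```python
SECONDS_PER_ATTEMPT = 0.08  # assumed cost of one passcode guess on the device
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def worst_case_seconds(alphabet_size: int, length: int) -> float:
    """Time to try every passcode of the given length, one after another."""
    return (alphabet_size ** length) * SECONDS_PER_ATTEMPT


if __name__ == "__main__":
    cases = {
        "4-digit PIN": (10, 4),
        "6-digit PIN": (10, 6),
        "6 chars, lowercase + digits": (36, 6),
    }
    for label, (alphabet, length) in cases.items():
        seconds = worst_case_seconds(alphabet, length)
        print(f"{label}: {seconds / 3600:.1f} hours "
              f"({seconds / SECONDS_PER_YEAR:.2f} years)")
```

Under that assumption, a four-digit PIN falls in about 13 minutes and a six-digit PIN in about 22 hours, while a six-character passcode of lowercase letters and digits takes roughly five and a half years. The passcode on Farook's phone was not public, so which case applies is unknown.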

John Carlin, assistant attorney general for national security, has said that this whole issue can be resolved very simply and without setting any sort of new legal precedent. According to the MIT Technology Review, Carlin points out that since Farook's iPhone was provided by his employer (San Bernardino County), it is the county that actually owns the phone. All Apple has to do, according to Carlin, is comply with the county's request to unlock Farook's phone. The company would then be fulfilling a customer request, not one from the US government. No precedent set, merely customer service.

And not all security experts believe Apple is handling this situation correctly. In that same article, cryptography expert Adi Shamir criticized Apple, saying that the company has “goofed” and should have worked with the FBI, then shored up the backdoor later with an improved version of iOS. “Apple should close this loophole and roll out an operating system that will really help them make this argument in future,” Shamir said.

No matter where the technology community, law enforcement, or the nation as a whole comes down on the issue, a court hearing will be held in California on March 22 to decide it. Whatever the court decides, expect either Apple or the Department of Justice to file an appeal.

Where do you fall on this issue? If you were Apple would you comply with the FBI? Share your opinion with us in the comments!

Chris Wiltz is the Managing Editor of Design News.

[Main image source: FreeDigitalPhotos.net / Tuomas_Lehtinen]
