6 Times AI Didn't Live Up to the Hype

There's a lot of hype surrounding artificial intelligence, but it's important not to lose sight of the technology's realities and what it can and can't actually do.

February 20, 2018

Hollywood hasn't done artificial intelligence any favors, according to Oliver Christie. Speaking at the recent 2018 Pacific Design & Manufacturing Show in Anaheim, Calif., Christie, a consultant specializing in artificial intelligence, said we're in danger of letting the hype and hyperbole around AI cloud our thinking about the technology and its true capabilities.

“We think of AI in a war type setting,” he told the audience. “We think of the technology as if we're in a sci-fi world, but we're not. And these views are impacting decisions made in the real world.”

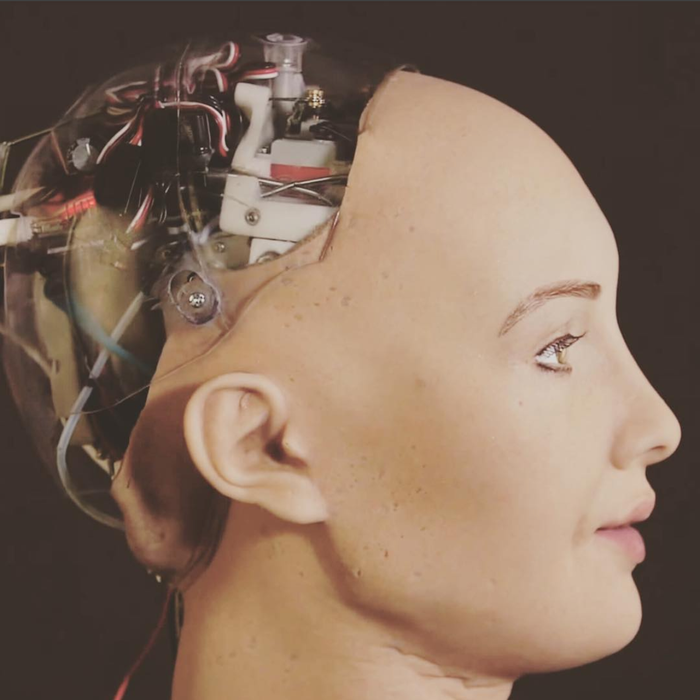

Aliens used to top the list of fantastical things we feared would come and destroy the world. But now there's strong evidence that AI has taken the top spot. Between the scenarios painted in shows like Westworld and in movies ranging from 2001 to Terminator and Ex Machina, Christie said, film and TV are doing for AI what Jaws did for sharks.

Indeed, people have accused AI of all sorts of shenanigans, from eavesdropping on us to creating Bitcoin as part of a master plan to take over the world. Exaggerated scenarios like these can have a real influence on the policymakers and engineers overseeing AI.

“I don't want regulation to come from Hollywood,” Christie said. “People in government panic; they overreact, and if we're not careful we're going to have a straitjacket put on [AI] very quickly.”

But tackling AI hype isn't a daunting task, merely a matter of having a clearer conversation about what AI and machine learning can really do. In that spirit, we present six times AI failed to live up to the hype and what it really means for our industries and society.


Chris Wiltz is a Senior Editor at Design News, covering emerging technologies including AI, VR/AR, and robotics.
