COBOL Coders Needed for Coronavirus Fight

One of the oldest programming languages is still in demand, but thanks to open source compilers and online learning, it’s easier than ever to add COBOL to your skill set.

April 23, 2020

Once every decade or so, the call goes out for Common Business-Oriented Language (COBOL) programmers. Although over 60 years old, the language is still in use today.

COBOL programmers are needed because so many states’ systems still run on older mainframe computers. In many states, the unprecedented number of unemployment claims caused by COVID-19-related economic hardship is requiring changes to existing COBOL programs. As but one example, Connecticut has admitted it is struggling to process the large volume of unemployment claims with its 40-year-old, mainframe-based COBOL applications.

The problem isn’t just with unemployment systems. According to Reuters, COBOL code is used in almost 95 percent of ATM transactions. The language is even used to power 80 percent of in-person transactions. The report claims that there are still 220 billion lines of COBOL code being used in production today. Scarier still is that COBOL systems handle $3 trillion in commerce every day.

Some of us will remember the pre-cloud computing days when the popular buzzwords were rightsizing and client-server network architectures. Back then, in the 1990s, PC clients and Internet-connected servers were meant to replace older mainframe computers for most enterprise-grade operations. The migration from mainframes to client-server networks (today we might say edge-to-cloud networks) was particularly urgent as the end of the millennium heralded the famous Year 2000 or Y2K problem.

The Y2K issue arose because many of the original mainframe-based software programs, written in languages like COBOL and RPG, used two-digit years in dates to save on expensive computer memory and scarce mass storage. For example, the year 1990 was represented in such programs by only its last two digits, “9” and “0.” Once the new millennium arrived, programs based on the two-digit year format would not be able to distinguish between 1900 and 2000. Economic chaos would surely ensue, and it would be TEOTWAWKI (The End Of The World As We Know It).
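To make the two-digit ambiguity concrete, here is a minimal sketch in Python (chosen for readability, not because the legacy systems used it). The function names and the pivot value of 50 are illustrative assumptions. "Windowing" is shown as one common style of remediation, not necessarily what any particular state system did.

```python
def naive_expand(yy: int) -> int:
    """Legacy assumption: every two-digit year belongs to the 1900s."""
    return 1900 + yy


def windowed_expand(yy: int, pivot: int = 50) -> int:
    """Windowing fix: two-digit years below the pivot are read as 20xx.

    The pivot of 50 is an illustrative assumption, not a standard.
    """
    if not 0 <= yy <= 99:
        raise ValueError("expected a two-digit year")
    century = 2000 if yy < pivot else 1900
    return century + yy


def years_between(start_yy: int, end_yy: int, expand) -> int:
    """Elapsed years between two stored two-digit years."""
    return expand(end_yy) - expand(start_yy)


# A record opened in '99 and processed in '00:
print(years_between(99, 0, naive_expand))     # -99
print(years_between(99, 0, windowed_expand))  # 1
```

With the naive expansion, the year 2000 is read as 1900, so an interval that should be one year comes out as minus 99. Windowing avoids widening the stored field, but it only postpones the ambiguity until dates cross the chosen pivot.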

Thanks to warnings from leading software gurus of the time, like Edward Yourdon, much of the susceptible Y2K code was repaired before the end of the millennium.

The takeaway is that, regardless of the calamity, COBOL programmers seem always to be in short supply.

My Take:

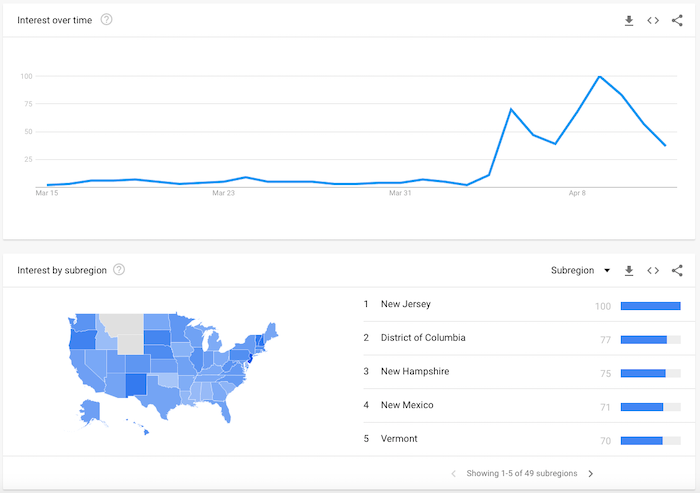

Yep – we need more COBOL programmers to get us through this latest programming crisis. State governments and large companies should simply hire existing older COBOL programmers or spend money to train new ones. Not surprisingly, there has been a surge in Google queries on the search term “Learn COBOL” over the last few weeks. It’s really not that difficult a language, although it is archaic, and appreciating the basic workings of a mainframe would help.

LinkedIn Learning is just one of many places providing COBOL training. Its course aims to “help new and experienced programmers alike add COBOL (or add COBOL back) to their skill set.” Among the many topics will be a review of COBOL's data types and constants, control structures, file storage and processing methods, tables, and strings. That should be fun.

Did you know there is even a visual version of COBOL? One company, Micro Focus, claims that its Visual COBOL suite will future-proof your COBOL business applications. It will even interface with Microsoft’s Azure.

Finally, and perhaps most importantly, there is now an open source COBOL compiler. These compilers have traditionally been closed source and expensive, since most COBOL code was written in corporate environments. Thankfully, the open source GNU package named GnuCOBOL is available to all.

Image Source: Google Trends

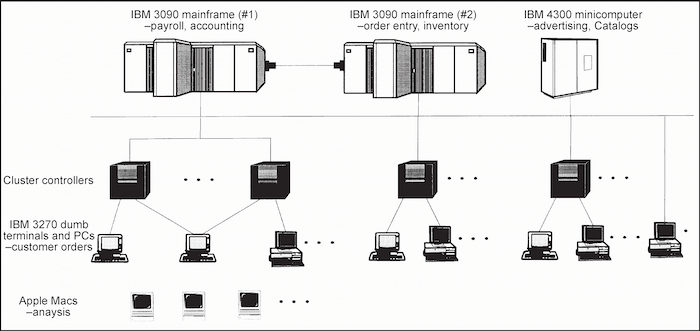

To help get COBOL enthusiasts in the right frame of mind, consider the following system-level problem faced by businesses in the late 1990s. If you can solve it, you’ll be a likely systems-level candidate for modern COBOL challenges. Then all you’ll need to do is learn the actual programming language in your spare time.

Problem Statement: The government in your favorite state urgently needs to convert some legacy applications written in COBOL on mainframes to the new downsized environment consisting of Sun servers and PC clients. The state had to decide whether the code should be compiled (essentially unchanged) on the new servers, first translated to C, or rewritten from scratch. Since the state had already concluded that a client/server architecture was the way to go, it knew that the code would have to be changed significantly in some places. The hope was to break out large modules that could be left mostly unchanged. At the same time, the state was worried about characteristics of the old code that would break over time. The biggest concern was the transition from December 31, 1999, to January 1, 2000. How much of the old code assumed a year of 19xx? The code could be recompiled, or tools could be used to translate it to another language, but those tools would not add any understanding of what went on in the legacy applications. The state also worried that there were subtle bugs in the old code, such as the use of uninitialized memory, that had not shown up yet due to luck or because some logic paths had never (or had rarely) been used.

Solution: What would address this problem? Would you convert the changed portions of the COBOL code to C, Java, etc.? How would you test, verify and validate these changes? What would be your backup plan? What API or GUI would you use to input data into the new program in the future?

How would you conduct the acceptance phase of this project, i.e., divorcing the direct connection between the legacy application and its database management system? This would be a very challenging process because the connections were all through the old code. But after moving these out and isolating them in one code block, the legacy application would become a true tiered application and the client/server system would be very flexible, able to run the GUI, business logic, and database on three different machines, if necessary. At least, that would be the hope.
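As a rough illustration of what isolating the database connections in one code block could look like, here is a minimal Python sketch. Every name in it (`Claim`, `ClaimStore`, `InMemoryClaimStore`, `add_supplement`) is hypothetical, and the in-memory dictionary merely stands in for a real database management system.

```python
from dataclasses import dataclass
from typing import Dict, Protocol


@dataclass
class Claim:
    claim_id: str
    weekly_benefit: int  # whole dollars, to keep the arithmetic simple


class ClaimStore(Protocol):
    """Data tier: the only place that knows how claims are stored."""

    def load(self, claim_id: str) -> Claim: ...

    def save(self, claim: Claim) -> None: ...


class InMemoryClaimStore:
    """Stand-in for the real database; a dict plays the role of the DBMS."""

    def __init__(self) -> None:
        self._rows: Dict[str, Claim] = {}

    def load(self, claim_id: str) -> Claim:
        return self._rows[claim_id]

    def save(self, claim: Claim) -> None:
        self._rows[claim.claim_id] = claim


def add_supplement(store: ClaimStore, claim_id: str, extra: int) -> Claim:
    """Business tier: applies a rule without touching storage details."""
    claim = store.load(claim_id)
    updated = Claim(claim.claim_id, claim.weekly_benefit + extra)
    store.save(updated)
    return updated


store = InMemoryClaimStore()
store.save(Claim("A-1", 300))
print(add_supplement(store, "A-1", 600).weekly_benefit)  # 900
```

Because the business rule depends only on the `ClaimStore` interface, the storage implementation could in principle be swapped or moved to another machine without changing the rule itself, which is the flexibility the tiered design is hoping for.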

Image Source: What’s Size Got To Do With It

About the Author(s)

John Blyler is a Design News senior editor, covering the electronics and advanced manufacturing spaces. With a BS in Engineering Physics and an MS in Electrical Engineering, he has years of hardware-software-network systems experience as an editor and engineer within the advanced manufacturing, IoT and semiconductor industries. John has co-authored books related to system engineering and electronics for IEEE, Wiley, and Elsevier.