Yes, AI Can Be Tricked, and It's a Serious Problem

Adversarial examples represent potentially very dangerous flaws in artificial intelligence systems that researchers are still working to understand and overcome.

November 9, 2018


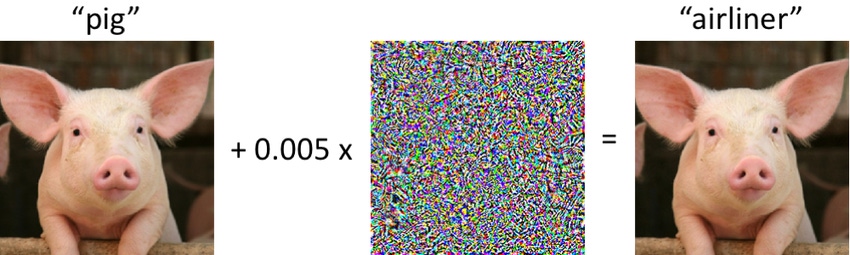

By introducing imperceptible perturbations into an image of a pig, researchers were able to trick an image recognition system into classifying it as an airliner. (Image source: MIT CSAIL)

In 1985, famed neurologist Oliver Sacks released his book, The Man Who Mistook His Wife for a Hat. The titular case study involved a man with visual agnosia, a neurological condition that renders patients unable to recognize objects or, in this case, creates wild disassociations in objects (i.e., mistaking your wife's head for a hat). It's a tragic condition, but also one that offers neurologists deep insight into how the human brain works. By examining the areas of the brain that are damaged in cases of visual agnosia, researchers are able to determine what structures play a role in object recognition.

While artificial intelligence hasn't reached the levels of sophistication of the human brain, it is possible to approach AI research in the same way. Would you ever mistake a pig for an airliner? What about an image of a cityscape for a Minion from "Despicable Me"? Have you ever seen someone standing up and thought they were lying down? Chances are, you haven't. But machine learning algorithms can make these sorts of mistakes where a human never would.

Giving the system “brain damage”—understanding where mistakes occur in the system—allows researchers to develop more robust and accurate systems. This is the role of what are called adversarial examples—essentially, optical illusions for AI.

Plenty of humans have been fooled by optical illusions or sophisticated magic tricks, but we go about our daily lives recognizing objects and sounds pretty accurately. An image that seems obvious to a human, however, can trick an AI because of tiny anomalies, or perturbations, in the image. These perturbations are imperceptible to humans, but they can cause AI to make errors and miscalculations that no healthy human ever would. Thus, an image of a city street that looks normal to a human can contain hidden perturbations that make an AI system think it is looking at a Minion character. (Facebook AI researchers demonstrated this exact case in a 2017 study.)

Creating adversarial examples is an important part of ensuring that algorithms and neural networks are as robust as their biological counterparts—particularly when deployed in the real world. After all, we want an object to be recognized as what it is no matter what size, orientation, or color it is or the amount of noise or interference laid over it.


And we don't want these systems to be deliberately and easily fooled. The ability to imperceptibly trick an AI has wider implications for the overall fidelity and performance of AI systems, and it raises deeper security concerns as well.

In a 2018 study presented at the Conference on Computer Vision and Pattern Recognition (CVPR), researchers were able to cause a neural network to misclassify road signs using adversarial examples. Another 2018 study, by researchers at the University of California, Berkeley, showed that adversarial perturbations hidden in audio recordings can trick a speech recognition system into transcribing what sounds like a normal message to any human as a completely different message of the attacker's choosing. Imagine an attacker sending Alexa or a similar assistant an audio recording that sounds like a simple query about the day's weather but actually carries hidden malicious instructions.

Extrapolating cases like these points to a number of potential real-world hazards. Malicious attackers could use adversarial examples to pass explicit images through filters, deliver viruses and malware into systems, sneak past surveillance cameras, and even trick autonomous and semi-autonomous vehicles into ignoring traffic signals, signs, and lane markers.

While such attacks haven't happened in the real world yet, they represent a very real threat that researchers and developers need to get ahead of.


Facebook AI researchers used a new method of developing adversarial examples to trick an AI looking at a city street into thinking it was looking at a Minion. (Image source: Facebook AI Research)

No Simple Trick

The challenge for AI researchers today is that current methods of creating adversarial examples, though effective, don't necessarily yield results with the quality and accuracy that researchers and developers want. This is particularly true for applications beyond image recognition, such as speech recognition, human pose estimation (locating a person's body joints in an image or video), and machine translation (automatically translating text from one human language to another).

A typical adversarial example is created by introducing a small disturbance into an input. In the case of an image, that may mean slightly altering pixel values. In the case of speech, it may mean adding imperceptible background noise or distortion to the audio. Humans overcome all of these disturbances with ease, but they can be problematic for AI.
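To make the idea of a small pixel disturbance concrete, here is a minimal sketch of one well-known technique, the fast gradient sign method (FGSM). It is not the Houdini method discussed later, and it is not taken from any of the studies cited here. The classifier is a hypothetical 100-pixel logistic model, and every name and number in it (`predict`, `fgsm_perturb`, the weights, the pixel values, and `epsilon`) is an assumption chosen purely for illustration.

```python
import math

def predict(weights, bias, pixels):
    """Probability that a toy logistic classifier assigns to class 1."""
    score = sum(w * x for w, x in zip(weights, pixels)) + bias
    return 1.0 / (1.0 + math.exp(-score))

def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def fgsm_perturb(weights, bias, pixels, label, epsilon):
    """Shift each pixel by at most epsilon in the direction that raises the loss."""
    p = predict(weights, bias, pixels)
    # For logistic loss, d(loss)/d(pixel_i) = (p - label) * weight_i.
    grads = [(p - label) * w for w in weights]
    shifted = [x + epsilon * sign(g) for x, g in zip(pixels, grads)]
    # Clamp so every value stays a valid pixel intensity in [0, 1].
    return [min(1.0, max(0.0, v)) for v in shifted]

# Hypothetical 100-pixel "image" and model, made up for this example.
weights = [1.0 if i % 2 == 0 else -1.0 for i in range(100)]
bias = 0.0
pixels = [0.52 if i % 2 == 0 else 0.48 for i in range(100)]

adversarial = fgsm_perturb(weights, bias, pixels, label=1, epsilon=0.05)

print("clean prediction:      ", round(predict(weights, bias, pixels), 3))
print("adversarial prediction:", round(predict(weights, bias, adversarial), 3))
print("largest pixel change:  ", max(abs(a - b) for a, b in zip(adversarial, pixels)))
```

No pixel moves by more than 0.05 on a 0-to-1 scale, yet the many tiny shifts add up and push the toy model's prediction from class 1 to class 0. Real attacks on deep networks follow the same principle but compute the gradient through the whole network.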

The issue with these methods is that the results they give don't necessarily translate into an immediate means of improving the neural network or system. The quality of speech recognition systems, for example, is measured by their word error rate. So, if it's possible to trick a system into mistaking the word “apple” for “orange,” the question for researchers is: What is the solution? Should the system be trained more on recognizing those specific words? Should it be given a better understanding of the overall context of a sentence to better infer what the word might be? Maybe another solution entirely?
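For readers unfamiliar with the metric, word error rate is conventionally computed as the number of word substitutions, deletions, and insertions needed to turn the system's transcript into the reference transcript, divided by the number of words in the reference. The sketch below is a plain edit-distance implementation under that standard definition; the function name `word_error_rate` and the example sentences are illustrative assumptions, not part of any cited study.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion: reference word missing
                curr[j - 1] + 1,     # insertion: extra hypothesis word
                prev[j - 1] + cost,  # substitution, or a match if cost is 0
            ))
        prev = curr
    return prev[-1] / len(ref)

# One substituted word out of five reference words.
wer = word_error_rate("i ate an apple today", "i ate an orange today")
print(wer)
```

A single number like this shows that the system made a mistake, but not why, which is exactly the gap the questions above point to.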

Added to this is that adversarial examples crafted for one model don't always transfer to another. Because neural networks differ in their layers and in what each layer learns, researchers often need to create different adversarial examples to attack specific models. This can be time-consuming and complex.

Research groups are working to tackle this problem, however. In 2017, researchers from Facebook AI Research (FAIR) in Paris and Bar-Ilan University in Israel published a study about a new, flexible method, dubbed Houdini, for testing neural networks for potentially dangerous classification errors.

In this video, researchers demonstrate tricking an AI into thinking a 3D-printed turtle is actually a rifle. (Source: 2018 International Conference on Machine Learning)

The goal of Houdini is to move toward a one-size-fits-all method—one that can create powerful adversarial examples across models and applications. The easier it is for researchers to create powerful and precise adversarial examples, the better they can work to improve AI.

True to its namesake, Houdini deceives AI into seeing and hearing what it shouldn't—all with perturbations even less perceptible than those created with older methods. Houdini attacks AI with a level of sophistication that allows adversarial examples to be applied in applications beyond image recognition—overall, providing researchers with a deeper understanding of how neural networks and algorithms function and how and why they make mistakes.

Closing this gap between our expectations and how AI algorithms perform is an important step—particularly for advancing AI toward increasingly sophisticated applications, such as autonomous vehicles and high-level manufacturing automation. Malicious hacking aside, even unforeseen errors and illusions by an AI can result in time and money lost on the factory floor. As Harry Houdini himself once said, “What the eyes see and the ears hear, the mind believes.”

Chris Wiltz is a Senior Editor at Design News covering emerging technologies including AI, VR/AR, and robotics.
