How AI at the Edge Is Defining Next-Generation Hardware Platforms

In preparation for DesignCon 2020, here's a look at some of the latest research being done on AI inference at the edge.

November 18, 2019

The Center for Advanced Electronics through Machine Learning (CAEML) has been very active in the newly established machine learning track at DesignCon, helping to present many quality papers from the hardware design community.

Celebrating its third anniversary this year, CAEML has been at the forefront of machine learning and its applications in hardware and electronic design. Much of the center's research has direct applications in the area of hardware and device management through machine-learned inference – from proactive hardware failure predictions, to complex performance modeling through surrogate models, to high dimensional time series prediction for resource forecasting.

This article will take a look at some of the results of this research and its applications for AI-defined, next-generation hardware platforms.

IoT Is Demanding AI at the Edge

There has been explosive growth in Internet of Things (IoT) devices in recent years. Analysts at Gartner predict the IoT will produce about $2 trillion US in economic benefit in the next five to 10 years. Managing so many devices is a major challenge. To that end, the International Electrotechnical Commission (IEC) has come up with a three-tier framework to manage IoT devices: enterprise, platform, and edge.

Of the three tiers, managing IoT at the edge tier has become one of the hottest topics in recent years. As seen in Figure 1, the initial generation of IoT management was cloud-centric, where sensor data were collected from the field, then processed and analyzed at the enterprise or platform tier. However, a tremendous amount of data needs to flow back to the cloud and a huge amount of data processing power is needed to structure and analyze it.

Figure 1: The three-tier IoT management framework.

Advances in artificial intelligence (AI), combined with the availability of various field sensor data, allow for intelligent IoT management using AI at the edge. When inference happens at the edge, the net traffic flowing back to the cloud is greatly reduced, and the response time for IoT devices is cut to a minimum because management decisions are made on premises (on-prem), close to the devices.

Figure 2: Cloud-based IoT management.

A good amount of research has been done on AI inference at the edge to support IoT devices. The bulk collection of sensor data and the training of AI engines can be done at the enterprise or platform tier. The resulting AI models can then be downloaded to the edge tier, which performs intelligent management through inference on sensor data.

A variety of scaled-down AI hardware, such as GPUs, vector processors, and FPGAs, has been proposed to provide efficient and cost-effective inference at the edge. This is possible because typical IoT functions are relatively simple and repetitive. A well-trained model can easily be fitted into a simple inference engine to perform the necessary tasks.

Figure 3: IoT management using edge inference.

Small, Medium, and Deep Learning Models for Edge-based AI

It is easy to assume that the edge tier approach, with simple GPU inference hardware for IoT, can be scaled up to manage more complex hardware systems such as compute servers, storage, or networks. However, a close examination of the applications for complex hardware system management shows that the answer is not that simple. We can roughly divide the challenges for complex hardware system management at the edge into three groups: small learning, medium learning, and deep learning models.

Figure 4: Three classes of models for edge inference.

The small learning models are machine learning inference models with a feature size of less than 100. They typically serve reliability-related applications such as hardware/software failure prediction, and security applications such as ransomware detection. While the models can be small because of the small feature sizes, their throughput may be high (up to millions of inferences per second) and the latency expectation can be very low (sub-millisecond response time for security/detection applications).

The medium learning models are machine learning inference models with a feature size above 100 that can go up to several hundred. They represent complex system performance models that can be used for dynamic system optimizations, such as tuning application latency, file system throughput, or high-speed channels. Application tuning and channel optimization are typically more tolerant of response time (seconds or tens of seconds), and their throughput is lower because only a finite number of channels and applications run in a system at any one time.

The deep learning models are high-dimensional models with a feature size exceeding 1000. They require the most compute power to train and to perform inference. They are used to forecast customer resource demand, such as network or data demand, in a cloud or enterprise environment. Fortunately, these kinds of resource forecasts are only needed at intervals of minutes to tens of minutes. However, since most cloud or enterprise environments differ from one another and change constantly, online training is a must for these deep learning models.
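The three classes can be summarized as a simple lookup on feature count. The sketch below is only an illustration: the function and constant names are hypothetical, and because the text leaves the range between "hundreds" and 1000 features unspecified, treating it as medium is an assumption made here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelClass:
    name: str
    response_time: str  # response-time scale described in the article


# Boundaries follow the feature-size ranges in the text. Counting exactly 100
# features, and anything between "hundreds" and 1000, as "medium" is an
# assumption; the article does not say where those cases belong.
SMALL_MAX_FEATURES = 100
DEEP_MIN_FEATURES = 1000


def classify_model(num_features: int) -> ModelClass:
    """Map a feature count to the small/medium/deep class described above."""
    if num_features <= 0:
        raise ValueError("num_features must be positive")
    if num_features < SMALL_MAX_FEATURES:
        return ModelClass("small", "sub-millisecond")
    if num_features <= DEEP_MIN_FEATURES:
        return ModelClass("medium", "seconds to tens of seconds")
    return ModelClass("deep", "minutes to tens of minutes")


if __name__ == "__main__":
    for n in (40, 300, 5000):
        cls = classify_model(n)
        print(n, cls.name, cls.response_time)
```

A lookup like this could help decide which inference path a given model should be routed to, though a real deployment would also weigh throughput and training requirements.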

Researching Small and Medium Models for Inference at the Edge

CAEML has been conducting a lot of research around the above three models.

The small learning models are mostly ensemble predictive classifiers (for failure prediction or application detection) or anomaly detection classifiers (for virus or ransomware detection). It has been shown that causal inference is a very useful tool for feature selection for these types of classifiers.

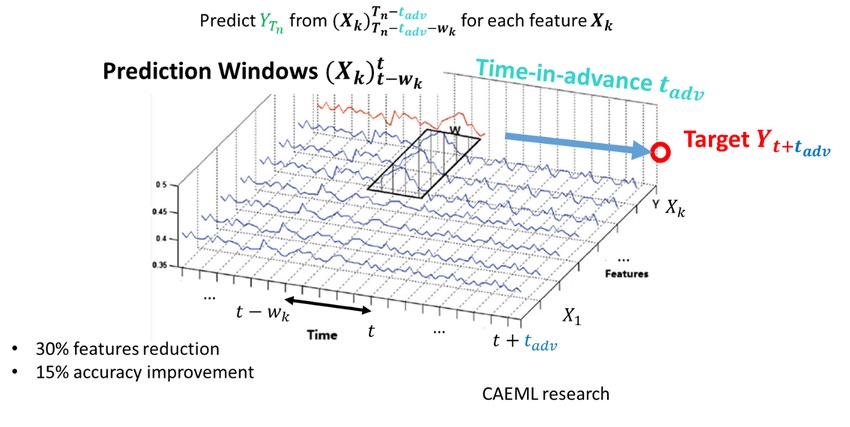

As shown in Figure 5, as sensor signals or system status are being collected, causal inference can be used to determine which of the signals are useful for the prediction classifier, as well as the optimal sampling window to feed into the classifier.

Figure 5: Causal inference for feature selection and sample window setting.

In CAEML’s research, it has been shown that causal inference can result in a reduction in needed sensor/system signals by removing unnecessary or dependent signals. The result is a net decrease in network traffic and storage needed for sensor signals.

Also, because the time interval for each signal can be optimally set, the accuracy of the prediction results improves. Better accuracy with less traffic was one of the key factors that led Hewlett Packard to deploy a proactive hardware failure prediction service in its Enterprise InfoSight solution, for example.
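To make the idea of pruning signals and choosing a time offset concrete, here is a minimal sketch. It does not implement causal inference; it swaps in a much simpler lagged-correlation screen as a stand-in, and the signal names, threshold, and data are all hypothetical.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / sqrt(vx * vy)


def best_lag(signal, target, max_lag):
    """Return (lag, r) for the lag at which signal[t - lag] best tracks target[t]."""
    best = (0, 0.0)
    for lag in range(max_lag + 1):
        past = signal[: len(signal) - lag]
        future = target[lag:]
        r = pearson(past, future)
        if abs(r) > abs(best[1]):
            best = (lag, r)
    return best


def screen_signals(signals, target, max_lag=5, min_abs_corr=0.5):
    """Keep only signals whose best lagged correlation clears the threshold."""
    kept = {}
    for name, values in signals.items():
        lag, r = best_lag(values, target, max_lag)
        if abs(r) >= min_abs_corr:
            kept[name] = lag
    return kept


if __name__ == "__main__":
    # Hypothetical data: failures follow temperature spikes two samples later.
    temperature = [0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 0, 0]
    failure = [0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1]
    fan_speed = [5] * 12  # constant, so it carries no information
    print(screen_signals({"temperature": temperature, "fan_speed": fan_speed}, failure))
```

In this toy data the constant signal is dropped and the temperature signal is kept with a lag of two samples. Correlation alone cannot separate cause from coincidence, which is the gap that a proper causal inference method is meant to close.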

Going forward, it has been observed that ensemble classifiers do not benefit from conventional AI hardware such as GPUs with vector processing.

Table 1: Relative execution time of an ensemble classifier on a multi-core processor (Sk-learn) vs. a GPU (CUDA-Tree). Larger values mean longer execution.

As shown in Table 1, 10-50K ensemble classification took longer on a GPU than on a multi-core CPU. This is because most ensemble classifiers induce a lot of branch divergence on the GPU, which significantly degrades performance. CAEML is now considering new proposals to investigate special hardware that can accelerate these kinds of classifiers for security applications at the edge.
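Branch divergence arises because GPU threads execute in lockstep groups (warps of 32 threads on Nvidia hardware), so when threads in one group take different branches, the paths are executed one after another. The sketch below simulates this in plain Python rather than CUDA: it counts how many distinct root-to-leaf paths the samples in each group take through a decision tree. The tree, thresholds, and samples are hypothetical.

```python
# A tiny decision tree as nested tuples: (feature_index, threshold, left, right).
# A leaf is just an integer class label. The tree is hypothetical.
TREE = (0, 0.5,
        (1, 0.5, 0, 1),
        (2, 0.5, 1, 0))


def path_taken(tree, sample):
    """Return the left/right decisions a sample makes, e.g. 'LR'."""
    path = []
    node = tree
    while isinstance(node, tuple):
        feature, threshold, left, right = node
        go_left = sample[feature] < threshold
        path.append("L" if go_left else "R")
        node = left if go_left else right
    return "".join(path)


def distinct_paths_per_warp(tree, samples, warp_size=32):
    """Count distinct tree paths within each consecutive group of samples.

    A count of 1 means every thread in the group would follow the same
    branches; higher counts mean more paths that must be serialized.
    """
    counts = []
    for start in range(0, len(samples), warp_size):
        warp = samples[start:start + warp_size]
        counts.append(len({path_taken(tree, s) for s in warp}))
    return counts


if __name__ == "__main__":
    uniform = [(0.1, 0.1, 0.1)] * 32
    mixed = [(0.1, 0.1, 0.1), (0.1, 0.9, 0.1),
             (0.9, 0.1, 0.1), (0.9, 0.1, 0.9)] * 8
    print(distinct_paths_per_warp(TREE, uniform))  # one shared path
    print(distinct_paths_per_warp(TREE, mixed))    # four paths in one group
```

An ensemble multiplies this effect, since each tree in the forest splits the same group of samples along different features.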

CAEML has also investigated using generative surrogate models for system performance prediction and optimization. Generative models are machine learning models that learn the joint distribution of the inputs and outputs together. For example: given an output from a system, what is the likely distribution of the inputs that will result in such an output?

Figure 6: A generative system model.

This is different from discriminative models, which learn the conditional probability of an output given a set of inputs. In other words: what will be the output given a set of inputs?

Figure 7: A discriminative system model.
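The two questions can be contrasted on a toy discrete system. In the sketch below, a joint probability table answers both the discriminative question (outputs given an input) and the generative question (inputs given an output). The table values and the labels "shallow", "deep", "fast", and "slow" are invented for illustration.

```python
# Hypothetical joint distribution p(input, output) for a toy system:
# input = queue depth setting, output = observed latency class.
JOINT = {
    ("shallow", "fast"): 0.30,
    ("shallow", "slow"): 0.10,
    ("deep", "fast"): 0.15,
    ("deep", "slow"): 0.45,
}


def p_output_given_input(joint, x):
    """Discriminative question: which outputs are likely for input x?"""
    total = sum(p for (xi, _), p in joint.items() if xi == x)
    return {y: p / total for (xi, y), p in joint.items() if xi == x}


def p_input_given_output(joint, y):
    """Generative question: which inputs are likely to have produced output y?"""
    total = sum(p for (_, yi), p in joint.items() if yi == y)
    return {x: p / total for (x, yi), p in joint.items() if yi == y}


if __name__ == "__main__":
    print(p_output_given_input(JOINT, "shallow"))
    print(p_input_given_output(JOINT, "slow"))
```

A model that only learns the first mapping cannot answer the second question, whereas a model of the joint distribution can answer both, which is what makes it useful for searching for inputs that produce a desired output.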

CAEML researchers from Georgia Tech published an excellent paper at DesignCon 2019 on using a generative polynomial chaos expansion surrogate model for performance prediction. Normally these generative models are very complex and can be limited in practice by the curse of dimensionality. However, a previous DesignCon paper by Hewlett Packard Enterprise showed that a high-dimensional system performance model can be mapped to a lower-dimensional principal component analysis (PCA) space. A generative surrogate model in PCA space would be an ideal method for handling medium learning performance tuning and prediction.

Figure 8: Speed up of optimization steps by optimizing in PCA space instead of original feature space.
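As a rough illustration of mapping features into PCA space, the sketch below finds the first principal component with power iteration and projects each sample onto it, using only the standard library. This is generic PCA, not the method from the papers mentioned above, and the sample data is invented so that three correlated features collapse onto a single axis.

```python
import math


def column_means(rows):
    n, d = len(rows), len(rows[0])
    return [sum(r[j] for r in rows) / n for j in range(d)]


def covariance(rows, means):
    """Sample covariance matrix of the rows (features in columns)."""
    n, d = len(rows), len(means)
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    return [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
            for i in range(d)]


def first_component(cov, iterations=200):
    """Dominant eigenvector of cov by power iteration (unit length)."""
    d = len(cov)
    v = [1.0 / math.sqrt(d)] * d  # fails only if this start is orthogonal to the answer
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    return v


def project(rows, means, component):
    """Coordinate of each row along the component, after centering."""
    return [sum((r[j] - means[j]) * component[j] for j in range(len(means)))
            for r in rows]


if __name__ == "__main__":
    # Hypothetical samples: three features that all move together.
    rows = [[t, 2.0 * t, -t] for t in (0.0, 1.0, 2.0, 3.0, 4.0)]
    means = column_means(rows)
    pc1 = first_component(covariance(rows, means))
    print(pc1)
    print(project(rows, means, pc1))
```

Here a three-dimensional search becomes a one-dimensional one with no loss of information. Real performance data would keep several components and discard only the low-variance directions.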

Deep Learning for Inference at the Edge

For high-dimensional deep learning models, CAEML researchers have looked into using deep Markov models (DMM) or long short-term memory (LSTM) networks for high-dimensional time series prediction. The problem is very similar to smart grid power demand forecasting, which involves thousands of households or hundreds of cities in a state.

Typically, an observation window is set up and demand is sampled at a regular interval to produce a demand forecast for the next few hours, up to 24 hours ahead. Because the resource demand of a complex cloud or enterprise system differs greatly between customers and changes over time, online training is necessary in addition to inference at the edge. This is the most compute-intensive case of edge inference and training.
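The observation-window setup can be sketched as a function that turns a regularly sampled demand series into training pairs. Each pair holds a window of past samples and the value a fixed number of steps ahead. The function name, parameters, and sample values below are hypothetical, and the forecasting model itself (DMM or LSTM) is out of scope for this sketch.

```python
def make_windows(series, window, horizon):
    """Pair each observation window with the value `horizon` steps after it.

    series  : demand samples taken at a regular interval
    window  : number of consecutive samples the model observes
    horizon : how many steps past the end of the window to forecast
    """
    if window <= 0 or horizon <= 0:
        raise ValueError("window and horizon must be positive")
    pairs = []
    last_start = len(series) - window - horizon
    for start in range(last_start + 1):
        observed = series[start:start + window]
        target = series[start + window + horizon - 1]
        pairs.append((observed, target))
    return pairs


if __name__ == "__main__":
    hourly_demand = [10, 12, 15, 14, 13, 16, 18]  # invented values
    for observed, target in make_windows(hourly_demand, window=3, horizon=2):
        print(observed, "->", target)
```

With hourly samples, a horizon of 24 would correspond to the day-ahead forecast described above. For online training, new pairs can be generated and fed to the model as fresh samples arrive.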

Figure 9 illustrates the complexity and difficulty of inference at the edge for complex compute, network, and storage systems. IoT devices can use a pre-trained model and set up an edge inference engine pipeline that handles simple inference at tremendous throughput on multi-threaded GPU vector processors.

Figure 9: Smart grid power consumption prediction showing dependency on time of day and day of week.

Compute, storage, and network systems, by contrast, need multiple types of inference (small, medium, and deep learning models) running at different time intervals (from milliseconds, to seconds, to tens of minutes). This mix is not ideal for multi-threaded vector processors, because the inference tasks are constantly switching between models.

As illustrated in Table 2 below, as the number of samples increases, GPU performance degrades faster than multi-core CPU performance. Therefore, it is important to consider the complexity of the inference at the edge before committing the necessary hardware to support the inference task.

| Number of samples (million) | Sk-learn on multi-core CPU (seconds) | CUDA-Forest on Nvidia GPU (seconds) | GPU time relative to CPU (X) |
| --- | --- | --- | --- |
| 1 | 4.4 | 13.1 | 2.9 |
| 2 | 8.1 | 31.1 | 3.8 |
| 4 | 15.2 | 53.0 | 3.5 |
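The arithmetic behind these timings can be checked with a short script. The sketch below recomputes the GPU-to-CPU time ratio for each row, along with how much each platform's time grows from 1 million to 4 million samples. The timings are copied from the table; the function names are hypothetical.

```python
# (samples in millions, CPU seconds, GPU seconds), copied from the table above.
ROWS = [(1, 4.4, 13.1), (2, 8.1, 31.1), (4, 15.2, 53.0)]


def gpu_to_cpu_ratio(cpu_seconds, gpu_seconds):
    """How many times longer the GPU run took than the CPU run."""
    return gpu_seconds / cpu_seconds


def growth(rows, column):
    """Factor by which a timing column grows from the first row to the last."""
    return rows[-1][column] / rows[0][column]


ratios = [round(gpu_to_cpu_ratio(cpu, gpu), 2) for _, cpu, gpu in ROWS]
cpu_growth = round(growth(ROWS, 1), 2)
gpu_growth = round(growth(ROWS, 2), 2)

if __name__ == "__main__":
    print("GPU/CPU ratios:", ratios)             # [2.98, 3.84, 3.49]
    print("CPU growth for 4x samples:", cpu_growth)  # 3.45
    print("GPU growth for 4x samples:", gpu_growth)  # 4.05
```

The recomputed ratios agree with the table's last column to within rounding (the first row works out to about 2.98 against the listed 2.9, which may reflect truncation or unrounded source timings). Over a fourfold increase in samples, CPU time grows about 3.45 times while GPU time grows about 4.05 times, consistent with the GPU falling further behind as the workload scales.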

CAEML researchers have provided the necessary algorithms and methodologies for developing edge inference for all three classes of models. However, it is up to individual member companies to apply these technologies and tailor them for their own deployment.

Chris Cheng is a distinguished technologist on the hardware machine learning team at Hewlett Packard's enterprise storage division. He is also chairman of CAEML’s industrial advisory board and co-chair of the machine learning track at DesignCon.
