3D Scanners Capture Clear Images in Darkness

January 2, 2014

Capturing 3D scans in darkness or low light was once nearly impossible. Take Microsoft's first Kinect, for example: it works best in dim conditions with even lighting throughout the room. Forget about using it in a dark room, because the sensor can't find the subject unless, of course, the Kinect has been modified with a night-vision lens. MIT researchers have recently tackled that problem by developing two new 3D scanners capable of capturing images in total darkness.

The first scanner, dubbed the First-Photon Imaging system, works much like a lidar scanner (found in autonomous vehicles and some land-surveying equipment). A lidar scanner gauges depth by bouncing laser light off discrete points arranged in a grid and measuring how long the photons take to return; those timings are then combined into an image. MIT's system fires a single laser at one grid position, pulse after pulse, until the first reflected photon is detected, and only then moves on to the next point, whereas conventional lidar relies on many photon returns per point.
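The depth calculation behind lidar-style scanning comes down to simple arithmetic: light travels to the target and back, so the distance is the speed of light times the round-trip time, divided by two. The Python sketch below applies that formula to a small grid of round-trip times. The function names and sample timings are hypothetical, chosen only for illustration, and the sketch leaves out the single-photon detection hardware entirely.

```python
# Speed of light in a vacuum, in meters per second.
SPEED_OF_LIGHT = 299_792_458.0


def depth_from_round_trip(round_trip_s: float) -> float:
    """Distance to the target: the light travels out and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def depth_grid(round_trip_grid: list[list[float]]) -> list[list[float]]:
    """Convert a grid of per-point round-trip times (seconds) into depths (meters)."""
    return [[depth_from_round_trip(t) for t in row] for row in round_trip_grid]


# Hypothetical 2x2 scan, one round-trip time per grid point, in nanoseconds.
times_ns = [[10.0, 20.0], [12.0, 30.0]]
depths = depth_grid([[t * 1e-9 for t in row] for row in times_ns])
print(depths)  # a 10 ns round trip corresponds to roughly 1.5 m
```

A scanner like the one described would fill in such a grid one point at a time, which is why the time spent at each grid position matters for overall speed and energy use.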

The more reflective the target, the fewer laser pulses are needed before a photon comes back, which makes the system highly energy efficient. Impressive as that is, the real magic lies in the researchers' algorithm. Some detected photons are stray returns rather than true reflections from the target, producing what's known as background noise. The algorithm filters out that noise and stitches the photon detections from each pixel together into a high-resolution 3D image.
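To make the two ideas in this paragraph concrete, here is a rough Python sketch under clearly stated assumptions. It scores each pixel's reflectivity as 1 / (number of pulses before the first detected photon), and it treats a depth reading that disagrees sharply with its 3x3 neighbourhood as likely background noise, replacing it with the neighbourhood median. Both the scoring rule and the median filter are simple stand-ins chosen for illustration; they are not MIT's published algorithm, and the function names and threshold are hypothetical.

```python
import statistics


def reflectivity_proxy(pulse_counts: list[list[int]]) -> list[list[float]]:
    """Hypothetical reflectivity score per pixel.

    Fewer laser pulses before the first detected photon suggests a more
    reflective surface, so this simply uses 1 / pulse_count.
    """
    return [[1.0 / n for n in row] for row in pulse_counts]


def censor_noise(depth: list[list[float]], threshold: float) -> list[list[float]]:
    """Replace depth readings that disagree strongly with their neighbours.

    A reading farther than `threshold` from the median of its 3x3 neighbourhood
    is treated as likely background noise and replaced by that median. This is
    a simple stand-in filter, not MIT's published algorithm.
    """
    rows, cols = len(depth), len(depth[0])
    cleaned = [row[:] for row in depth]
    for r in range(rows):
        for c in range(cols):
            neighbours = [
                depth[i][j]
                for i in range(max(0, r - 1), min(rows, r + 2))
                for j in range(max(0, c - 1), min(cols, c + 2))
                if (i, j) != (r, c)
            ]
            if not neighbours:  # a 1x1 image has nothing to compare against
                continue
            median = statistics.median(neighbours)
            if abs(depth[r][c] - median) > threshold:
                cleaned[r][c] = median
    return cleaned


# Hypothetical 3x3 scan: a flat surface 2 m away with one stray detection at 9 m.
counts = [[1, 4, 2], [10, 1, 5], [2, 2, 8]]
depth = [[2.0, 2.0, 2.0], [2.0, 9.0, 2.0], [2.0, 2.0, 2.0]]
print(reflectivity_proxy(counts))
print(censor_noise(depth, threshold=0.5))
```

The filter relies on the same intuition the article describes: real surfaces tend to be spatially consistent, so an isolated reading that disagrees with everything around it is more likely noise than scene detail.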

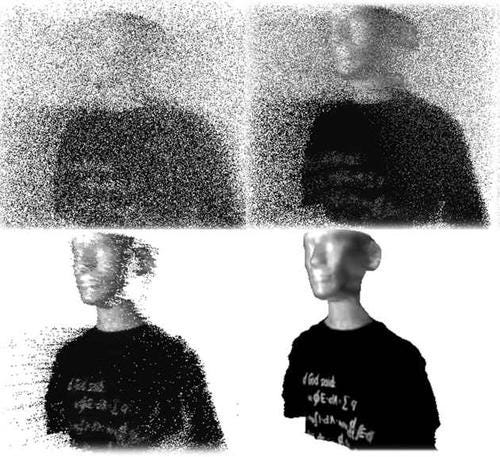

The second 3D scanner to come out of MIT, known simply as the nano-camera, can be used for a host of applications, including collision avoidance, medical imaging, and even gesture-based interactive gaming.

The camera uses the same time-of-flight (ToF) technology found in Microsoft's newest Kinect sensor, in which an object's position is calculated from the time light takes to bounce off a target and return to the camera. Unlike other ToF devices, MIT's nano-camera isn't affected by adverse conditions such as rain, fog, darkness, or even the translucence of an object. To accomplish this feat, the researchers borrowed a real-time encoding technique from the telecommunications industry, which calculates the distance and depth of a target from a single reflected signal, even while the target is moving.
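The article doesn't spell out the encoding, but one generic way to use a code for ranging, familiar from radar and communications, is to modulate the light with a known binary code and find the delay at which the returning signal best matches it. The Python sketch below shows that cross-correlation idea, assuming a pseudo-random ±1 code, circular delays, and a 1 ns sample period. It illustrates the general technique only, not the nano-camera's actual coding scheme, and all names and parameters are hypothetical.

```python
import random

# Speed of light in a vacuum, in meters per second.
SPEED_OF_LIGHT = 299_792_458.0


def make_code(length: int, seed: int = 0) -> list[float]:
    """Hypothetical pseudo-random +1/-1 code used to modulate the light source."""
    rng = random.Random(seed)
    return [rng.choice((-1.0, 1.0)) for _ in range(length)]


def estimate_delay(code: list[float], received: list[float]) -> int:
    """Return the circular shift (in samples) where received best matches code."""
    n = len(code)
    best_shift, best_score = 0, float("-inf")
    for shift in range(n):
        # Correlate the known code against the received signal shifted by `shift`.
        score = sum(code[i] * received[(i + shift) % n] for i in range(n))
        if score > best_score:
            best_shift, best_score = shift, score
    return best_shift


def distance_from_delay(shift: int, sample_period_s: float) -> float:
    """Convert a round-trip delay in samples into a one-way distance in meters."""
    return SPEED_OF_LIGHT * shift * sample_period_s / 2.0


# Simulated return: the code delayed by 20 samples, attenuated, plus Gaussian noise.
code = make_code(127)
true_shift = 20
noise = random.Random(1)
received = [
    0.3 * code[(i - true_shift) % len(code)] + noise.gauss(0.0, 0.1)
    for i in range(len(code))
]

shift = estimate_delay(code, received)
print(shift, distance_from_delay(shift, 1e-9))
```

Because a pseudo-random code correlates strongly with itself only at the correct alignment, the peak stands out even when the returned signal is weak and noisy.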

Back in 2011, the same group of researchers developed a trillion-frame-per-second femto-camera that was able to capture a single pulse of light traveling through a scene. It did so by illuminating the scene with femtosecond pulses of light and using lab-grade optical equipment to capture an image with each pulse.
