Company also announces first Omniverse SaaS cloud service, AI enhancements.

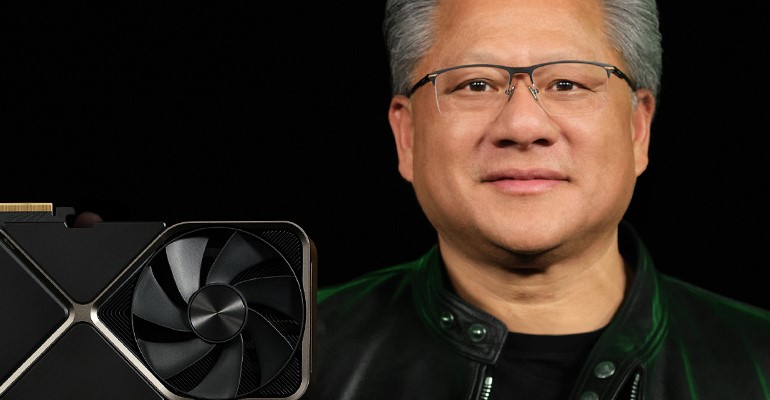

Nvidia made several announcements at its GTC event this week, headlined by its GeForce RTX 40 Series GPUs based on the new Ada Lovelace architecture. In his keynote speech, Nvidia CEO Jensen Huang said the GPUs would provide a substantial performance boost that would benefit developers of games and other simulated environments.

During the presentation, Huang put the new GPU through its paces with a fully interactive demo of Racer RTX, a simulation that is entirely ray traced, with all the action physically modeled. Ada’s advancements include a new streaming multiprocessor, an RT Core with twice the ray-triangle intersection throughput, and a new Tensor Core featuring the Hopper FP8 Transformer Engine, delivering 1.4 petaflops of tensor processing power.

Ada also introduces the latest version of NVIDIA DLSS technology, DLSS 3, which uses AI to generate entirely new frames, comparing each new frame with prior frames to understand how the scene is changing. According to Nvidia, this capability improves game performance by up to 4x over brute-force rendering.

The GeForce RTX 40 Series will come in several configurations. The top-end RTX 4090, aimed at high-performance gaming, will sell for $1,599 starting in mid-October. The GeForce RTX 4080 will arrive in November in two configurations. The GeForce RTX 4080 16GB, priced at $1,199, has 9,728 CUDA cores and 16GB of high-speed Micron GDDR6X memory.

Nvidia will also offer the GeForce RTX 4080 in a 12GB configuration with 7,680 CUDA cores for $899.

Omniverse Cloud SaaS

The company also announced new cloud services to support AI workflows. Its first software- and infrastructure-as-a-service offering, NVIDIA Omniverse Cloud, enables artists, developers and enterprise teams to design, publish, operate and experience metaverse applications anywhere. Using Omniverse Cloud, individuals and teams can design and collaborate on 3D workflows without the need for any local compute power.

Omniverse Cloud services run on the Omniverse Cloud Computer, a computing system made up of NVIDIA OVX for graphics and physics simulation, NVIDIA HGX for advanced AI workloads, and the NVIDIA Graphics Delivery Network (GDN), a global-scale distributed data center network that delivers high-performance, low-latency metaverse graphics at the edge.

Rise of LLMs

In his keynote speech, Huang also noted the growing role of large language models (LLMs) in AI applications, where they power processing engines used in social media, digital advertising, e-commerce and search. He added that LLMs based on the Transformer deep learning model, first introduced in 2017, now drive leading AI research because they can learn to understand human language without supervision or labeled datasets.

To make it easier for researchers to apply this “incredible” technology to their work, Huang announced the NeMo LLM Service, an NVIDIA-managed cloud service for adapting pretrained LLMs to perform specific tasks. For drug and bioscience researchers, Huang also announced BioNeMo LLM, a service to create LLMs that understand chemicals, proteins, DNA and RNA sequences.

Huang announced that NVIDIA is working with The Broad Institute, the world’s largest producer of human genomic information, to make NVIDIA Clara libraries, such as NVIDIA Parabricks, the Genome Analysis Toolkit, and BioNeMo, available on Broad’s Terra Cloud Platform.

To power these AI applications, Nvidia will start shipping its NVIDIA H100 Tensor Core GPU, with Hopper’s next-generation Transformer Engine, in the coming weeks. According to the company, partners building systems include Atos, Cisco, Dell Technologies, Fujitsu, GIGABYTE, Hewlett Packard Enterprise, Lenovo and Supermicro. In addition, Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure will begin deploying H100-based cloud instances next year.

Powering AV systems

For autonomous vehicles, Huang introduced DRIVE Thor, which combines Hopper’s Transformer Engine, the Ada GPU, and the Grace CPU.

The Thor superchip delivers 2,000 teraflops of performance. It replaces Atlan on the DRIVE roadmap and provides a seamless transition from DRIVE Orin, which delivers 254 TOPS and is currently in production vehicles. According to Huang, the Thor processor will also power robotics, medical instruments, industrial automation and edge AI systems.

Spencer Chin is a Senior Editor for Design News covering the electronics beat. He has many years of experience covering developments in components, semiconductors, subsystems, power, and other facets of electronics from both a business/supply-chain and technology perspective. He can be reached at [email protected].
