The third installment of this MATLAB video series examines fusing sensor inputs from both a GPS and an IMU.

The importance of sensor fusion is growing as more applications require the combination of data from different sensor inputs. Self-driving cars, radar tracking systems, and the IoT are some key applications.

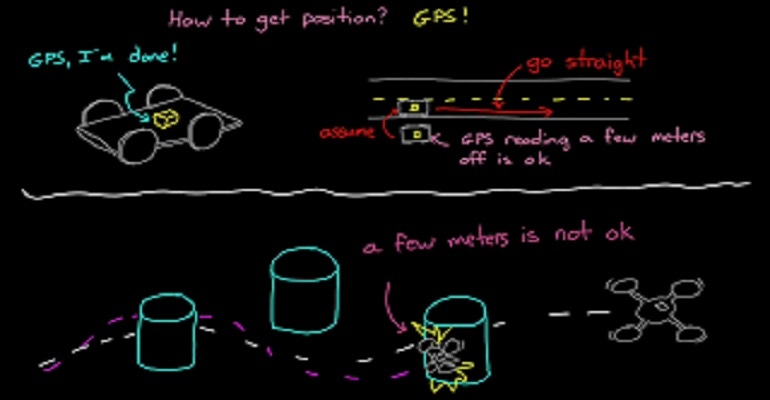

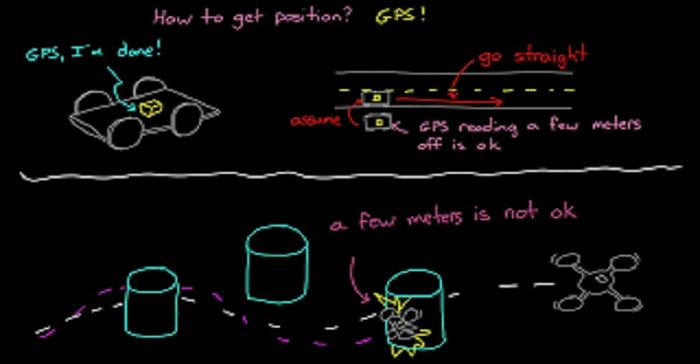

In the third installment of this video series, MATLAB examines how input from a GPS and an IMU can be fused to provide more accurate position estimates than GPS alone. The video discusses the pose algorithm, which accounts for both orientation and position. It shows, step by step, how the pose algorithm not only calculates orientation and position more accurately but can also show what each sensor contributes, which aids sensor selection. The data obtained through the pose algorithm is particularly useful for applications that need extremely precise location information, such as drones.
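To make the idea of fusion concrete, here is a minimal sketch in Python rather than MATLAB. It is not taken from the video and is not the pose algorithm it describes: it assumes a one-dimensional, position-only Kalman filter in which IMU acceleration drives the prediction and occasional GPS fixes correct it. The function names (`fuse_gps_imu`, `simulate`, `rms`), the noise levels, and the sample rates are all illustrative assumptions.

```python
import math
import random


def fuse_gps_imu(accels, gps_fixes, dt, accel_var, gps_var):
    """1-D Kalman filter sketch (hypothetical helper, not the video's code).

    accels[k]    : IMU acceleration sample at step k
    gps_fixes[k] : GPS position at step k, or None when no fix arrived
    Returns the fused position estimate at every step.
    """
    pos, vel = 0.0, 0.0
    # Symmetric 2x2 covariance of [pos, vel], stored as three numbers.
    p00, p01, p11 = 1.0, 0.0, 1.0
    estimates = []
    for a, z in zip(accels, gps_fixes):
        # Predict: integrate the IMU acceleration (dead reckoning).
        pos += vel * dt + 0.5 * a * dt * dt
        vel += a * dt
        # P = F P F^T + Q with F = [[1, dt], [0, 1]] and
        # Q = accel_var * G G^T, where G = [dt^2 / 2, dt].
        n00 = p00 + 2.0 * dt * p01 + dt * dt * p11 + 0.25 * dt**4 * accel_var
        n01 = p01 + dt * p11 + 0.5 * dt**3 * accel_var
        n11 = p11 + dt * dt * accel_var
        p00, p01, p11 = n00, n01, n11
        if z is not None:
            # Update: GPS observes position only, so H = [1, 0].
            s = p00 + gps_var            # innovation variance
            k0, k1 = p00 / s, p01 / s    # Kalman gain
            residual = z - pos
            pos += k0 * residual
            vel += k1 * residual
            # P = (I - K H) P
            p00, p01, p11 = (1.0 - k0) * p00, (1.0 - k0) * p01, p11 - k1 * p01
        estimates.append(pos)
    return estimates


def simulate(steps=500, dt=0.1, accel_std=0.2, gps_std=3.0, gps_every=10, seed=0):
    """Generate synthetic truth, noisy IMU samples, and sparse noisy GPS fixes."""
    rng = random.Random(seed)
    true_pos, true_vel, a_true = 0.0, 0.0, 0.5
    truth, accels, fixes = [], [], []
    for k in range(steps):
        true_pos += true_vel * dt + 0.5 * a_true * dt * dt
        true_vel += a_true * dt
        truth.append(true_pos)
        accels.append(a_true + rng.gauss(0.0, accel_std))
        has_fix = k % gps_every == 0
        fixes.append(true_pos + rng.gauss(0.0, gps_std) if has_fix else None)
    return truth, accels, fixes


def rms(errors):
    """Root-mean-square of a list of errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


if __name__ == "__main__":
    truth, accels, fixes = simulate()
    fused = fuse_gps_imu(accels, fixes, dt=0.1, accel_var=0.2**2, gps_var=3.0**2)
    gps_err = [z - t for z, t in zip(fixes, truth) if z is not None]
    print("GPS-only RMS error:", rms(gps_err))
    print("Fused RMS error:   ", rms([f - t for f, t in zip(fused, truth)]))
```

Running the script prints the position error of the raw GPS fixes next to that of the fused estimate on synthetic data. A full pose estimator would also track orientation in three dimensions, typically with gyroscope and magnetometer data, which this sketch leaves out.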

You can view the video here.

Spencer Chin is a Senior Editor for Design News covering the electronics beat. He has many years of experience covering developments in components, semiconductors, subsystems, power, and other facets of electronics from both a business/supply-chain and technology perspective. He can be reached at [email protected].

