July 14, 2015

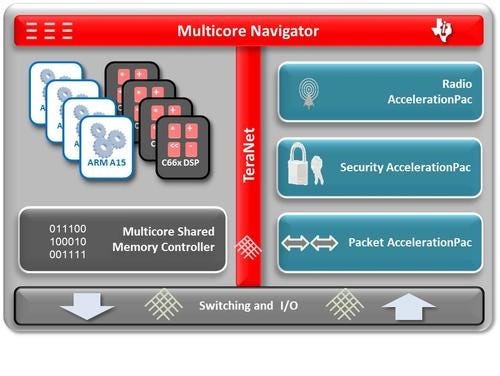

Thirty years ago, the digital signal processor (DSP) began as a standalone device for processing audio signals. The electronics industry and technology have changed tremendously, and DSPs have too, morphing into small-scale and massively parallel multicore DSPs and combining with general-purpose processors (GPP) and other types of processing cores in heterogeneous system-on-a-chip (SoC) devices.

Along the way, an extensive ecosystem of software support tools and programming aids has emerged and simplified the task of integrating DSPs into new systems and developing new application programs. In fact, programmers who have developed GPP-based systems have discovered a host of DSP tools that are quite similar to the GPP tools with which they are familiar. As a result, DSPs have become an essential cog in many of the most exciting new applications emerging today, such as machine vision, automotive and infotainment systems, home and industrial automation, video encoding/decoding, biometrics, high-performance computing, and many advanced avionics and defense systems.

A Math Whiz

DSPs excel at mathematically intensive processing. A DSP's bread-and-butter operations are add, subtract, multiply, and accumulate, and it performs them quickly and with very little power. As a result, DSPs are deployed in a wide range of applications that contain a great many computational loops, such as analytics, Fast Fourier Transforms (FFT), matrix math, and others. This kind of processing comes in handy when real-world signals such as audio, vision, and video must be processed in real-time systems with little or no latency.
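To make the multiply-and-accumulate pattern concrete, here is a minimal sketch in C of a dot product, the kind of loop at the heart of filters and matrix math. The function name `mac_dot` and the choice of 16-bit samples with a 64-bit accumulator are illustrative assumptions, not taken from any particular DSP or library.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: dot product of two 16-bit sample buffers.
 * Every pass through the loop is one multiply-accumulate (MAC):
 * multiply a pair of samples, then add the product to a running sum. */
int64_t mac_dot(const int16_t *x, const int16_t *h, size_t n)
{
    int64_t acc = 0; /* wide accumulator so the running sum does not overflow */
    for (size_t i = 0; i < n; i++) {
        acc += (int32_t)x[i] * (int32_t)h[i];
    }
    return acc;
}
```

On a GPP this compiles to ordinary multiply and add instructions. The point of a DSP is that hardware built around this pattern can execute loops of this shape very efficiently.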

Besides their low power consumption, the small footprint of DSPs is very valuable to developers of portable and handheld systems, which typically run on a battery. Low power and small footprint go hand in hand in these portable systems.

Although they perform a specialized type of processing, DSPs are also very versatile in how they function in applications. In many systems, a DSP or an array of DSPs operates relatively independently, but in many others one or more DSP cores function in a complementary role to a GPP. For example, DSPs might serve as accelerators or co-processors, offloading mathematically intense, repetitive processing from the system's GPP so that its processing capacity is reserved for overall operations and housekeeping tasks.

Easy to C

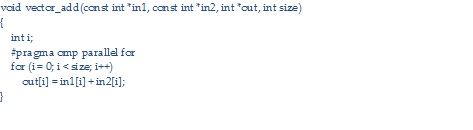

The days of programmers laboriously toiling over DSP assembly code are long gone. Today, many DSPs are supported by the most prevalent programming languages, including C and C++. These high-level languages, which many GPP application engineers are quite familiar with, abstract the low-level computational processes that underlie DSP processing, so programmers can adopt a top-down vision of the system's operation. In addition, some C/C++ compilers have been developed in tandem with specific DSP chips so that the two -- the compiler and the hardware architecture -- complement each other. Compiled code can be more readily optimized to the DSP platform.

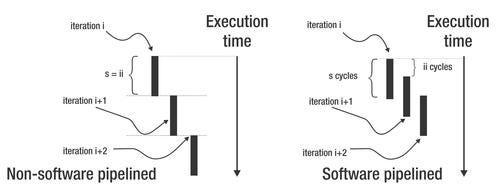

In addition to the optimization made possible by this hand-in-glove relationship between compiler and silicon, some C/C++ compilers for DSPs include a number of automatic optimization capabilities that other compilers may not. For example, one such compiler optimization feature might be the automatic generation of software pipelined loops. Since DSP software usually includes a great deal of loop processing, overlapping the execution of multiple loop iterations can have a huge impact on program performance.

Without software pipelining, each iteration of the loop is processed to completion before the next iteration begins executing. When the compiler utilizes software pipelining, multiple iterations of a loop run at the same time, exploiting many, if not all, of the functional units on the DSP during every cycle of the loop and resulting in significantly accelerated program performance.
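The schedule a pipelining compiler produces can be sketched by hand in C. The example below is only an illustration under stated assumptions: the function names, the three-stage split (load, multiply, store), and the `gain` parameter are hypothetical, and a real compiler performs this transformation at the instruction level rather than in source code.

```c
#include <stddef.h>

/* Plain loop: each iteration loads, multiplies, and stores
 * before the next iteration begins. */
void scale_plain(const int *x, int *y, size_t n, int gain)
{
    for (size_t i = 0; i < n; i++) {
        int loaded = x[i];          /* stage 1: load     */
        int product = loaded * gain; /* stage 2: multiply */
        y[i] = product;             /* stage 3: store    */
    }
}

/* Hand-pipelined version: the loop body works on three
 * different iterations at once, one per stage. */
void scale_pipelined(const int *x, int *y, size_t n, int gain)
{
    if (n < 2) { /* too short to fill the pipeline */
        scale_plain(x, y, n, gain);
        return;
    }

    /* Prologue: fill the pipeline. */
    int loaded = x[0];
    int product = loaded * gain; /* multiply for iteration 0 */
    loaded = x[1];               /* load for iteration 1     */

    /* Kernel: store iteration i, multiply iteration i + 1,
     * load iteration i + 2. The three statements are independent
     * of one another, so separate functional units could execute
     * them in the same cycle. */
    size_t i;
    for (i = 0; i + 2 < n; i++) {
        y[i] = product;
        product = loaded * gain;
        loaded = x[i + 2];
    }

    /* Epilogue: drain the two iterations still in flight. */
    y[i] = product;
    y[i + 1] = loaded * gain;
}
```

Run on a GPP, both functions produce the same output and the C statements still execute one after another. The sketch only shows how work from neighboring iterations is interleaved so that hardware with several functional units has something for each unit to do in every pass through the kernel.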

Another technique used by advanced compilers is loop unrolling, which, among other things, lets them take advantage of the single instruction/multiple data (SIMD) functionality in some DSPs, greatly increasing performance. Loop unrolling duplicates the instructions in a loop so that the work of several iterations sits side by side. The compiler can then use SIMD instructions on the DSP, with one SIMD instruction executing the same part of the loop for multiple iterations at once. In this way, a program benefits from the DSP's SIMD capabilities without developers coding them in manually.
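As a rough illustration of what unrolling does, here is a hand-unrolled loop in C. The function names and the unroll factor of four are assumptions chosen for the sketch. In practice the compiler picks the factor and emits the SIMD instructions itself.

```c
#include <stddef.h>

/* Original loop: one addition per iteration. */
void add_rolled(const int *a, const int *b, int *out, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        out[i] = a[i] + b[i];
    }
}

/* Unrolled by four: the body now holds four independent additions
 * on adjacent elements, which is the shape a compiler can map onto
 * a single four-lane SIMD add where the hardware offers one. */
void add_unrolled4(const int *a, const int *b, int *out, size_t n)
{
    size_t i = 0;
    for (; i + 4 <= n; i += 4) {
        out[i]     = a[i]     + b[i];
        out[i + 1] = a[i + 1] + b[i + 1];
        out[i + 2] = a[i + 2] + b[i + 2];
        out[i + 3] = a[i + 3] + b[i + 3];
    }
    /* Remainder loop for the last n % 4 elements. */
    for (; i < n; i++) {
        out[i] = a[i] + b[i];
    }
}
```

Both versions compute the same result. The unrolled form simply exposes four identical, independent operations per pass, which is what makes the SIMD substitution possible.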
