Fog can be a huge deal for autonomous vehicle sensors like radar, lidar, and visual cameras. Testing and especially simulation can help.

May 11, 2021

Fog is a major challenge for autonomous vehicles (AVs). The usual suspects when it comes to AV sensors – radar, lidar, visual cameras – can get confused. Fog is also hard to test in the field because most parts of the world are rarely foggy.

That’s why the capability to verify perception systems virtually with accurate fog models is so important. But this capability requires a combination of simulation and testing of hardware and software. The recent collaboration between Ansys and FLIR Systems is one example of successful system integration. Ansys provides simulation tools while FLIR has technologies such as thermal camera sensors that enhance perception and awareness.

To better understand the test and verification challenges in fog perception systems, Design News reached out to Sandra Gely, application engineering manager at Ansys, and Kelsey Judd, technical project manager at FLIR Systems. What follows is an edited version of that discussion.

Design News: How does perception and awareness simulation technology differ from more ordinary simulations of AV sensors (e.g., radar, lidar, visual cameras)? In general, what has to be added to the models to deal with fog? Is it similar to the 3D shading models used by developers in the gaming community?

Sandra Gely (Ansys): This solution is different from other 3D shading models used in the gaming community in that extensive physics-based simulation is required. In other words, the propagation of light in the scene is based on a physics formula. Optical properties are defined for all the elements in the scene in order to simulate the accurate behavior of the light when it reflects off different objects. The simulation uses a dedicated and randomized algorithm to propagate rays forward from source to sensor or from sensor to source. The algorithm is required for light appearance, photometric analysis of intensity distribution, or sensor analysis.

The fog is modeled as a diffuse material in the air. We are defining the optical properties of a particle, as well as the size, distribution, and density for all the particles. When we add this fog model in the scene, the light will propagate in this medium and be scattered due to the interaction with the particles in the medium.
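As a rough illustration of the idea described above (not the SPEOS implementation), the following Python sketch treats fog as identical spherical droplets and uses random sampling to estimate how many rays cross a stretch of fog without being scattered. The function names, the droplet radius, the droplet density, and the extinction efficiency of 2 (a common approximation for droplets much larger than the wavelength of visible light) are all assumptions chosen for illustration. A real fog model would also use a distribution of droplet sizes rather than a single size.

```python
import math
import random


def extinction_coefficient(number_density_per_m3: float,
                           radius_m: float,
                           extinction_efficiency: float = 2.0) -> float:
    """Extinction coefficient (1/m) for identical spherical droplets.

    extinction_efficiency = 2.0 is an assumed large-particle approximation.
    """
    cross_section_m2 = extinction_efficiency * math.pi * radius_m ** 2
    return number_density_per_m3 * cross_section_m2


def unscattered_fraction(beta_per_m: float,
                         distance_m: float,
                         n_rays: int = 100_000,
                         seed: int = 0) -> float:
    """Monte Carlo estimate of the fraction of rays that travel
    distance_m without interacting with any droplet."""
    rng = random.Random(seed)
    survived = 0
    for _ in range(n_rays):
        # Distance to the first interaction is exponentially distributed.
        free_path_m = rng.expovariate(beta_per_m)
        if free_path_m > distance_m:
            survived += 1
    return survived / n_rays


# Hypothetical fog: 100 droplets per cubic centimeter, 5 micrometer radius.
beta = extinction_coefficient(number_density_per_m3=1e8, radius_m=5e-6)
estimate = unscattered_fraction(beta, distance_m=50.0)
analytic = math.exp(-beta * 50.0)
print(f"beta = {beta:.4f} 1/m, Monte Carlo = {estimate:.3f}, analytic = {analytic:.3f}")
```

The sketch only follows rays up to their first interaction. A full physics-based renderer would continue tracing each scattered ray in a new direction, which is where the optical properties of the particles come into play.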

Kelsey Judd (FLIR): As Sandra mentions, the primary difference here is that a physics-based model is needed, whereas many other simulation tools are not physics-based. To illustrate one benefit of physics-based simulation, imagine a simulated scene of two pedestrians on a foggy road, where one is 50 meters away and the other is 100 meters away. By simulating the physics, designers can accurately model that the farther pedestrian will be more difficult to detect because the light emitted from that person has to travel through twice as much fog as the light from the person who is only 50 meters away. This type of detail is likely to be missed by more artistic simulation renderings, but it is crucial for assessing the performance of different sensor combinations in real-world situations.
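The two-pedestrian comparison can be made concrete with the Beer-Lambert law, a standard first-order model for attenuation along a straight path through a uniform medium. The snippet below is an illustrative sketch only and is not drawn from Ansys or FLIR tooling. The function name is hypothetical, and the extinction coefficient is an assumed value, since real fog varies widely.

```python
import math


def transmittance(distance_m: float, extinction_per_m: float) -> float:
    """Fraction of light that survives a straight path through uniform fog."""
    return math.exp(-extinction_per_m * distance_m)


# Assumed extinction coefficient, for illustration only.
BETA_PER_M = 0.03

near = transmittance(50.0, BETA_PER_M)   # pedestrian at 50 meters
far = transmittance(100.0, BETA_PER_M)   # pedestrian at 100 meters
print(f"50 m: {near:.3f}   100 m: {far:.3f}   ratio far/near: {far / near:.3f}")
```

Under this model, doubling the path length squares the transmittance rather than halving it, so the signal from the farther pedestrian falls off much faster than distance alone would suggest.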

DN: Were the Ansys/FLIR perception algorithm and test systems verified in actual fog chambers or real-world foggy conditions?

Sandra Gely (Ansys): FLIR has evaluated their system in actual fog chambers and also in real-world foggy conditions. The correlation study to validate the fog model in Ansys software is based on the analysis in the fog chambers in order to have a controlled fog environment that can be identically reproduced in Ansys SPEOS software.

Fog is a well-known edge case for autonomous vehicles. OEMs know that they need efficient AV/ADAS systems to navigate fog, which requires physical or virtual tests. Virtual tests are easier to set up and don’t depend on the weather.

Kelsey Judd (FLIR): The particular results of this paper are based on the correlation between the simulation model and a manmade fog chamber. FLIR also has several diverse datasets used to train and validate system performance, including many instances of real-world fog. It’s also worth noting that system developers who use physics-based simulation have the ability to adjust several fog parameters, enabling Monte Carlo simulations to stress test system performance.
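A Monte Carlo stress test of the kind mentioned here might look roughly like the Python sketch below. It is a toy stand-in, not FLIR's or Ansys's workflow: the parameter ranges, the `min_transmittance` threshold, and the pass/fail rule are all hypothetical, and a real study would replace `detection_margin` with rendered images fed through an actual perception algorithm.

```python
import math
import random


def detection_margin(beta_per_m: float,
                     distance_m: float,
                     min_transmittance: float) -> float:
    """Toy pass/fail metric: positive when enough light reaches the sensor.

    Stands in for a real perception pipeline (hypothetical).
    """
    return math.exp(-beta_per_m * distance_m) - min_transmittance


def stress_test(n_trials: int = 10_000, seed: int = 1) -> list[tuple[float, float]]:
    """Randomly sample fog density and target distance; return failing cases."""
    rng = random.Random(seed)
    failing_cases = []
    for _ in range(n_trials):
        beta = rng.uniform(0.005, 0.06)     # assumed fog density range, 1/m
        distance = rng.uniform(10.0, 120.0)  # assumed target distance range, m
        if detection_margin(beta, distance, min_transmittance=0.05) < 0:
            failing_cases.append((beta, distance))
    return failing_cases


failures = stress_test()
print(f"{len(failures)} of 10000 sampled scenarios fell below the threshold")
```

Collecting the failing parameter combinations, rather than only a pass rate, shows which regions of fog density and distance deserve closer study in a fog chamber or on the road.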

DN: What tools can a designer use to verify fog perception systems? How do these tools fit into the designers’ typical flow of development?

Sandra Gely (Ansys): Engineers can use Ansys SPEOS to generate the image from their camera at different positions in the typical automotive scene, including fog. Then they can run this series of images into their perception algorithm.

Kelsey Judd (FLIR): By using physics-based simulation, system designers can create a diverse set of training and test scenarios that can be difficult to capture in the real world, such as running a variety of scenarios in fog at different times of the day or with different oncoming traffic patterns. By allowing developers to create and modify test datasets with a variety of sensors, including FLIR thermal cameras, SPEOS can help stress-test ADAS and autonomous vehicle system performance and ultimately drive improvements in the safe performance of these systems when used in a holistic design and verification environment.

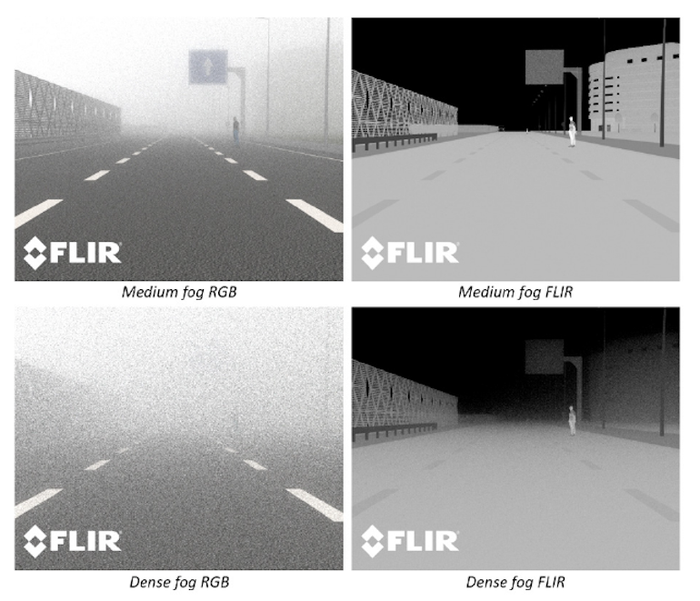

In real-world testing, FLIR found that long-wave infrared imaging is very effective in foggy conditions compared to other sensing modalities such as visible cameras and lidar. The ability to test that in a simulated environment is critical to accelerating the development of the multispectral neural networks required to produce functionally safe ADAS and autonomous vehicle systems.

John Blyler is a Design News senior editor, covering the electronics and advanced manufacturing spaces. With a BS in Engineering Physics and an MS in Electrical Engineering, he has years of hardware-software-network systems experience as an editor and engineer within the advanced manufacturing, IoT and semiconductor industries. John has co-authored books related to system engineering and electronics for IEEE, Wiley, and Elsevier.
