Research center and industry join forces to defend against ransomware at the intelligent edge with custom hardware and machine learning.

July 7, 2021

The recent ransomware attack on Colonial Pipeline is another painful reminder of how vulnerable we are to such attacks and how difficult it is to defend our infrastructure against them. Ransomware as a service (RaaS) is a thriving industry in many dark corners of the world, and defending against it at the intelligent edge is particularly difficult. The challenges include zero-day detection with no previous example or known signature, low response latency, and the high detection throughput needed to keep up with the ever-increasing volume of online transactions at the intelligent edge. Further challenges are limited compute and power resources and the need for a hardware architecture flexible enough to adapt when threat conditions change.

Researchers at the Center for Advanced Electronics through Machine Learning (CAEML) have been investigating machine learning hardware that can accelerate ransomware detection at the intelligent edge. Paul Franzon, CAEML site director and principal investigator at NC State, and his graduate student Archit Gajjar are teaming up with engineers from Hewlett Packard Enterprise's Primary Storage division to identify the optimal hardware for detecting ransomware at the edge.

Several published studies apply machine learning techniques to ransomware detection, and many of them use ensemble classifiers as the underlying detection engine. We therefore focus on hardware that can accelerate ensemble classifiers.

To identify the optimal hardware for ensemble classifiers at the edge, we must consider several performance criteria. First and foremost is detection accuracy. The XGBoost classifier is well suited to this kind of detection task: it requires relatively few trees, and overfitting can easily be controlled by limiting tree depth. Shallow trees also lend themselves to hardware acceleration and parallelism.
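To make the shallow-tree point concrete, here is a minimal Python sketch of how a boosted ensemble of fixed-depth trees produces a score. It assumes a hypothetical flat-array layout (complete trees of one fixed depth, nodes stored in breadth-first order) rather than XGBoost's own model format, ignores missing-value handling, and follows the binary-logistic convention of summing leaf values into a margin and applying a sigmoid. The point to notice is that every sample takes exactly `depth` comparisons per tree, which is the kind of regularity that makes shallow trees attractive for parallel hardware.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class ShallowTree:
    """Complete binary tree of fixed depth stored in flat arrays (hypothetical layout)."""
    depth: int
    features: List[int]      # feature index tested at each of the 2**depth - 1 internal nodes
    thresholds: List[float]  # split threshold at each internal node, breadth-first order
    leaves: List[float]      # 2**depth leaf values, added to the ensemble margin

    def leaf_value(self, x: Sequence[float]) -> float:
        node = 0
        # Exactly `depth` steps for every input: the loop length never depends on the data.
        for _ in range(self.depth):
            go_right = x[self.features[node]] >= self.thresholds[node]
            node = 2 * node + 1 + int(go_right)  # children of node i are 2i+1 and 2i+2
        return self.leaves[node - (2 ** self.depth - 1)]


def predict_margin(trees: Sequence[ShallowTree], x: Sequence[float], bias: float = 0.0) -> float:
    """Boosted ensemble: add up the leaf value that each tree assigns to x."""
    return bias + sum(tree.leaf_value(x) for tree in trees)


def predict_proba(trees: Sequence[ShallowTree], x: Sequence[float], bias: float = 0.0) -> float:
    """Binary-logistic output: sigmoid of the summed margin."""
    return 1.0 / (1.0 + math.exp(-predict_margin(trees, x, bias)))


# Usage with two tiny hand-made trees (illustrative values, not a trained model):
t1 = ShallowTree(depth=1, features=[0], thresholds=[0.5], leaves=[-1.0, 1.0])
t2 = ShallowTree(depth=2, features=[1, 0, 0], thresholds=[10.0, 0.3, 0.9],
                 leaves=[0.1, 0.2, 0.3, 0.4])
score = predict_proba([t1, t2], [0.7, 12.0])
```

Limiting `depth` bounds both the work per tree and the size of each tree's arrays, which is why depth is a useful knob for accuracy (overfitting control) and for hardware cost at the same time.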

The classifier must also sustain a target number of transactions per second, or input/output operations per second (IOPS). IOPS targets vary widely by edge platform, but several hundred thousand to one million IOPS is not uncommon for high-end edge devices. Some online transaction devices also have service level agreements (SLAs) that guarantee a response time of a few milliseconds, which imposes a latency requirement on the classifier as well.
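The throughput and latency targets can be turned into simple budgets. At 1,000,000 IOPS, classifying every operation leaves 1 µs per inference on average (10 µs at 100,000 IOPS), and accumulating a batch of 1,000 requests takes 1 ms, which already uses a large part of a few-millisecond SLA. The sketch below is a simplified model under stated assumptions: requests arrive at a steady rate, a batch is computed only after it fills, and queuing and the rest of the I/O path are ignored. The compute times and budgets in the usage lines are hypothetical.

```python
def per_inference_budget_us(iops: float) -> float:
    """Average time available per inference, in microseconds, if every I/O is classified."""
    return 1e6 / iops


def batch_fill_time_ms(batch_size: int, iops: float) -> float:
    """Time, in milliseconds, to accumulate `batch_size` requests arriving at a steady `iops` rate."""
    return 1e3 * batch_size / iops


def keeps_up(batch_size: int, iops: float, compute_time_ms: float) -> bool:
    """Throughput check: each batch must finish before the next one has filled."""
    return compute_time_ms <= batch_fill_time_ms(batch_size, iops)


def meets_latency(batch_size: int, iops: float, compute_time_ms: float, budget_ms: float) -> bool:
    """Latency check (conservative): the first request in a batch waits roughly for the
    batch to fill and then for it to be computed. Queuing and other I/O latency are ignored."""
    return batch_fill_time_ms(batch_size, iops) + compute_time_ms <= budget_ms


# Hypothetical numbers: 1M IOPS, 0.5 ms to classify a batch, 2 ms latency budget.
ok_small = meets_latency(1000, 1_000_000, 0.5, 2.0)   # 1.0 ms fill + 0.5 ms compute
ok_large = meets_latency(4000, 1_000_000, 0.5, 2.0)   # 4.0 ms fill alone exceeds the budget
```

Larger batches make throughput easier to reach but push latency up, so the SLA effectively caps the batch size.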

Some intelligent edge devices are expected to run inferences for different tasks in a time-multiplexed manner. This means the batch size for any one model may be limited, and execution units will be shared with other inference models. Finally, edge devices may have a limited power supply, so low energy per inference is also highly desirable.
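Energy per inference is average power divided by sustained throughput, and time sharing reduces the throughput any one model sees. The helper functions below are a minimal sketch of that arithmetic; the wattages, throughputs, and time shares used with them are hypothetical, not measurements of any device.

```python
def energy_per_inference_uj(avg_power_w: float, inferences_per_s: float) -> float:
    """Energy per inference in microjoules: average power (W = J/s) divided by throughput."""
    return 1e6 * avg_power_w / inferences_per_s


def effective_throughput(peak_inferences_per_s: float, time_share: float) -> float:
    """Throughput left for one model that gets `time_share` (0, 1] of a shared accelerator."""
    if not 0 < time_share <= 1:
        raise ValueError("time_share must be in (0, 1]")
    return peak_inferences_per_s * time_share


# Hypothetical example: a 70 W device sustaining 1M inferences/s spends about 70 uJ per
# inference; if the ransomware model gets half the device's time, it sees 1M of a 2M peak.
e = energy_per_inference_uj(70.0, 1_000_000)
t = effective_throughput(2_000_000, 0.5)
```

Comparing hardware on energy per inference, rather than on power alone, rewards a design that draws more power but finishes proportionally more work.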

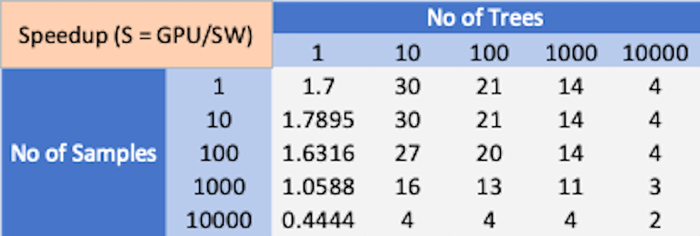

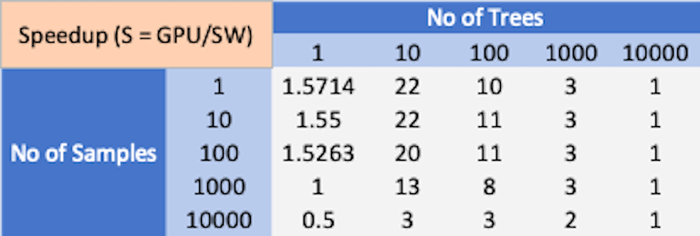

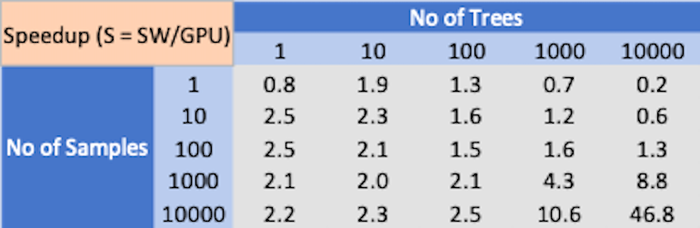

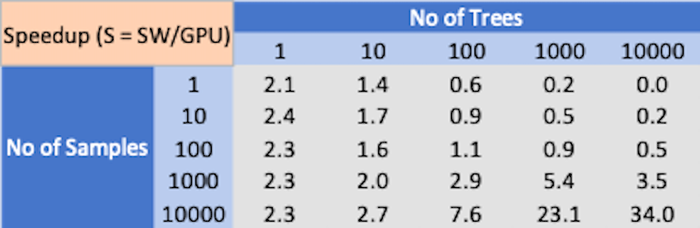

We evaluated two hardware options: a multicore CPU (Intel Xeon E5-2686 v4, 2.3 GHz base / 2.7 GHz turbo, with 12 cores used for benchmarking) and a GPU (Nvidia Tesla T4). We measured the XGBoost inference speed of each using several well-known public-domain ransomware and security-application datasets. The results are shown in the figures above.

As the results show, there is no clear winner in XGBoost inference performance between the multicore CPU and the GPU. Depending on the complexity of the problem and the batch size, either one can outperform the other.
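Because the winner depends on batch size, a comparison like this is usually run as a sweep. The harness below is a generic sketch, not the benchmark code used for the results above: it times any `predict` callable on batches of increasing size and reports the best observed throughput and the corresponding batch latency. With XGBoost, `predict` could be, for example, the `predict_proba` method of a trained `xgboost.XGBClassifier` applied to a NumPy array, with the CPU thread count set through its `n_jobs` parameter. The dummy predictor in the usage line is only a placeholder.

```python
import time
from typing import Callable, Dict, Sequence, Tuple


def sweep_batch_sizes(
    predict: Callable[[Sequence], object],
    samples: Sequence,
    batch_sizes: Sequence[int],
    repeats: int = 5,
) -> Dict[int, Tuple[float, float]]:
    """Map each batch size to (best throughput in samples/s, batch latency in s)."""
    results: Dict[int, Tuple[float, float]] = {}
    for size in batch_sizes:
        if size > len(samples):
            raise ValueError(f"batch size {size} exceeds the {len(samples)} available samples")
        batch = samples[:size]
        predict(batch)  # warm-up call, excluded from timing (caches, lazy initialization)
        best = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            predict(batch)
            best = min(best, time.perf_counter() - start)
        best = max(best, 1e-9)  # guard against a zero reading on a very fast call
        results[size] = (size / best, best)
    return results


# Placeholder predictor; substitute a real model's prediction call when benchmarking.
results = sweep_batch_sizes(lambda b: [sum(x) for x in b], [[1.0, 2.0]] * 256, [1, 16, 256], repeats=3)
```

Taking the best of several repeats reduces noise from other processes; reporting latency alongside throughput makes it visible when a large batch wins on throughput but would break a latency budget.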

It is also widely held that CPUs typically have a power-efficiency advantage over GPUs. Some studies of random forest inference performance suggest that GPUs lose performance to branch divergence when executing decision trees: threads that process different samples take different paths through a tree, and the GPU must serialize those divergent paths. This leaves the door open for custom hardware optimized for XGBoost inference. Such a design can exploit enough parallelism to outperform a multicore CPU while avoiding the branch-divergence problems that affect GPUs.
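The sketch below illustrates the divergence problem and one generic way around it; it is not a description of the CAEML design, which the article does not detail. The first function counts how many distinct nodes a group of samples occupies at each tree level when they traverse in lockstep, as threads of a GPU warp would; more than one node means the group has split onto different paths. The other two functions show an alternative that suits custom hardware: compare every internal node at once (parallel comparators), then select the leaf from the comparison bits with a fixed number of steps (a small multiplexer tree in hardware). The complete-tree, breadth-first array layout is an assumption.

```python
from typing import List, Sequence


def distinct_nodes_per_level(batch: Sequence[Sequence[float]], features: List[int],
                             thresholds: List[float], depth: int) -> List[int]:
    """Distinct nodes occupied at each level by samples traversing one tree in lockstep."""
    nodes = [0] * len(batch)
    counts = []
    for _ in range(depth):
        counts.append(len(set(nodes)))
        # Each sample moves to child 2n+1 (left) or 2n+2 (right) of its current node n.
        nodes = [2 * n + 1 + int(x[features[n]] >= thresholds[n]) for n, x in zip(nodes, batch)]
    return counts


def compare_all_nodes(x: Sequence[float], features: List[int], thresholds: List[float]) -> List[bool]:
    """Evaluate every internal node's comparison, independent of the path actually taken."""
    return [x[f] >= t for f, t in zip(features, thresholds)]


def select_leaf(bits: List[bool], depth: int) -> int:
    """Pick the leaf index from precomputed comparison bits in exactly `depth` steps."""
    node = 0
    for _ in range(depth):
        node = 2 * node + 1 + int(bits[node])
    return node - (2 ** depth - 1)


# Usage on a depth-2 tree with three samples (illustrative values).
features, thresholds = [1, 0, 0], [10.0, 0.3, 0.9]
batch = [[0.7, 12.0], [0.2, 5.0], [0.95, 20.0]]
per_level = distinct_nodes_per_level(batch, features, thresholds, 2)
leaves = [select_leaf(compare_all_nodes(x, features, thresholds), 2) for x in batch]
```

For this batch, all samples share the root but split onto two different nodes at the next level, while the compare-all approach does the same work for every sample. Comparing all nodes costs 2**depth - 1 comparators per tree, which stays affordable only because XGBoost trees can be kept shallow.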

CAEML researchers have been looking into such a design, and preliminary results are very encouraging. We expect a more detailed report toward the end of the year, when the silicon prototype will be ready for testing. The custom silicon will also be able to multiplex other XGBoost inference tasks at the edge.
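As a rough illustration of how one shared evaluator might be time-multiplexed across several XGBoost models, the sketch below assumes a simple round-robin policy over per-model request queues with a cap on batch size per turn. The policy, the cap, and the model names are hypothetical and are not details of the CAEML design. The cap is also what limits each model's batch size on a time-multiplexed edge device.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


def round_robin_schedule(queues: Dict[str, Deque], max_batch: int) -> List[Tuple[str, list]]:
    """Drain per-model request queues in round-robin order, at most `max_batch` per turn."""
    if max_batch < 1:
        raise ValueError("max_batch must be at least 1")
    schedule: List[Tuple[str, list]] = []
    names = list(queues)
    while any(queues[name] for name in names):
        for name in names:
            queue = queues[name]
            if not queue:
                continue  # skip models with no pending requests this round
            batch = [queue.popleft() for _ in range(min(max_batch, len(queue)))]
            schedule.append((name, batch))  # one evaluator turn: (model, batch of requests)
    return schedule


# Hypothetical models sharing one evaluator:
plan = round_robin_schedule(
    {"ransomware": deque([1, 2, 3, 4, 5]), "anomaly": deque(["a", "b"])},
    max_batch=2,
)
```

Round robin keeps any one model from starving the others; a real scheduler might instead weight turns by each model's latency target.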

As more and more intelligent IoT devices are deployed in the field for critical infrastructure, the need to secure them becomes paramount. We are glad that CAEML researchers are focusing on designing this new class of hardware to help protect the edge from ransomware attacks.

This research is partially funded by grants from the National Science Foundation (award CNS 16-244770) and CAEML member companies.

Chris Cheng is a Distinguished Technologist in the Storage Division of Hewlett Packard Enterprise. He is responsible for managing all high-speed, analog, and mixed-signal designs within the Storage Division. He previously held senior engineering positions at Sun Microsystems and Intel. Cheng is currently co-chair of DesignCon’s Machine Learning for Microelectronics, Signaling & System Design conference track. To register for DesignCon, held August 16-18, 2021, in San Jose, CA, and gain more insight into machine learning and AI for hardware design, visit DesignCon.com.
