Determining the right combination of centralized and decentralized information processing is essential to realizing the optimal design and functionality of autonomous vehicles.

December 28, 2018

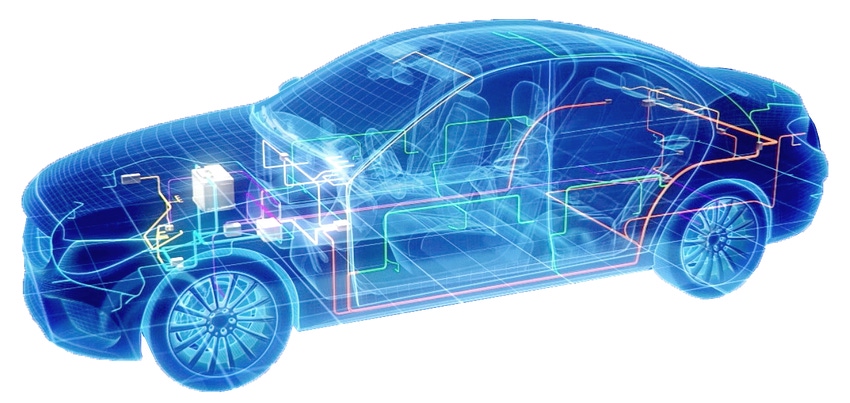

Designing autonomous vehicles includes making key architectural decisions relative to in-vehicle networks. (Image source: Mentor, a Siemens business)

If you were to ask scientists or engineers how long it will be until fully autonomous vehicles are commercially realized, the answers would range from a few years to a few decades.

There are surely several reasons for this disparity, but the technical challenges that must be overcome to reach this milestone should not be underestimated. One of those challenges is the sensing and sensor fusion technology needed to understand the environment well enough to support the driving task. At present, there isn’t even a consensus on whether driverless cars should be considered automated or autonomous, which poses a stark challenge to the engineers developing sensor integration frameworks.

So how will sensors be developed to support driverless vehicles, and should those vehicles be automated or autonomous? Sensors for highly assisted driving (i.e., ADAS) measure a very specific property so that the assistance system can undertake a very specific action. In automated driving, the sensor systems support the robotic vehicle in undertaking a pre-defined set of maneuvers as precisely as possible. At the extreme end, sensors in autonomous vehicles must provide the system with a sufficiently high-fidelity representation of the environment that it can plan its own course of action. This, of course, introduces a substantial challenge from the sensing perspective, as no single sensor offers the fidelity, precision, and spectral capability needed to perceive the environment at the level required for fully autonomous driving.

To achieve such a highly effective level of sensing, a number of open questions remain. One of the most important is the role of sensor fusion and, in particular, the choice between centralized and decentralized fusion architectures. One of the challenges with traditional ECU-based architectures is that the growing number of ECUs and their increasingly sophisticated software escalate the complexity of vehicle system design at a rate that is not commensurate with the application functionality. While it’s possible to develop a centralized information and communications technology (ICT) architecture for cars, doing so remains a challenge that has yet to be effectively addressed.

What we do know is that, with the increasing number of sensors in vehicles (as outlined in “A Centralized Platform Computer Based Architecture for Automotive Applications” by Stefan Sommer at the research institute fortiss GmbH in Munich, Germany), architectural complexity will continue to increase. This raises the question of whether the architectures for integrating sensors in autonomous vehicles should employ a centralized approach, in which raw sensor data is fused at a central node, or a decentralized approach, in which individual sensors estimate independent object tracks to be shared with a central system. Below, we consider some of the key technical challenges from a sensor fusion perspective.

Centralized Fusion Architectures

Within a centralized fusion architecture, all of the remote nodes are directly connected to a central node, which undertakes the main fusion functionality. The client nodes often differ in their physical location, spectral operation, and physical characteristics. For example, radar and LiDAR sensors will be placed in different locations, have different capabilities and levels of fidelity, and operate on different physical principles. These sensors forward their “raw data,” which is aligned and registered to a common spatial and temporal coordinate framework. Sets of data from the same target are associated, and then the data is integrated. The result is an estimate of the target state generated from multi-sensor data.
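The registration and association steps described above can be sketched in a few lines. This is a minimal 2D illustration, not a production method: the mounting poses, the point values, the 1 m gate, and the helper names `to_vehicle_frame` and `associate` are all assumptions made for the example, and temporal alignment is left out.

```python
import math

def to_vehicle_frame(point, mount_xy, mount_yaw):
    """Rotate and translate a 2D point from a sensor frame into the vehicle frame."""
    x, y = point
    c, s = math.cos(mount_yaw), math.sin(mount_yaw)
    return (mount_xy[0] + c * x - s * y, mount_xy[1] + s * x + c * y)

def associate(points_a, points_b, gate=1.0):
    """Greedy nearest-neighbour association within a distance gate (metres)."""
    pairs, used = [], set()
    for i, a in enumerate(points_a):
        best, best_d = None, gate
        for j, b in enumerate(points_b):
            if j in used:
                continue
            d = math.hypot(a[0] - b[0], a[1] - b[1])
            if d <= best_d:
                best, best_d = j, d
        if best is not None:
            used.add(best)
            pairs.append((i, best))
    return pairs

# Hypothetical detections, each expressed in its own sensor frame.
radar_raw = [(10.0, 0.0), (20.0, 5.0)]
lidar_raw = [(5.2, -20.9), (0.1, -11.1)]

# Hypothetical mounting poses: (x, y) offset in metres and yaw in radians.
radar_pts = [to_vehicle_frame(p, (2.0, 0.0), 0.0) for p in radar_raw]
lidar_pts = [to_vehicle_frame(p, (1.0, 0.0), math.pi / 2) for p in lidar_raw]

# Each pair is (radar index, lidar index) believed to be the same target.
pairs = associate(radar_pts, lidar_pts)
```

Greedy nearest-neighbour matching is only the simplest option. With many raw detections per sensor, the cost of this step grows quickly, which is one reason association is expensive in a centralized design.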

From a fusion perspective, a centralized architecture is preferable. Because no information has been shared between nodes before the central fusion step, the measurements from different sensors can be treated as conditionally independent, which provides the most appropriate framework for optimal Bayesian fusion. There are two major drawbacks, however: The communications bandwidth needed to transport all of the “raw data” to the central node grows to the order of gigabits per second, and the computational expense of associating all of the raw data with possible targets rises significantly. From a systems perspective, a centralized architecture reduces system design complexity and decreases the latency between the sensing and actuation phases.
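To make the role of conditional independence concrete, here is a minimal sketch of Bayesian fusion for scalar measurements with Gaussian noise. Under that assumption the optimal fused estimate is the inverse-variance weighted mean. The function name `fuse_independent` and the range and variance values are hypothetical.

```python
def fuse_independent(measurements):
    """Fuse (value, variance) pairs, assuming conditionally independent Gaussian noise."""
    info = sum(1.0 / var for _, var in measurements)            # total information
    mean = sum(val / var for val, var in measurements) / info   # inverse-variance weighted mean
    return mean, 1.0 / info

# Hypothetical range readings to the same target: (metres, variance in m^2).
radar = (25.4, 0.25)
lidar = (25.0, 0.04)

fused_range, fused_var = fuse_independent([radar, lidar])
```

The fused variance is smaller than that of either sensor alone. That gain is only legitimate when the inputs really are independent, which is what the centralized architecture guarantees.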

The right combination of centralized and decentralized information processing is key to the optimal design and functionality of autonomous vehicles. (Image source: Mentor, a Siemens business)

Decentralized Fusion Architectures

A decentralized fusion system consists of a network of sensing nodes, each able to process its own data to form object tracks and then communicate these to both adjacent nodes and the central node. In this system, locally generated object tracks are communicated to the central fusion system, which combines all of the local tracks to form a common estimate that is used for global decision making. This is typically the type of fusion system implemented in modern highly assisted and automated vehicles.

In many ways, the decentralized fusion process is more complicated from both an architectural and an algorithmic perspective. This is principally because each node is designed to make efficient use of its own sensor’s characteristics, with signal processing optimized per sensor. From an architectural perspective, this increases latency between sensing and actuation, but greatly reduces the demand on bandwidth and centralized processing. From a fusion perspective, it reduces the complexity of the data association step, but introduces challenges related to the sharing of common information. Specifically, there is a danger of information being double-counted within the system. An entire research field investigates how to handle this most effectively.
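The double-counting problem can be shown with a scalar sketch. Covariance intersection is one well-known conservative technique for fusing tracks whose correlation is unknown. It is used here purely as an illustration, not as the method any particular vehicle implements, and the function names, the fixed weight `w`, and the track values are assumptions.

```python
def naive_fuse(a, b):
    """Fuse two (mean, variance) tracks as if they were independent."""
    info = 1.0 / a[1] + 1.0 / b[1]
    mean = (a[0] / a[1] + b[0] / b[1]) / info
    return mean, 1.0 / info

def covariance_intersection(a, b, w=0.5):
    """Scalar covariance intersection with weight w in [0, 1].

    Stays consistent even if the two tracks share common information.
    """
    info = w / a[1] + (1.0 - w) / b[1]
    mean = (w * a[0] / a[1] + (1.0 - w) * b[0] / b[1]) / info
    return mean, 1.0 / info

# Hypothetical worst case: the same track arrives at the central node twice
# via two different routes, so the second copy carries no new information.
track = (10.0, 1.0)

naive_mean, naive_var = naive_fuse(track, track)
ci_mean, ci_var = covariance_intersection(track, track)
```

Naive fusion halves the variance even though nothing new was learned, so the system becomes overconfident. Covariance intersection leaves the variance unchanged, at the price of being conservative when the tracks are in fact independent.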

What is important to realize in this context is the importance of sensor modeling. Fusion algorithms, such as the Kalman filter, rely on accurate models of sensor noise and uncertainty in order to function effectively. However, sensor modeling is a complex and sophisticated process. Many systems (at least in my experience) rely on guesstimates of the sensor model, as opposed to high-precision measurements that determine the real sensor models. In these cases, fusion algorithms degenerate into weighted averaging algorithms that simply blend the data. With multiple fusion algorithms running across different nodes in the system, this problem can be compounded.
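A scalar Kalman measurement update shows why the sensor model matters. The update is a weighted average of the prediction and the measurement, and the weight (the gain) is set entirely by the assumed noise variances. The sketch below uses hypothetical values. `r_measured` stands in for a variance obtained by careful characterization and `r_guessed` for an optimistic guesstimate.

```python
def kalman_update(x_pred, p_pred, z, r):
    """Scalar Kalman measurement update.

    x_pred, p_pred: predicted state and its variance
    z: measurement; r: assumed measurement-noise variance (the sensor model)
    """
    k = p_pred / (p_pred + r)           # Kalman gain
    x_new = x_pred + k * (z - x_pred)   # same as (1 - k) * x_pred + k * z
    p_new = (1.0 - k) * p_pred
    return x_new, p_new, k

x_pred, p_pred, z = 20.0, 1.0, 22.0     # hypothetical prediction and measurement

r_measured = 4.0    # assumed to come from real sensor characterization
r_guessed = 0.25    # assumed guesstimate that trusts the sensor too much

x_a, p_a, k_a = kalman_update(x_pred, p_pred, z, r_measured)
x_b, p_b, k_b = kalman_update(x_pred, p_pred, z, r_guessed)
```

With the guessed variance, the filter leans heavily on a noisy measurement and reports an uncertainty that is far too small. The arithmetic still runs, but the weights no longer reflect reality, so the output is just a weighted average with arbitrary weights.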

So what is the right architecture to use? Well, like all good answers, it depends. The two architectures offer different tradeoffs. Centralized architectures are less complex at the system level, need less total computational power, and are likely to produce the optimal fusion result. However, they are more algorithmically complex and require a team with excellent knowledge of the sensor systems in order to model them effectively. Decentralized architectures, on the other hand, have lower bandwidth requirements, but increase latency, add system complexity, and make the fusion system more conservative. In return, they allow system developers to focus purely on system integration and the development of object-level fusion algorithms, without needing deep expertise in raw sensor data processing and complex data association methods.

Success in making the most effective autonomous vehicle will undoubtedly go to the organization that effectively realizes the right combination of centralized and decentralized information processing.

Dr. Daniel Clarke is principal engineer for the Automotive Business Unit at Mentor, a Siemens business.
