Reliability Physics provides a way for design engineers to predict reliability and improve product performance.

July 25, 2018

This is part two of Craig Hillman’s article on reliability in electronic design. His first article was "The End is Near for MIL-HDBK-217 and Other Outdated Handbooks."

Welcome to the 21st Century! Now What?

From smart phones and wearables to self-driving cars and UAVs, electronics are not just proliferating throughout everyday life; they are also increasingly affecting consumer safety. As electronics become smaller, run hotter, and are placed into the "real" world (no more cushy data center or home office!), they also become more susceptible to failure. How do you ensure that the electronic hardware in just about everything today operates safely and reliably over the product lifetime? There is truly only one answer: Throw out the outdated handbooks and join the world of Reliability Physics Analysis (RPA).

What is Reliability Physics Analysis (RPA)?

Reliability Physics Analysis is a science-based approach that uses what we know about failure mechanisms to predict reliability and improve product performance. Powerful simulation tools are used at the product design phase to model possible causes of failure like shock, vibration, temperature cycling, wear-out, and corrosion. While this methodology is practically common sense in other industries (Want to drive over a bridge whose reliability is only based on statistics?), Reliability Physics has only recently gained significant traction within the electronics industry.

RPA used to be known as Physics of Failure, or PoF. However, evangelizers of RPA should be aware that PoF has become an outdated term, no longer preferred because of its unintended implications. The rationale behind RPA vs. PoF can be confusing to engineers, but it is quite clear to management. Quite simply, words matter. The introduction of a new process, such as RPA, almost always requires support throughout the organization before it can become standardized and widely accepted. RPA implies a dedication to best practices that ensure product performance. Unfortunately, the term "Physics of Failure" can imply that the organization embraces failure, a perception that is hard for even the best executive to overcome.

The History of Reliability Physics

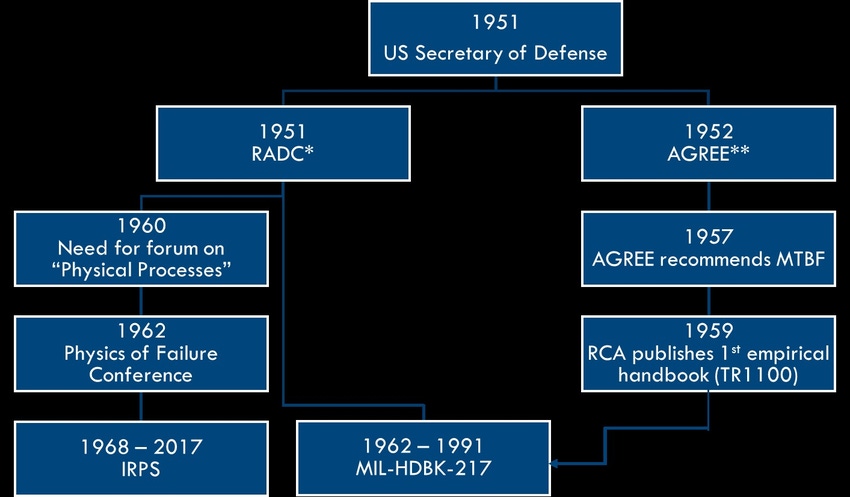

Even though Reliability Physics is only now gaining significant traction within the electronics industry, the concept was first sponsored by the US military more than 60 years ago. Its goal was to address the poor performance of weapons and other electronic systems during World War II. Unfortunately, the history of that initiative is quite convoluted, as the timeline below indicates.

Figure (timeline): The concept of Reliability Physics was first sponsored by the US military more than 60 years ago to address the poor performance of weapons and electronics in World War II. (Image source: DfR Solutions)

The US Secretary of Defense sponsored two complementary (competing?) initiatives in the 1950s. The Rome Air Development Center (RADC) initiated a series of activities and industry forums that eventually resulted in the robust RPA practices performed by the semiconductor industry today. The Ad-Hoc Group on Reliability of Electronics Equipment (AGREE) went down a completely different path, eventually deciding that basing reliability on physics was too complicated. Instead, it steered the military supply chain toward a statistical approach based on an empirical handbook. For some reason, RADC agreed with this premise, and the first empirical handbook, MIL-HDBK-217, was born.

But RPA never went away. While RPA clearly became a powerful tool in semiconductor design, innovative engineers at a variety of organizations (including Engelmaier at Bell Labs, Steinberg at Litton Industries, and Gasperi at Rockwell Automation) worked diligently to develop RPA techniques at the board level. They were motivated by the conviction that RPA is always better than handbooks. The superiority of the RPA methodology is clearest in four specific benefits:

Benefit #1: RPA accurately predicts actual field failures over time.

The justification behind empirical handbooks is that they are based on data from the field. (This is not quite true; see the previous article.) But they do a horrible job of predicting actual field failures because their numbers are not tied to what might actually fail, or why. In many industries, RPA would be described as common sense: find out why something fails, and then design the product to avoid those failures. This is especially critical for safety applications, where the type of failure is almost as important as the likelihood of failure. Additional mitigations (fuses, monitoring circuits) can be implemented based on the results of RPA. Unlike handbooks, which assume a constant failure rate, RPA does not treat failures as “random” over time, so it can provide a clear understanding of maintenance requirements, actions, and timing.
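The difference between a constant, “random” failure rate and a wear-out mechanism can be sketched in a few lines of Python. This is a minimal illustration, not an RPA tool: it assumes the handbook prediction reduces to a single constant rate λ (the exponential model behind MIL-HDBK-217-style calculations) and models wear-out with a Weibull hazard whose shape parameter β is greater than 1. All parameter values are hypothetical.

```python
HOURS_PER_YEAR = 8760.0


def constant_hazard(t_hours: float, lam_per_hour: float) -> float:
    """Handbook-style assumption: the failure rate does not change with age."""
    return lam_per_hour


def weibull_hazard(t_hours: float, beta: float, eta_hours: float) -> float:
    """Weibull hazard h(t) = (beta / eta) * (t / eta) ** (beta - 1).

    beta > 1 gives a hazard that rises with age (wear-out); beta == 1
    collapses to the constant rate 1 / eta.
    """
    return (beta / eta_hours) * (t_hours / eta_hours) ** (beta - 1)


# Hypothetical parameters, for illustration only.
LAMBDA = 1.0e-5   # constant failure rate, failures per hour
BETA = 3.0        # Weibull shape parameter (wear-out)
ETA = 150_000.0   # Weibull characteristic life, hours

for years in (1, 5, 10, 15):
    t = years * HOURS_PER_YEAR
    print(
        f"{years:>2} yr  constant: {constant_hazard(t, LAMBDA):.2e}/h  "
        f"wear-out: {weibull_hazard(t, BETA, ETA):.2e}/h"
    )
```

With these made-up numbers, the wear-out hazard starts far below the constant rate and overtakes it late in life, which is exactly the timing information a single constant rate cannot express.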

Benefit #2: RPA can predict performance of technology that you have never used.

The glaring flaw of empirical handbooks is that with new technology, someone has to go first. That is, if reliability can only be predicted based on prior use, what is the reliability of something that has never been used? Because handbooks do not address this fundamental flaw, organizations that rely on them are forced to develop arbitrary approaches, such as reliability by similarity (RBS), with little to no justification (also known as “opinioneering”). The value of RPA lies in its foundation on fundamental failure mechanisms that are independent of technology. While the rate of damage evolution may change, depending primarily on the specific material, the base behavior rarely does. The new parameters are derived through a set of well-known characterization and accelerated testing activities.
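As one example of deriving parameters from accelerated testing, the sketch below assumes a thermally activated mechanism that follows the Arrhenius relationship (a common choice, but not universal). It estimates the activation energy Ea from median times-to-failure at two test temperatures, then computes the acceleration factor to a use temperature. The function names and test data are hypothetical.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant, eV/K


def c_to_k(t_c: float) -> float:
    """Convert degrees Celsius to kelvin."""
    return t_c + 273.15


def activation_energy_ev(ttf_low_h: float, t_low_c: float,
                         ttf_high_h: float, t_high_c: float) -> float:
    """Estimate Ea from median times-to-failure at two test temperatures.

    Arrhenius: ttf = A * exp(Ea / (k * T)), so
    ln(ttf_low / ttf_high) = (Ea / k) * (1 / T_low - 1 / T_high).
    """
    inv_t_diff = 1.0 / c_to_k(t_low_c) - 1.0 / c_to_k(t_high_c)
    return BOLTZMANN_EV_PER_K * math.log(ttf_low_h / ttf_high_h) / inv_t_diff


def acceleration_factor(ea_ev: float, t_use_c: float, t_test_c: float) -> float:
    """AF = ttf_use / ttf_test = exp((Ea / k) * (1 / T_use - 1 / T_test))."""
    return math.exp((ea_ev / BOLTZMANN_EV_PER_K)
                    * (1.0 / c_to_k(t_use_c) - 1.0 / c_to_k(t_test_c)))


# Hypothetical accelerated-test results (median hours to failure).
ea = activation_energy_ev(2000.0, 125.0, 600.0, 150.0)
af = acceleration_factor(ea, t_use_c=55.0, t_test_c=125.0)
print(f"Ea ~ {ea:.2f} eV, AF(55 C vs 125 C) ~ {af:.0f}, "
      f"estimated median life at 55 C ~ {2000.0 * af:,.0f} h")
```

The extrapolation is only as good as the assumption that the same mechanism dominates at both the test and use conditions, which is why characterization comes before the arithmetic.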

Examples of this consistency across old and new technologies abound. For semiconductor devices, dielectric breakdown has been a known failure mechanism since the earliest integrated circuits. Its fundamental behavior has been relatively consistent (including the extremely interesting observation that the Weibull slope depends on dielectric thickness). Debates over the E, 1/E, and V models center primarily on the specific mechanism by which breakdown occurs and on the need for more extended testing. For packaging, low-cycle fatigue of SnPb, SAC305, and newer high-reliability solders can all be accurately predicted from the work performed, or strain energy dissipated, over each temperature cycle (no need to be afraid of lead-free electronics).
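The E vs. 1/E debate matters because the two models can agree at high stress yet disagree enormously at use conditions. The sketch below fits both models through the same two hypothetical high-field test points and extrapolates to a lower use field. It then shows an energy-based power law for solder fatigue, Nf = C · ΔW^(-n), where the same functional form is reused with different placeholder constants per alloy. None of the numbers are measured data, and the power law is one common energy-based formulation, not necessarily the one any particular tool uses.

```python
import math


# Dielectric breakdown: fit the E and 1/E models to the same two test points.
def fit_e_model(e1, t1, e2, t2):
    """E model: ttf = a * exp(-gamma * E). Returns (a, gamma)."""
    gamma = math.log(t1 / t2) / (e2 - e1)
    a = t1 * math.exp(gamma * e1)
    return a, gamma


def fit_inv_e_model(e1, t1, e2, t2):
    """1/E model: ttf = tau0 * exp(g / E). Returns (tau0, g)."""
    g = math.log(t1 / t2) / (1.0 / e1 - 1.0 / e2)
    tau0 = t1 * math.exp(-g / e1)
    return tau0, g


# Hypothetical high-field stress results: field in MV/cm, median TTF in seconds.
E1, T1 = 10.0, 1.0e3
E2, T2 = 11.0, 1.0e2
E_USE = 5.0

a, gamma = fit_e_model(E1, T1, E2, T2)
tau0, g = fit_inv_e_model(E1, T1, E2, T2)
ttf_e = a * math.exp(-gamma * E_USE)
ttf_inv_e = tau0 * math.exp(g / E_USE)
print(f"TTF at {E_USE} MV/cm: E model ~ {ttf_e:.1e} s, 1/E model ~ {ttf_inv_e:.1e} s")


# Solder fatigue: energy-based power law Nf = C * dW ** (-n), where dW is the
# strain energy density dissipated per temperature cycle (e.g. from simulation).
# Hypothetical placeholder alloys and constants, NOT published values.
HYPOTHETICAL_FATIGUE_CONSTANTS = {
    "alloy_A": (1000.0, 1.0),
    "alloy_B": (800.0, 1.2),
}


def cycles_to_failure(alloy: str, dw: float) -> float:
    """Cycles to failure for a given dissipated energy density per cycle."""
    c, n = HYPOTHETICAL_FATIGUE_CONSTANTS[alloy]
    return c * dw ** (-n)


for alloy in HYPOTHETICAL_FATIGUE_CONSTANTS:
    print(alloy, f"{cycles_to_failure(alloy, dw=0.5):,.0f} cycles")
```

With these made-up inputs, both dielectric models reproduce the test data exactly, yet their use-field predictions differ by six orders of magnitude, with the E model giving the shorter, more conservative life. Only longer testing closer to use conditions can settle which one applies.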

The fundamental advantage of this capability is that it gives reliability an active role in technology roadmaps. High-reliability industries, such as military, aerospace, and industrial, need only adopt new technology once robust RPA shows that it is sufficiently reliable for their application. The need to figure out what everyone else is doing effectively evaporates. In short, RPA provides a robust basis for estimating reliability, regardless of prior experience.


Benefit #3: RPA allows reliability to be truly a part of the design process.

One of the greatest limitations of the handbook approach is that it is not truly part of the design process. Designs are rarely changed due to failure rates calculated through the handbook. (“Adjustments” in the calculation are far more likely.) In fact, this activity is often performed after the design has been finalized (or close to it). As a result, handbook calculations become a check-the-box exercise rather than a value-add activity.

By comparison, RPA is a fundamental part of a robust design for reliability (DfR) process. Interest in DfR is exploding around the world as senior-level managers and executives see a substantial return on investment (ROI) compared with traditional design-test-fix methods. (If the world never sees another Duane curve, it will be too soon.) Most critically, an organizational focus on DfR gives the hardware design team the opportunity to transition to a true agile or semi-agile development process. If you don’t need to build a physical prototype every time you make a change, “let’s try it and see” becomes a much more realistic approach. (And it aligns with the agile process that the software team implemented 10 years ago!)

Benefit #4: RPA changes the mindset, allowing for a proactive maintenance strategy.

The biggest benefit of RPA is that it aligns the entire design team (including reliability) and the supply chain with the notion that failure rate, failure site, failure mode, and failure mechanism can be predicted and mitigated. With this knowledge comes the ability to introduce more proactive maintenance strategies. The actual approach varies, depending on the organization and the technology. Some companies use weak links and others monitor environmental conditions, while still others gather system performance data.

Regardless of the approach, none of the three is effective if it relies on blind statistical analysis. RPA is critical for identifying the best methodology, the parametric data that should be monitored, how the data should be analyzed, and the fail points that indicate when maintenance should be performed.
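To connect physics-based predictions to a maintenance trigger, one common (but by no means the only) technique is the Palmgren-Miner linear damage rule: each load condition consumes a fraction 1/Nf of life per cycle, and maintenance is scheduled when accumulated damage crosses a chosen threshold. The sketch below assumes the Nf values already come from an RPA model; the load bins, cycle counts, and the 0.5 threshold are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class LoadBin:
    """One recurring load condition (hypothetical example inputs)."""
    name: str
    cycles_per_day: float
    cycles_to_failure: float  # Nf predicted by a physics-based model


def daily_damage(bins: list[LoadBin]) -> float:
    """Palmgren-Miner: damage accumulated per day = sum(n_i / Nf_i)."""
    return sum(b.cycles_per_day / b.cycles_to_failure for b in bins)


def days_until_maintenance(bins: list[LoadBin], threshold: float = 0.5,
                           damage_so_far: float = 0.0) -> float:
    """Days until accumulated damage reaches the maintenance threshold."""
    rate = daily_damage(bins)
    if rate <= 0.0:
        return math.inf  # no damaging loads, so no wear-out-driven trigger
    return max(0.0, (threshold - damage_so_far) / rate)


bins = [
    LoadBin("power on/off cycle", cycles_per_day=1.0, cycles_to_failure=20_000.0),
    LoadBin("outdoor day/night swing", cycles_per_day=1.0, cycles_to_failure=50_000.0),
]
days = days_until_maintenance(bins)
print(f"Schedule maintenance in ~{days / 365.0:.1f} years")
```

If monitoring shows harsher conditions than assumed, updating `cycles_per_day` or passing a measured `damage_so_far` moves the maintenance date accordingly, which is the kind of proactive strategy the monitoring approaches above aim for.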

The Biggest Challenge: Implementing RPA into the Design Process

If RPA provides such clear benefits, why is it not adopted worldwide? The challenge is multifaceted, but it includes inertia, requirements, the supply chain, and tool sets. Inertia is the reason that applies most broadly across industries. We have to remember that RPA prevents failures, but it does not directly make the product functionally better, cheaper, or smaller. (It may do so indirectly, but that is harder to measure.) Better/cheaper/smaller is the mantra of the technology industry, and every hardware executive is focused on moving faster than competitors on any or all of those three attributes. So, if RPA is not required, it can take a lot longer and a lot more data to prove its ROI.

RPA is also not required (at least, not as often as handbooks are). The lack of RPA requirements stifles the use of RPA not only within the organization, but also down the supply chain. For example, if the FAA does not require or does not accept RPA, it can be harder for Boeing or Airbus to require RPA from their supply chains. And if part manufacturers are not providing RPA results or the information needed to perform RPA (primarily because marketing told them it was not necessary), the entire process can stall.

Finally, the surprising lack of tool sets has held back the implementation of RPA. Historically, companies that did perform some variant of RPA relied on internal tools or simple spreadsheets. These internal tools result in inconsistent approaches from company to company. (There’s that supply chain thing again.) They also tend to cause a loss of knowledge when the tool’s owner retires or moves on to another company, a significant risk that management would rather avoid.

But this paucity of RPA tools is finally changing. There are now RPA tools that leverage the major advances in software and computational power over the past 20 years. Web-based libraries provide access to an extensive range of the part- and package-level information necessary to perform RPA. The movement to CAD/CAE apps, leveraging the explosion of mobile apps, has given a wider range of engineers access to powerful simulation and modeling capabilities. And Moore’s Law has surely been beneficial. Simulation times have dropped from days to hours to minutes, allowing RPA activities to evaluate multiple parts instead of just one, perform several iterations within a reasonable time period, and incorporate all relevant details.

These advanced tools are allowing RPA at the design edge, with electrical and mechanical design teams performing RPA with a consistent approach that encourages sharing of results up and down the supply chain. The result is that design teams are hitting project schedules more consistently and OEMs are starting to accept RPA as a methodology for product qualification. Maybe, just maybe, RPA has finally turned the corner.

Craig Hillman, PhD, is the CEO of DfR Solutions.
