Open-source software verification of open-source hardware designs like RISC-V poses many new challenges.

March 29, 2021

The allure of open-source hardware is the flexibility for designers to create their own CPU-based platforms. Advocates believe that freely available open-source systems will encourage a new wave of processor innovation and create new market segments. These advocates point to the increasing amount of open-source IP that is available and being implemented in many new chip designs. But what about verification?

While the design community is encouraged, verification engineers are cautious. In the past, the instruction set architectures (ISAs) used with intellectual property (IP)-based processors came from a single source such as Arm, Intel, or AMD. These ISAs were supported by established industry verification tools and methodologies.

However, designers who build processors on the open-source RISC-V ISA will need to ensure the verification flow of their customized processors and system-on-chip (SoC) open-source hardware themselves.

The electronic design automation (EDA) and IP communities worry that they will have to devise customized and untried verification flows for the still relatively new RISC-V-based open-source hardware.

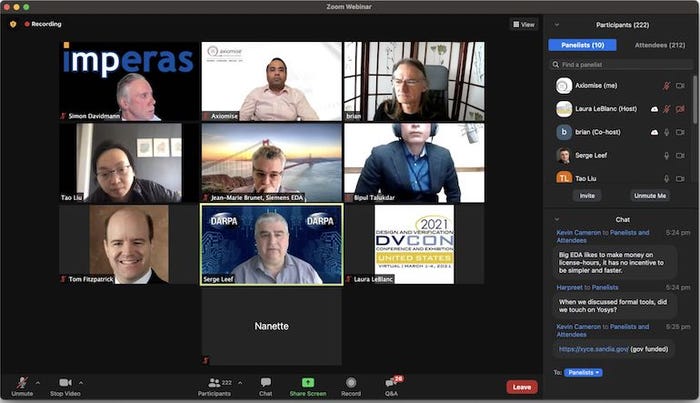

These concerns were discussed during a panel at the recent virtual DVCon 2021. Semiconductor Engineering’s Brian Bailey moderated the panel of design verification experts and open-source proponents, who addressed the verification challenges. What follows are the edited positioning statements from each of the panelists, which set the tone for the more detailed debate that can be seen on the DVCon 2021 site.

Jean-Marie Brunet/Siemens EDA: For those of us in the hardware-assisted verification domain, the open-source hardware issue is really just business as usual. You need views, RTL that compiles, a test bench that runs, and the like. It’s not too different from what is typically provided by a hardware system verification, simulation, and prototyping provider. So, from a high-level standpoint, open-source systems are not too different.

Ashish Darbari/Axiomise: I want to highlight that open source doesn't mean everything is free. In the context of test and verification, open-source tools do not automatically translate into high-quality verification, since using these tools takes expertise and a lot of human effort, which we cannot overlook. Also, in the context of open source, hardware design is a lot different from software design because software can be patched, but hardware cannot.

This brings me to the quality side of the verification issue. What is really important in the context of open source and much less so in the context of a proprietary development, is the aspect of visibility, transparency, and reproducibility. So, any tool could be used to reproduce the results, the verification plans, and the strategy. Everything is transparent. I think this takes a lot of effort and it largely gets taken for granted. If we can actually do this level of work, which is transparent and visible and is of high quality, I think a lot would work out quite nicely – in open-source verification.

Simon Davidmann/Imperas: Our focus is really about software and helping people get the software up and running. That’s why we thought RISC-V was just another ISA - we have 13 or 14 that we support. Then, we realized that all the interest around RISC-V wasn't about software but rather about how people can design the processors. That was when we moved more into verification around RISC-V.

The issue for me is about quality open-source hardware. The cost challenge isn't about individual verification tools but about actually getting verification done. I think, in reality, you should buy, borrow, and beg anything you can to get better quality verification. It shouldn’t really matter whether that quality comes from open-source or commercial tools and processes. You should use the best technologies available.

Serge Leef/DARPA: When I was in the commercial EDA space, I was never a particular believer in open source “anything,” including open-source verification. But what I have seen since then is that when people tried to accelerate simulation, general-purpose computing performance always outpaced the accelerators. With Moore's law reaching the end of the road, we can no longer expect clock rates to keep increasing. This is why more and more people are thinking about using distributed computing to support simulation and verification in the cloud. But when you go to the cloud, you often need a lot of licenses, which soon becomes prohibitively expensive.

That is why the community that I serve in the defense ecosystem is looking closely at open-source verification: because of the affordable cost. The defense ecosystem is sort of an underprivileged community because it doesn't have any coordinated purchasing capabilities and it does have very complicated contracting mandates.

One of the programs I inherited is an analog simulator called Xyce, which is specifically targeted at running on many instances with a high degree of parallelism. I have a growing community that is using it very successfully, and the cost of individual licenses is zero. So the users are able to scale way up. One of the areas that we are investigating is whether we should do something similar for digital simulation, specifically event-driven simulation.

The hope is that the simulation kernel can be better mapped onto the cloud so that it can scale linearly with the addition of instances. This would allow the use of high-performance computing (HPC) strategies and maybe incorporate F1 instances and emulators. That is why, in my community, the open-source verification issue is driven by cost.

Tao Liu/Google: This journey for us started two or three years ago, especially with the coming of the RISC-V world. What we noticed was that there was no open-source verification solution at that time. A lot of people had put effort into the design of open-source hardware but very little into the verification. So, we started to develop our own verification for the RISC-V platform. We were faced with many challenges, such as how we could generalize the verification platform to adapt to different requirements. The big question was how we could build something that was easily customized and integrated into our design environment.

Another issue that came up was the distinction between open-source and free tools. In general, open source doesn’t have to be free. Open source is something that is made available and that someone can build on top of, but whether a tool is free is another dimension of the issue.

(Image Source: DVCon 2021, Twitter)

John Blyler is a Design News senior editor, covering the electronics and advanced manufacturing spaces. With a BS in Engineering Physics and an MS in Electrical Engineering, he has years of hardware-software-network systems experience as an editor and engineer within the advanced manufacturing, IoT and semiconductor industries. John has co-authored books related to system engineering and electronics for IEEE, Wiley, and Elsevier.
