Autonomous Vehicles

Mercedes-Benz Drive Pilot is the first SAE Level 3 driver assistance system approved for use in the U.S.

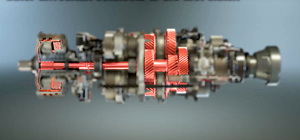


Autonomous Vehicles Drive New Business Models for Technology Adoption

Why automotive OEMs need to see HPC hardware as an investment to enable application software development.
