A combination of factors, including better depth sensors, will help create a new family of depth applications.

September 8, 2021

Edmund Neo

The availability of high-resolution, long-distance, low-power 3D sensors promises to enable a new wave of applications that use depth sensing to understand and interact with the real world more intimately. Augmented reality (AR) has become the poster child for applications that understand depth to blend the real and virtual worlds. But high-quality 3D sensing will also benefit sectors such as domestic robotics, architecture and interior design, smart retail, leisure and gaming, object scanning, and even warehousing and logistics. Just as no one knew that adding cameras to phones would enable citizen journalism, Instagram, and TikTok, it seems likely that the wide availability of 3D sensors will lead to a host of as yet unimagined capabilities and services.

Depth Sensing

We are already seeing depth sensing being used to bring the real and virtual worlds more closely together. For example, mobile phone depth-sensing applications enable users to scan real-world objects at high resolution to be manipulated in imaging and 3D design programs. Architects and interior designers are using 3D measurement apps to quickly scan whole rooms, furniture, and fittings so that they can plan modifications to these environments, using the real 3D data in their familiar design tools. Estate agents [Realtors] are using similar scanning techniques to create 3D walkthroughs of homes that they are marketing online.

Retailers are investigating using depth measurement to make their store concepts smarter by installing 3D body scanners so that shoppers can check whether clothes will fit and how they will look without having to try them on. Warehouse and logistics operations use depth scanning to drive apps that help operators plan how best to put an arbitrary combination of boxes of different sizes on a pallet for the greatest packing efficiency.

Domestic robot companies use depth sensing to help autonomous floor sweepers and floor washers map and traverse their environments most effectively. This is such a large market for depth sensing that OEMs have stepped in to create standard depth-sensing modules that robot makers can buy as a subsystem for use in their designs.

Augmented Reality

The poster child for depth-sensing technology is AR, usually implemented on mobile. There have been some successful implementations of AR in games such as Pokémon GO, retail contexts such as the Ikea ‘see how it looks in your home’ app, and some tourism and mapping applications that overlay useful information or directions on a live view of the user’s surroundings. However, these applications usually cannot interact realistically with the world because they lack a true understanding of its third dimension. Instead, the augmented views of reality they create look more like someone has placed a placard in the scene, whose size and position you can then manipulate. The apps have little sense of how real objects are related to each other in space or how their paths will interact as they move. They also have no way, for example, to put a 3D model of a chair behind a desk in a room and have its image properly occluded by the real object.

What’s needed to turn the promise of AR apps into more believable mixed-reality (MR) offerings is high-quality yet low-power depth sensors that offer long range, good resolution, and fast frame rates. These characteristics, coupled with sophisticated algorithms and fast processors, will enable a more realistic blending of real scenes with augmented overlays. The depth information these sensors deliver will also allow better photography by enhancing a camera’s ability to focus properly in almost any lighting condition. It will also simplify the work of some machine-learning algorithms by making it easier for them to separate overlapping objects in a scene from each other.

Deriving Depth

There are several approaches to deriving depth information from a scene, particularly if the sensor is being mounted in a handheld device. For example, Apple is using direct time-of-flight (ToF) techniques to create depth maps in its top-end phones. Other phone developers have tried to combine the output of a standard RGB camera chip with movement data from an inertial-measurement unit to derive depth maps by correlating multiple views of a static scene, but this approach has its limitations.

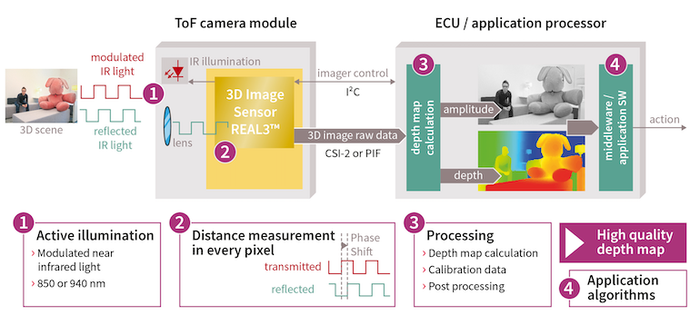

Infineon and pmd have developed a better and more robust approach to depth sensing, a sixth-generation camera that uses indirect ToF principles. This system uses a monolithic vertical cavity surface-emitting laser (VCSEL) chip to bathe a scene in modulated infrared light and a CMOS imager to capture the reflection from the scene. Changes in the phase of the returned infrared light are used to calculate depth information.
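The phase-to-depth step can be illustrated with a minimal sketch. It uses the standard indirect-ToF relation d = c·Δφ / (4π·f), where the factor of 4π rather than 2π accounts for the light travelling to the scene and back. The function name, the 100MHz modulation frequency, and the tiny phase map are assumptions made for illustration; they do not describe the Infineon and pmd implementation.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def depth_from_phase(phase_rad, mod_freq_hz):
    """Convert a measured phase shift (radians) into a distance in metres.

    The light covers the sensor-to-object distance twice (out and back),
    which is why the denominator uses 4*pi instead of 2*pi.
    """
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * mod_freq_hz)


# Hypothetical 2x2 map of per-pixel phase shifts, assuming 100 MHz modulation.
phase_map = [
    [0.0, math.pi / 2],
    [math.pi, 3 * math.pi / 2],
]
depth_map = [[depth_from_phase(p, 100e6) for p in row] for row in phase_map]
```

In a real sensor the phase for each pixel is itself estimated from several exposures of the modulated signal; this sketch starts from the phase and covers only the final conversion.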

One challenge of this approach is that the phase difference is periodic, repeating every 360 degrees. A single phase difference can therefore represent several different distances, separated by a flight time corresponding to a phase shift of one or more multiples of 360 degrees, so distance cannot be determined unambiguously. The range over which depth measurements are unambiguous can be increased by decreasing the modulation frequency of the infrared light, but doing so reduces the depth resolution of the signal. ToF sensors can square this circle by modulating the infrared light with two different frequencies, extending the range over which they can measure depth unambiguously while retaining depth resolution. The latest VCSEL drivers enable modulation frequencies of up to 200MHz, sustaining depth resolution while ensuring unambiguous position sensing out to distances of 10m. Such sensors can also be configured to run at up to 100 frames/s to enable real-time applications.
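To make the ambiguity concrete: a single modulation frequency f gives an unambiguous range of c / (2f), which is only about 0.75m at 200MHz. The sketch below shows one simple way two frequencies can resolve this, by searching for the distance at which both wrapped phases agree. The 200MHz and 190MHz pair, the function names, and the brute-force search are illustrative assumptions for a noise-free case, not the method used in any particular sensor.

```python
import math

C = 299_792_458.0  # speed of light in metres per second


def unambiguous_range(f_mod_hz):
    """Distance at which the phase wraps through a full 360 degrees."""
    return C / (2.0 * f_mod_hz)


def wrapped_phase(distance_m, f_mod_hz):
    """Phase in [0, 2*pi) that an ideal, noise-free sensor would report."""
    return (4.0 * math.pi * f_mod_hz * distance_m / C) % (2.0 * math.pi)


def resolve_distance(phase1, f1_hz, phase2, f2_hz, max_range_m):
    """Pick the candidate distance on which both frequencies agree best."""
    r1, r2 = unambiguous_range(f1_hz), unambiguous_range(f2_hz)
    base1 = phase1 / (2.0 * math.pi) * r1  # distance within f1's first wrap
    base2 = phase2 / (2.0 * math.pi) * r2  # distance within f2's first wrap
    best, best_err = None, float("inf")
    n1 = 0
    while base1 + n1 * r1 <= max_range_m:
        cand1 = base1 + n1 * r1
        # Nearest candidate implied by the second frequency.
        n2 = max(0, round((cand1 - base2) / r2))
        cand2 = base2 + n2 * r2
        err = abs(cand1 - cand2)
        if err < best_err:
            best, best_err = (cand1 + cand2) / 2.0, err
        n1 += 1
    return best


# Hypothetical frequency pair; their 10 MHz difference sets the combined range.
F1, F2 = 200e6, 190e6
true_distance = 7.3  # metres, far beyond the single-frequency limit
estimate = resolve_distance(
    wrapped_phase(true_distance, F1), F1,
    wrapped_phase(true_distance, F2), F2,
    max_range_m=10.0,
)
```

With this assumed pair, the two phase patterns only realign every c / (2 × 10MHz), roughly 15m, so every distance within 10m maps to a unique pair of phases. Measured phases are noisy in practice, so a production system would need a more robust matching step than this exhaustive comparison.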

This combination of hardware and supporting software has enabled the development of ToF depth sensors that are effective over distances of up to 10m and can derive up to 40,000 depth points from a scene 5m away. Technology advances and more aggressive integration have cut the footprint of some of these sensors by up to 35%, compared with the previous generation, and reduced their power consumption by 40%. Power efficiency is particularly important if a ToF sensor is going to be in use for long periods, for example, during AR games. The use of narrowband infrared also means that the ToF sensor can give accurate and robust depth data in almost all lighting conditions and enable a degree of ‘night vision’ imaging that can help cameras focus properly in very low lighting levels.

The market for depth sensors is growing rapidly as their utility becomes more widely appreciated and their capabilities and form factors improve. Apple’s inclusion of depth-sensing facilities in its high-end mobile phones is helping to solve the ‘chicken and egg’ problem of market development by providing companies such as Instagram and TikTok with a way to use depth data to make their apps more engaging. Meanwhile, Google is working hard to improve ARCore, the AR application support framework that has been shipped on 850 million Android phones, to make AR objects look more realistic, move more realistically, and interact with reality more believably.

This combination of better depth sensors, greater market development efforts from Apple, Google, and others, and a growing understanding of the power of mixed reality should enable the development of a new family of applications and services based on understanding the real world in depth.

Edmund Neo, Ph.D., is a Senior Manager of Product Marketing at Infineon.
