Engineers look to the human brain as a model for smarter AI. But how can we model something we don't fully understand? Brain2Bot is finding an answer from the bottom up.

November 9, 2017

The holy grail for AI research has always been creating machines that can think like (or better than) humans. Whether it's making Alexa or Google Assistant better at natural language processing or using reinforcement learning to develop AI that can teach itself, the ultimate goal has always been clear: creating smart AI that can rival, or even exceed, the level of complexity exhibited by the human brain.

Sometimes the result is a great success; other times you get robots that seem straight out of a science fiction movie ... but can't handle simple tasks like navigating stairs.


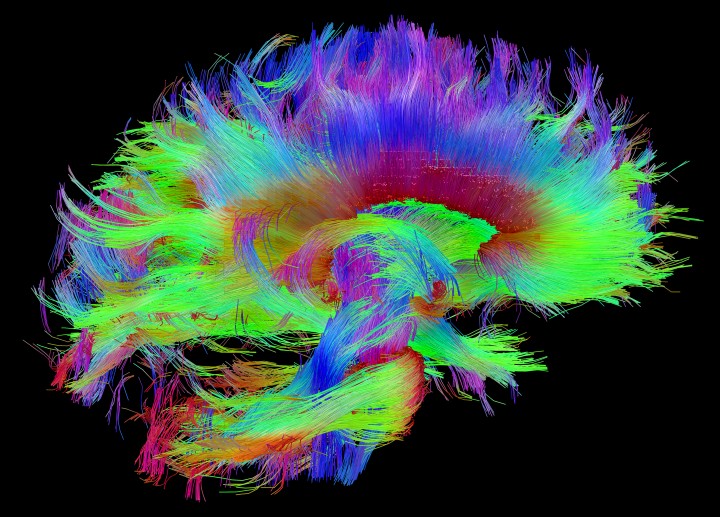

A scan of white matter fiber architecture from the Human Connectome Project. Despite efforts, we still don't have a full understanding of how the brain's connections function. (Image source: The Human Connectome Project)

We have a good understanding of the brain's anatomy but know comparatively little about the connections and wiring that make cognition possible. The Human Connectome Project, a government-funded effort begun in 2010 to fully map the connections in the brain, was supposed to do for neuroscience what the Human Genome Project did for genetics. But as of October 2017, the project has yet to be completed.

If the human brain is the goal, how are we supposed to design AI based on something we don't even fully understand ourselves? “In my research I've realized there really isn't a good theory about how the brain works. It took me toward seeing what people are trying to understand on a behavioral and molecular level,” Dr. Gunnar Newquist, founder and CEO of Brain2Bot, told Design News ahead of his AI-focused keynote at the upcoming 2017 Embedded Systems Conference in Silicon Valley. Brain2Bot calls itself a “natural intelligence” company and develops autonomous products using the fundamental principles of neuroscience. The company's first products are robots targeted at entertainment and social applications.

Newquist, who holds a PhD in cellular and molecular neuroscience from the University of Nevada, Reno, and has done postdoctoral work on learning and memory at ESPCI Paris, said that in his work he found the results weren't matching the theories of how the brain functions. He believes a bottom-up approach, understanding the underlying biology in order to grasp the brain's higher functions, is the best way to approach AI.

“It really applies in the business end of things because you have all these robots, self-driving cars, and game characters, and all of this technology is pushing toward something that is artificial cognition,” Newquist said. “If we can figure out how the natural systems work, you can translate that into something that's really useful for humans. Imagine instead of your Roomba just wandering around, you can get more of what science fiction is talking about, more of an R2D2 that is your companion.”

Ask the Fruit Flies

Can our understanding of neuroscience also help evolve AI? And if so, how do you bridge the gap between neuroscience on one side and computer science and engineering on the other? Is technology currently falling short of the potential we need from it? Or is our understanding of neuroscience limiting our ability to leverage technology? “It has to go both ways; one side can't just direct the conversation,” Newquist said.


Gunnar Newquist will be speaking at ESC Silicon Valley 2017 on how the next revolution in AI will come from an understanding of natural intelligence. (Image source: Gunnar Newquist / Brain2Bot)

“At first I thought it was a hardware issue because robots and computer chips work differently from a brain. But I think there are ways around that now. I think it's mostly a conceptual problem, and that's a programming strategy issue. I think we can get a lot of what biology has to offer in simulation, because there is a lot of simulation power in a computer.”

For Newquist, what has been holding AI back are old ideas in psychology, such as the Freudian concepts of the id and ego, which are easy to define conceptually but not biologically or physically. “When I hear even high-level computer scientists talk about the brain, I hear them trying to inject things about the brain that the brain doesn't actually do. Ideas from Freud pop up even in what people are trying to make software do. But you're never going to get to actual intelligence by trying to mimic something that doesn't actually happen,” Newquist said.

He pointed to Edward Thorndike's Law of Effect as an example. In essence, the Law of Effect is a behaviorist principle stating that people are more likely to repeat actions that produce a pleasing aftereffect in a given situation. If ordering a burger and fries makes you feel better than ordering a salad, then you're more likely to order the burger next time. But for Newquist and other critics, Thorndike's law rests on circular logic that can't be applied well to programming. The usual form of this objection is that a “pleasing” consequence is identified largely by the fact that it strengthens the behavior, so the law risks explaining behavior in terms of itself. “People are trying to mimic circular logic in the way that they're building their programming strategy. So it doesn't work because it's not logically sound and there's no way to experiment to see how it really works. So you're stuck in this loop.”

There's no biological definition of consciousness. So rather than seeking one out directly, we have to start with something we can actually define and compare.

For the team at Brain2Bot, that begins with fruit flies. “When you're looking at a fruit fly as opposed to a human, it's easier to be objective and bring in less bias because people don't think of consciousness in terms of a fruit fly,” Newquist said. Starting from an understanding of a simple insect brain, Brain2Bot wants to gradually scale up to a comprehensive grasp of higher-functioning creatures, and eventually humans.

But Newquist said the approach isn't to “evolve” an AI from simple to more complex; after all, animals can do some things far better than humans can. He describes the approach as closer to comparative anatomy. “Think of a task that different animals can do, but they have different strategies for how to do it. If you look at how all of that works, you get to an underlying principle of how a feature happens,” he explained. “I think that's a more powerful approach to understanding the principle of intelligence than the mechanism of that specific animal. So you really have to compare a lot of animals in order to get the gist of how it works.”

Who Needs R2D2?

The idea of a robot companion like R2 might sound like an attractive ambition to any engineer who grew up on Star Wars and other science fiction, but does the world really need one? Collaborative robots in the factory are usually dedicated to single tasks, and even when reports find that AI is adding jobs to the market, it is because of its ability to augment very specific, even niche, jobs and tasks.


A prototype of one of Brain2Bot's early robots that will use natural intelligence-based AI to interact with users. (Image source: Brain2Bot)

So does the world need a robot with general-purpose intelligence? Newquist believes the value of understanding consciousness lies in creating AI that can adapt very rapidly and function in areas outside of where AI is traditionally deployed. “A lot of what AI is doing now is data analytics; human brains are terrible at taking tons of data and making sense of it,” he said. “I'd say there are specific applications to this, but most of them are real-world applications where either you can't get data or it's so unstructured that traditional AI won't work. If you have something that learns on the fly, adapts on the fly, and doesn't need a lot of experience, these are the types of adaptations of the software that we're looking into.”

Newquist pointed to the robots of the DARPA Challenge as a prime example. “Things that are so simple to us are what the robots fail at. But they can crunch tons and tons of numbers that we would never ever be able to,” he said. “So we're thinking, what are the things animals do well that computers don't, and let's fix those things first.”

There's also the value of having AI that is able to understand and process emotions. “Having [a robot] interact with humans requires personality, and various personalities respond differently. So if you can add that level onto the robot, you get this much more charismatic, intuitive interaction between the robot and humans for applications like healthcare and user interface.”

Somewhere in the Rain Forest

Right now Brain2Bot is concentrating its efforts on entertainment applications, creating toys and consumer-facing robots. Newquist said these “entertaining but not 100% useful” robots are great for development. “We can get some things wrong if we start with a toy before we move to something bigger.”

That bigger ambition? A level-5 autonomous car. “The reason is we just find [level 5 autonomy] interesting,” he said. “But to get to that intelligence to drive a car in any condition, on any road, without a map, you need about human-level cognition. And there's a lot of things that have to come together for a vehicle to navigate uncharted territory. The need to communicate with passengers, for example, is one area where companies are lagging way behind.”

The language of biology is already built into how we think and talk about AI. The very concepts of deep learning and neural networks are based on the human brain: they seek to achieve higher levels of AI by mimicking the way our own neurons link and fire together. For Newquist and his team, the disconnect is that the way we program AI isn't growing along with our understanding of neurology.

“Having an appreciation of biology for engineers is important because this is really where all the inspiration for this comes from,” he said. “What if the next breakthrough of intelligence is hiding in the Amazon rain forest? There might be some animal out there that might really help us understand how biology works...”

As things currently stand, we don't know enough about the human brain to create an AI model with human-level cognition. But Brain2Bot's step-by-step approach, starting with simpler brains, can give us an understanding of the building blocks we need to reach that point in the future. “There isn't anything that humans do that isn't in a simpler form in much simpler animals,” Newquist said. “If we take it step by step, even if we don't get to human intelligence, we're going to get to some useful features along the way, and it will be way better than it is today.”

In a keynote presentation at ESC Silicon Valley, taking place Dec. 5-7, 2017, Gunnar Newquist, Founder & CEO of Brain2Bot Inc., explores how the next revolution in AI will come from an understanding of natural intelligence.

Chris Wiltz is a Senior Editor at Design News, covering emerging technologies including AI, VR/AR, and robotics.
