When You Train Robots With VR, You Only Have to Teach Them Once

What if you only had to show a robot a task once? OpenAI has developed an artificial intelligence system that lets a robot learn a task from a single virtual demonstration and then repeat it in the real world, even when the setup changes.

July 14, 2017

The best workers are the ones you can show a task once and then trust to do it perfectly from then on. Collaborative robots like Rethink Robotics' Baxter can mimic assembly tasks after a real-world walkthrough, but teaching robots this way can be a time-consuming, physical process. And even once a robot is taught, it won't necessarily be able to adapt dynamically to a changed situation. A single misplaced part in a bin, for example, could derail the robot's entire process.

OpenAI, a nonprofit AI research company, has developed a way around this: a system that trains robots in virtual reality (VR) environments. When successfully deployed, the system allows a robot to learn a task after seeing it only once.

|

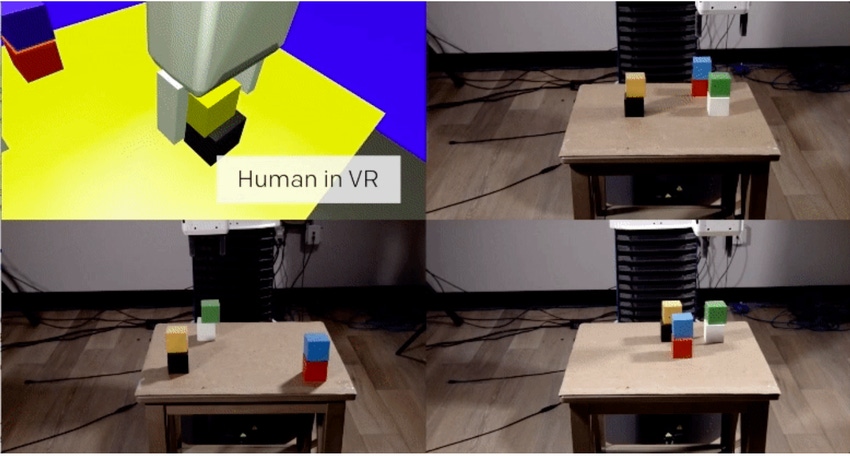

With OpenAI's system, a robot can learn a behavior from a single demonstration via a simulator, then reproduce that behavior in different setups in reality. (Image source: OpenAI) |

OpenAI, whose backers include Tesla CEO Elon Musk, PayPal co-founder Peter Thiel, and Y Combinator co-founder Jessica Livingston, has built a working prototype of the system that lets a robot learn and dynamically perform a block-stacking task. The hope is that this will be a stepping stone toward robots and cobots that can learn and adapt to even more complex tasks in the future.

“Imitation allows humans to learn new behaviors rapidly. We'd like our robots to learn this way too,” Josh Tobin, a member of the technical staff at OpenAI, explained in a video released by OpenAI.

The system works by combining two deep neural networks, one for vision and one for imitation. The vision network processes what the robot's camera sees, and the imitation network then works out, from that view, which actions the robot needs to take to perform its assigned task.
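The article doesn't describe how the two networks are wired together, so the following is only a minimal sketch of the perceive-decide-act loop it describes, assuming each network can be treated as a plain function. A "scene" here is a hypothetical dict of block labels to positions rather than camera pixels, and `run_pipeline`, `toy_policy`, and `toy_execute` are illustrative names with hand-written stand-ins in place of trained networks.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical simplified types. The real system reads raw camera pixels and
# outputs robot commands; here a "scene" is a dict of block label -> (x, y, z).
Scene = Dict[str, Tuple[float, float, float]]
Action = Tuple[str, Tuple[float, float, float]]  # (block to move, destination)


def run_pipeline(
    capture_frame: Callable[[], Scene],
    vision_net: Callable[[Scene], Scene],
    imitation_net: Callable[[Scene], Optional[Action]],
    execute: Callable[[Action], None],
    max_steps: int = 10,
) -> List[Action]:
    """Closed loop: perceive, decide, act, then perceive again."""
    taken: List[Action] = []
    for _ in range(max_steps):
        estimate = vision_net(capture_frame())  # vision: frame -> block positions
        action = imitation_net(estimate)        # imitation: positions -> next move
        if action is None:                      # policy reports the task is done
            break
        execute(action)
        taken.append(action)
    return taken


# Toy usage with hand-written stand-ins for both networks.
BLOCK_HEIGHT = 0.05      # hypothetical block height in meters
ORDER = ["A", "B", "C"]  # hypothetical goal: B on A, then C on B
world: Scene = {"A": (0.0, 0.0, 0.0), "B": (0.3, 0.1, 0.0), "C": (-0.2, 0.4, 0.0)}


def toy_policy(scene: Scene) -> Optional[Action]:
    """Return the next block that is not yet in its tower slot, or None."""
    bx, by, _ = scene[ORDER[0]]
    for level, name in enumerate(ORDER[1:], start=1):
        target = (bx, by, level * BLOCK_HEIGHT)
        if scene[name] != target:
            return (name, target)
    return None


def toy_execute(action: Action) -> None:
    """Pretend robot: teleport the block to its destination."""
    name, destination = action
    world[name] = destination


actions = run_pipeline(lambda: dict(world), lambda frame: frame, toy_policy, toy_execute)
print(actions)
```

Because the loop re-reads the scene after every move, the policy always acts on what the vision stage currently reports rather than on a fixed script.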

The vision network was trained using a method called domain randomization. Rather than trying to make simulated images photorealistic, domain randomization varies the simulated scenes so widely that real camera images look to the network like just another variation. “We generate thousands of object locations, light settings, and surface textures and showed them to the neural network,” Tobin said. “After training, the network can find blocks in the physical world, even though it has never seen real images from a camera before.”
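As a rough illustration of the data-generation side of domain randomization, the sketch below samples randomized scene parameters (block positions, colors, lighting, and a small camera jitter) and pairs each scene with its ground-truth block positions, which a simulator knows exactly. The parameter ranges, the `SceneParams` fields, and the function names are all hypothetical, and the rendering step that would turn each scene into an image is deliberately left out.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

RGB = Tuple[int, int, int]


@dataclass
class SceneParams:
    """One randomized simulated scene (all ranges are illustrative guesses)."""
    block_xy: List[Tuple[float, float]]  # block positions on the table, meters
    block_colors: List[RGB]
    table_color: RGB
    light_intensity: float
    light_direction: Tuple[float, float, float]
    camera_offset: Tuple[float, float, float]  # small jitter around a nominal pose


def random_rgb(rng: random.Random) -> RGB:
    return (rng.randint(0, 255), rng.randint(0, 255), rng.randint(0, 255))


def sample_scene(rng: random.Random, n_blocks: int = 3) -> SceneParams:
    """Draw every visual property independently so no single 'look' dominates."""
    return SceneParams(
        block_xy=[(rng.uniform(-0.3, 0.3), rng.uniform(-0.3, 0.3)) for _ in range(n_blocks)],
        block_colors=[random_rgb(rng) for _ in range(n_blocks)],
        table_color=random_rgb(rng),
        light_intensity=rng.uniform(0.2, 2.0),
        light_direction=(rng.uniform(-1, 1), rng.uniform(-1, 1), rng.uniform(-1, -0.2)),
        camera_offset=(rng.uniform(-0.02, 0.02), rng.uniform(-0.02, 0.02), rng.uniform(-0.02, 0.02)),
    )


def generate_training_set(n_scenes: int, seed: int = 0) -> List[Tuple[SceneParams, List[Tuple[float, float]]]]:
    """Pairs of (scene to render, ground-truth block positions).

    A real pipeline would pass each SceneParams to a renderer to get an image;
    the simulator already knows the exact positions, so labels come for free.
    """
    rng = random.Random(seed)
    dataset = []
    for _ in range(n_scenes):
        scene = sample_scene(rng)
        dataset.append((scene, list(scene.block_xy)))
    return dataset


data = generate_training_set(1000)
print(len(data), data[0][1])
```

The vision network would then be trained to predict the label from the rendered image. Since colors, lighting, and camera pose change in every sample, the only thing that reliably predicts the label is the blocks' geometry.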

The imitation network was trained using one-shot imitation learning. In one-shot imitation, a network that has been trained on many demonstrations of related tasks watches a single demonstration of a task (e.g., stacking blocks into a particular tower) and then works out how to achieve the same result from whatever situation it starts in. Combined with the vision network, this means the robot can figure out on its own how to stack the blocks under a variety of conditions. The blocks don't need to be laid out in the same arrangement each time, because the robot can recognize each block and then place it where it needs it.
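The key idea is the input/output contract: one demonstration plus the current scene go in, and actions suited to that scene come out. In OpenAI's system this mapping is learned by a neural network. The sketch below instead hand-codes it with simple rules, purely to show how a single demonstration can drive behavior from a different starting arrangement. `infer_goal`, `plan`, the block height, and the assumption that every block starts on the table are all hypothetical.

```python
from typing import Dict, List, Tuple

Pos = Tuple[float, float, float]
Scene = Dict[str, Pos]   # block label -> (x, y, z) in meters
Move = Tuple[str, Pos]   # (block to move, destination)
BLOCK_HEIGHT = 0.05      # hypothetical block height


def infer_goal(demo: List[Scene]) -> List[List[str]]:
    """Read the task off the final frame of ONE demonstration: blocks sharing
    an (x, y) footprint form a tower, ordered bottom to top by z."""
    final = demo[-1]
    towers: Dict[Tuple[float, float], List[str]] = {}
    for name, (x, y, _) in final.items():
        towers.setdefault((round(x, 3), round(y, 3)), []).append(name)
    return [sorted(names, key=lambda n: final[n][2]) for names in towers.values()]


def plan(goal: List[List[str]], scene: Scene) -> List[Move]:
    """Rebuild the demonstrated towers from wherever the blocks are now.
    Assumes every block currently rests directly on the table."""
    moves: List[Move] = []
    for tower in goal:
        bx, by, _ = scene[tower[0]]  # leave each tower's base block where it lies
        for level, name in enumerate(tower[1:], start=1):
            moves.append((name, (bx, by, level * BLOCK_HEIGHT)))
    return moves


# One demonstration (start and end frames): C on B on A.
demo = [
    {"A": (0.1, 0.1, 0.0), "B": (0.2, -0.1, 0.0), "C": (-0.1, 0.2, 0.0)},
    {"A": (0.1, 0.1, 0.0), "B": (0.1, 0.1, 0.05), "C": (0.1, 0.1, 0.1)},
]
goal = infer_goal(demo)

# A new, different arrangement of the same blocks.
scene = {"A": (-0.2, 0.3, 0.0), "B": (0.25, -0.1, 0.0), "C": (0.0, 0.0, 0.0)}
moves = plan(goal, scene)
for name, dest in moves:
    scene[name] = dest
print(goal, moves)
```

After the moves are applied, reading the goal back off the new scene gives the same tower as the demonstration, even though the blocks started somewhere else.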

OpenAI is not the only group using virtual simulations to train robots. Earlier this year, GPU maker Nvidia announced Isaac, a system for training robots in virtual environments using reinforcement learning (having a robot attempt a task over and over, rewarding it when it succeeds, until it gets it right). Isaac in part uses OpenAI Gym, an open-source toolkit released by OpenAI for developing and comparing reinforcement learning algorithms.
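To make "over and over until it gets it right" concrete, here is a minimal tabular Q-learning sketch on a toy corridor task. It does not reflect Isaac's actual API or algorithm. The environment loosely follows the `reset()`/`step()` pattern popularized by OpenAI Gym but does not import Gym. `CorridorEnv`, `choose`, `train`, and all hyperparameters are hypothetical.

```python
import random
from typing import List, Tuple


class CorridorEnv:
    """Toy task: start at cell 0, reach the last cell. Loosely modeled on the
    reset()/step() pattern popularized by OpenAI Gym, but it is NOT a Gym
    environment and does not import the library."""

    def __init__(self, length: int = 6) -> None:
        self.length = length
        self.pos = 0

    def reset(self) -> int:
        self.pos = 0
        return self.pos

    def step(self, action: int) -> Tuple[int, float, bool]:
        # action 0 = move left, 1 = move right; walls clamp the position
        delta = 1 if action == 1 else -1
        self.pos = max(0, min(self.length - 1, self.pos + delta))
        done = self.pos == self.length - 1
        return self.pos, (1.0 if done else 0.0), done


def choose(q_row: List[float], rng: random.Random, epsilon: float) -> int:
    """Epsilon-greedy: explore at random sometimes (or on ties), else exploit."""
    if rng.random() < epsilon or q_row[0] == q_row[1]:
        return rng.randrange(2)
    return 0 if q_row[0] > q_row[1] else 1


def train(env: CorridorEnv, episodes: int = 500, alpha: float = 0.5,
          gamma: float = 0.9, epsilon: float = 0.2, seed: int = 0) -> List[List[float]]:
    """Tabular Q-learning: repeat the task many times, nudging each value
    estimate toward reward + discounted best next value."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(env.length)]
    for _ in range(episodes):
        state = env.reset()
        for _ in range(200):  # cap episode length
            action = choose(q[state], rng, epsilon)
            next_state, reward, done = env.step(action)
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = train(CorridorEnv())
# Greedy action in each non-terminal cell after training.
policy = ["right" if right > left else "left" for left, right in q_table[:-1]]
print(policy)
```

Early episodes are essentially random wandering. As the reward at the goal propagates backward through the value table, the agent settles on moving right from every cell. Real robot training works on a far larger scale, but the trial-reward-update loop is the same idea.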

A video released by OpenAI further explains its virtual training system:

ARM Technology Drives the Future. Join 4,000+ embedded systems specialists for three days of ARM® ecosystem immersion you can’t find anywhere else. ARM TechCon, Oct. 24-26, 2017, in Santa Clara, CA. Register here for the event, hosted by Design News’ parent company UBM.


Chris Wiltz is the Managing Editor of Design News.
